
Beyond Adversarial Learning: Data-Flow Attacks on Deep Learning Systems

1.


Kang Li (李康)

University of Georgia

Collaborators: Qixue Xiao, Yufei Chen, Deyue Zhang, and many others from Qihoo 360

360 Intelligent Security Research Institute

2.

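About me: head of the 360 Intelligent Security Research Team

Founder of the Disekt and SecDawgs CTF teams

Early mentor of the xCTF and Blue-Lotus teams

Prize winner in the 2016 DARPA Cyber Grand Challenge finals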


3.

Successful applications of AI

4.

Why should we care about AI security?

5.

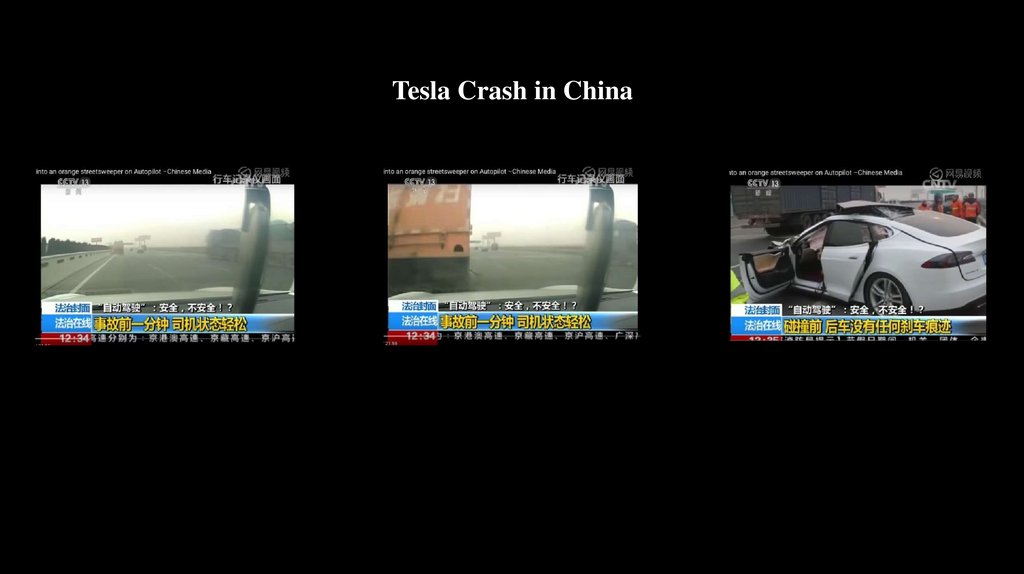

Tesla crash in China

6.

Uber self-driving car accident

7.

Why should we care about AI security? (reason #2)

8.

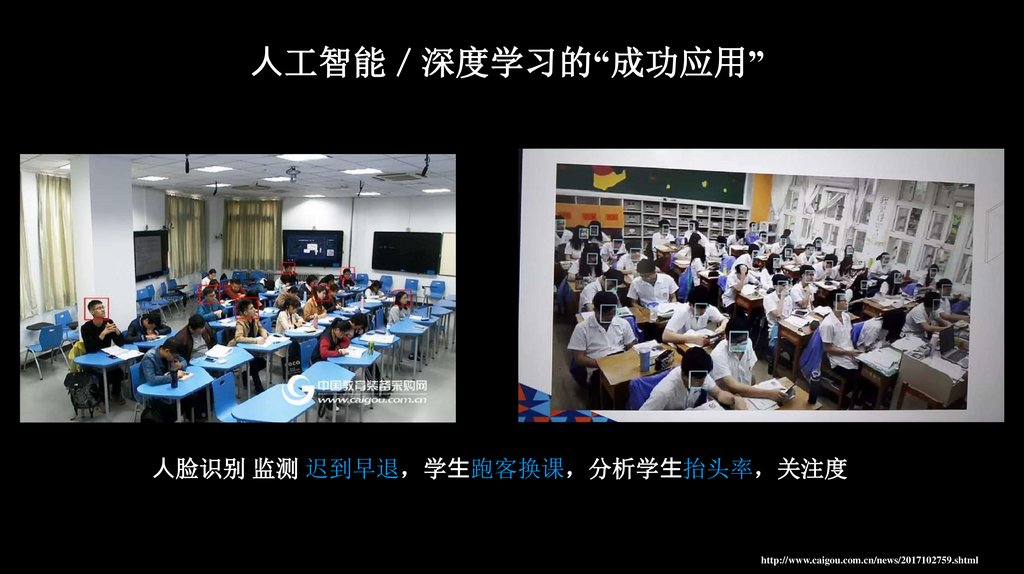

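“Successful applications” of AI and deep learning: face recognition used to monitor late arrivals and early departures, catch students skipping or swapping classes, and measure how often students look up and how attentive they are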

http://www.caigou.com.cn/news/2017102759.shtml

9.

“Successful applications” of AI and deep learning: using machine learning to mass-produce posts and fake reviews

“Leverage deep learning language models (Recurrent Neural Networks or RNNs) to automate the generation of fake online reviews for products and services”

https://www.schneier.com/blog/archives/2017/09/new_techniques_.html

10.

“Successful applications” of AI and deep learning: using machine learning to break image CAPTCHAs

Defeating Captcha with Learning

https://deepmlblog.wordpress.com/2016/01/03/how-to-breaka-captcha-system/

I’m not a human: Breaking the Google reCAPTCHA

https://www.blackhat.com/docs/asia-16/materials/asia-16Sivakorn-Im-Not-a-Human

11.

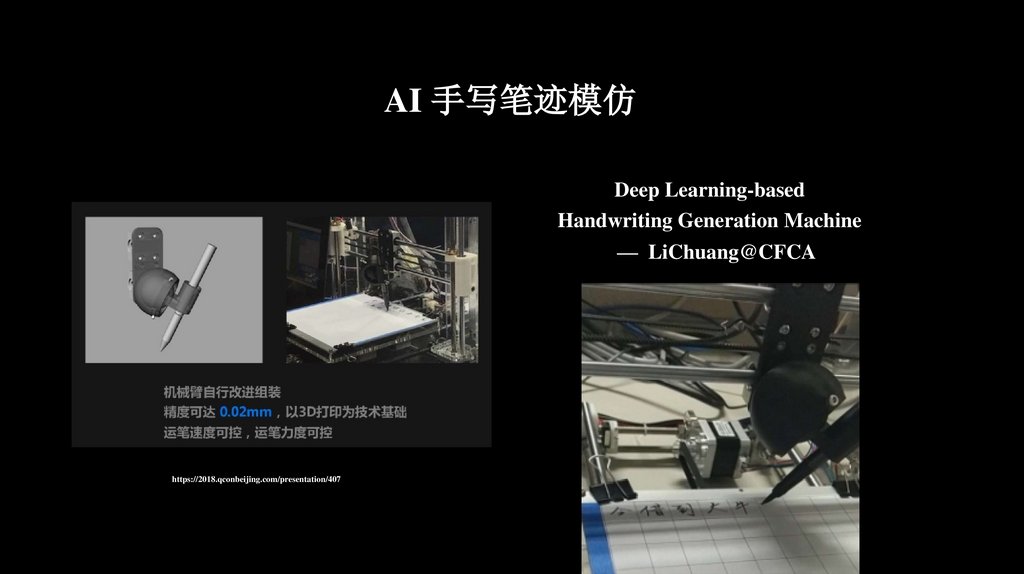

AI handwriting imitation: Deep Learning-based Handwriting Generation Machine (LiChuang@CFCA)

https://2018.qconbeijing.com/presentation/407

12.

The need to counter AI

13.

How to attack an AI face/cat-face recognition system

https://www.pyimagesearch.com/2016/06/20/detecting-cats-in-images-with-opencv/

14.


https://www.wired.com/story/tried-to-beat-face-id-and-failed-so-far/

15.

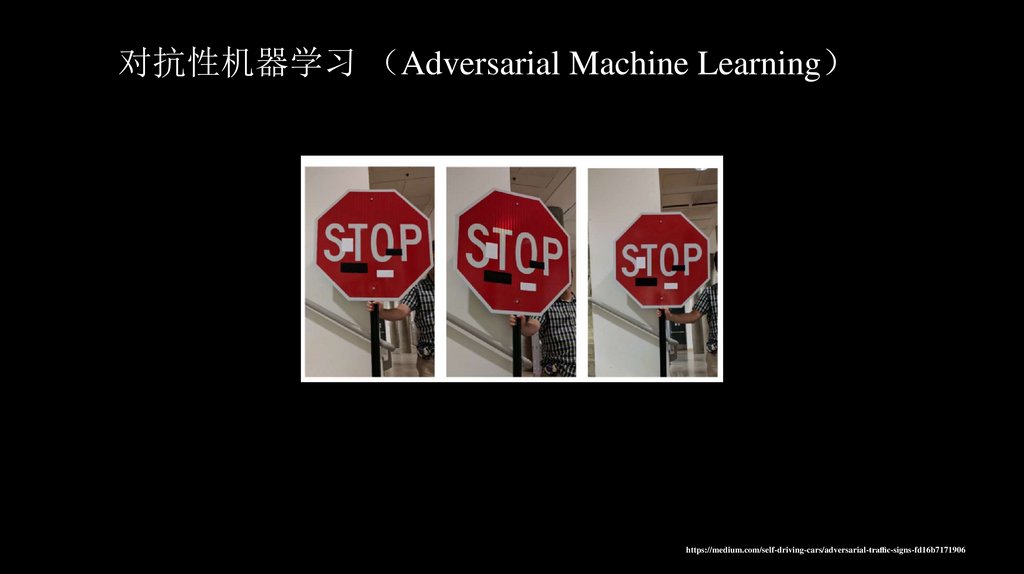

Adversarial Machine Learning

https://medium.com/self-driving-cars/adversarial-traffic-signs-fd16b7171906

16.

Beyond Adversarial Machine Learning: attack methods other than adversarial examples

17.

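Attacking AI systems by exploiting software vulnerabilities

AI framework vulnerabilities (CVEs) discovered by the Qihoo 360 security team in summer 2017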

18.

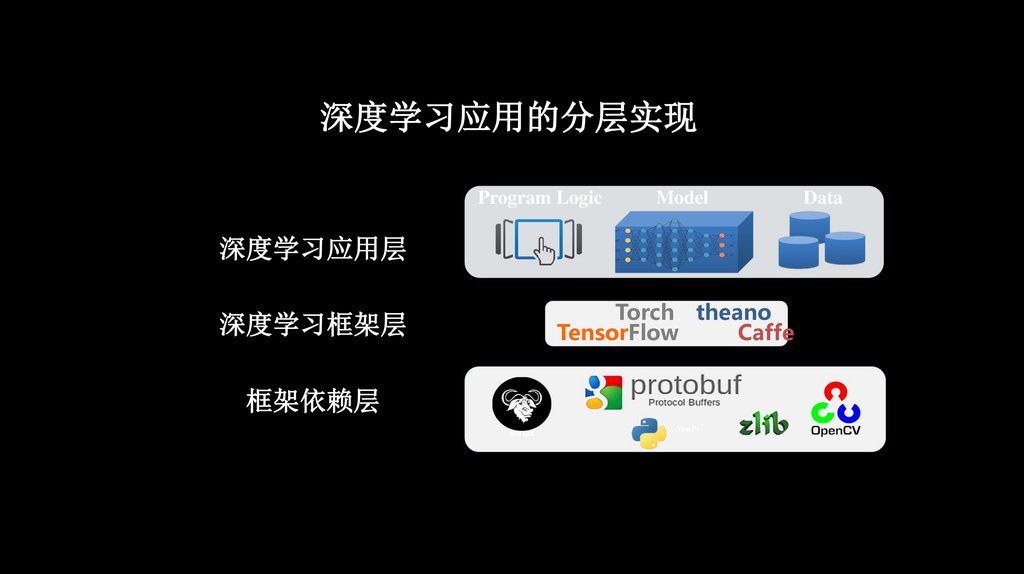

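Layered implementation of deep learning applications

• Deep learning application layer: Program Logic, Model, Data

• Deep learning framework layer: TensorFlow, Caffe, Torch, Theano

• Framework dependency layer: GNU LibC, NumPy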

19.

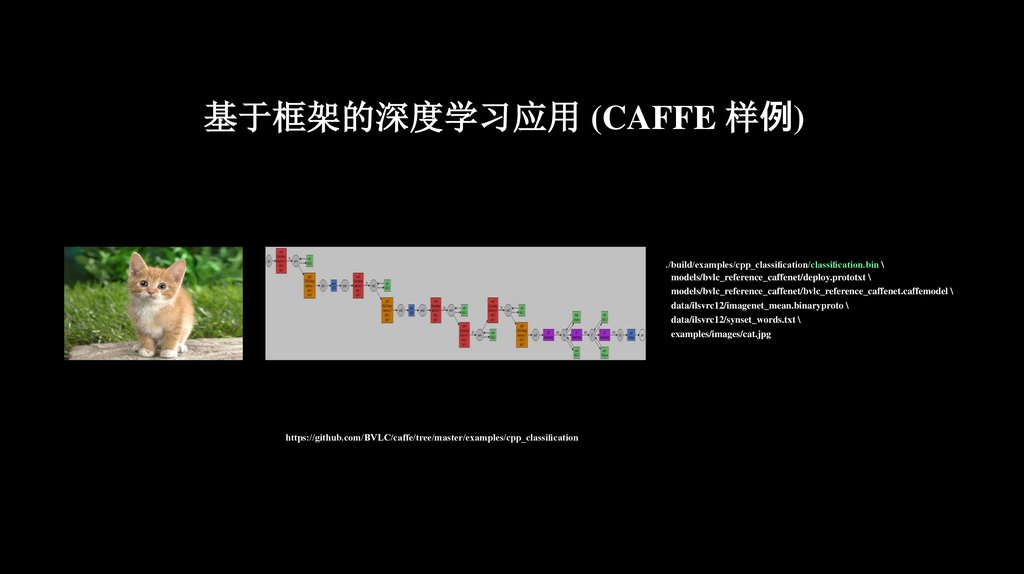

A framework-based deep learning application (Caffe example)

```shell
./build/examples/cpp_classification/classification.bin \
  models/bvlc_reference_caffenet/deploy.prototxt \
  models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel \
  data/ilsvrc12/imagenet_mean.binaryproto \
  data/ilsvrc12/synset_words.txt \
  examples/images/cat.jpg
```

https://github.com/BVLC/caffe/tree/master/examples/cpp_classification

20.

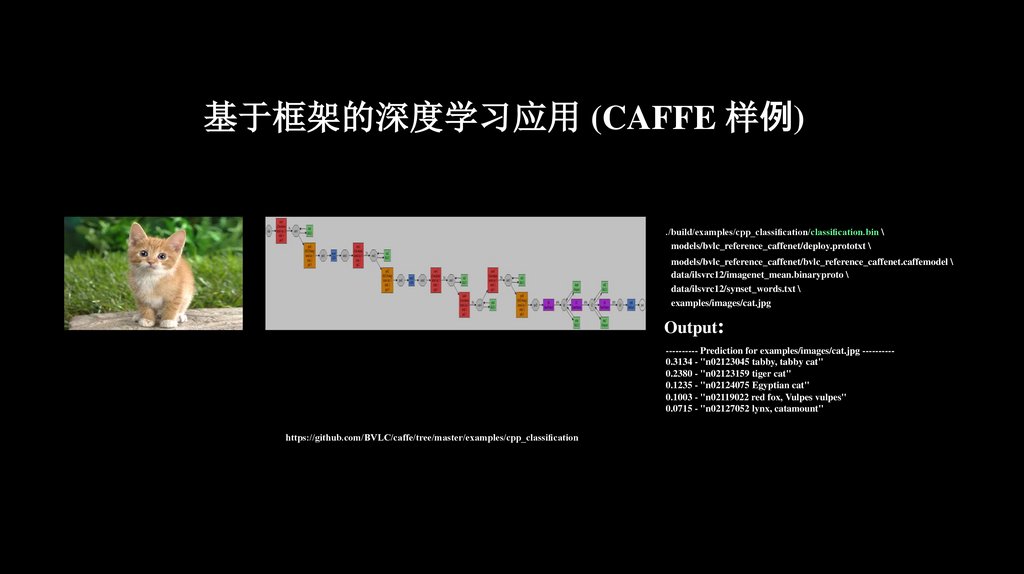

Output:

```text
---------- Prediction for examples/images/cat.jpg ----------
0.3134 - "n02123045 tabby, tabby cat"
0.2380 - "n02123159 tiger cat"
0.1235 - "n02124075 Egyptian cat"
0.1003 - "n02119022 red fox, Vulpes vulpes"
0.0715 - "n02127052 lynx, catamount"
```

21.

22.

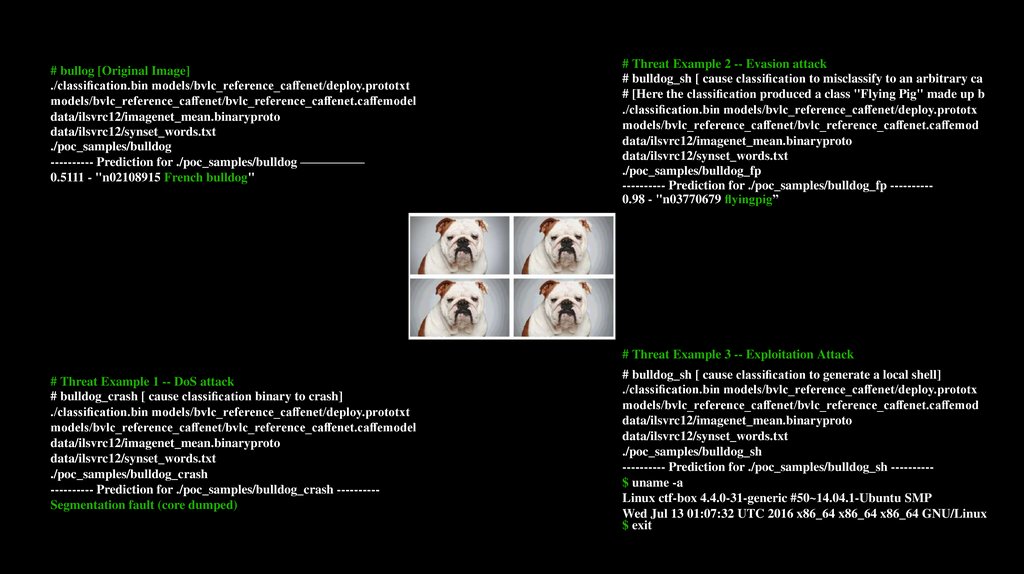

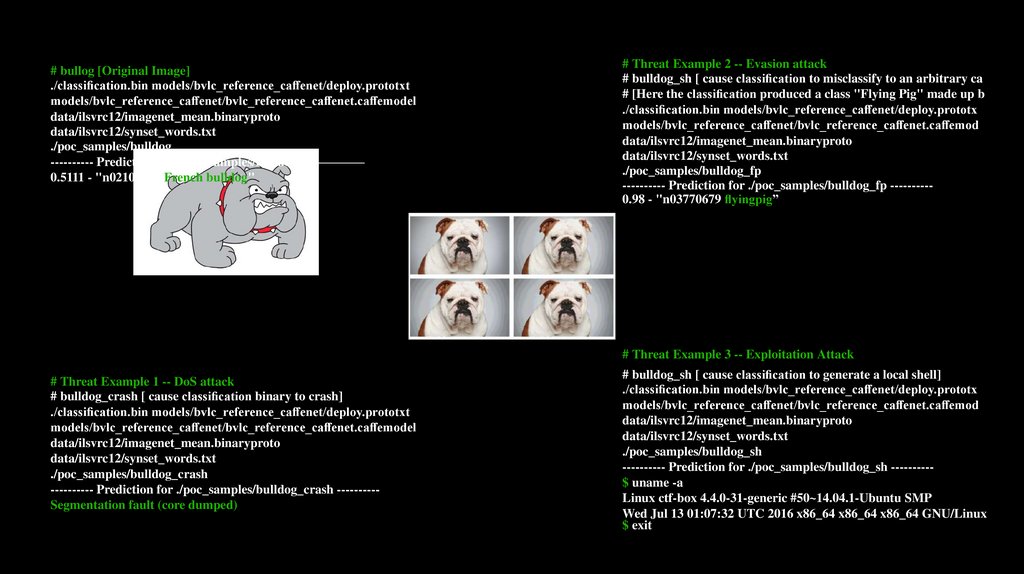

```text
# bulldog [Original Image]
./classification.bin models/bvlc_reference_caffenet/deploy.prototxt \
    models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel \
    data/ilsvrc12/imagenet_mean.binaryproto \
    data/ilsvrc12/synset_words.txt \
    ./poc_samples/bulldog
---------- Prediction for ./poc_samples/bulldog ----------
0.5111 - "n02108915 French bulldog"

# Threat Example 1 -- DoS attack
# bulldog_crash [cause classification binary to crash]
./classification.bin models/bvlc_reference_caffenet/deploy.prototxt \
    models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel \
    data/ilsvrc12/imagenet_mean.binaryproto \
    data/ilsvrc12/synset_words.txt \
    ./poc_samples/bulldog_crash
---------- Prediction for ./poc_samples/bulldog_crash ----------
Segmentation fault (core dumped)

# Threat Example 2 -- Evasion attack
# bulldog_fp [cause classification to misclassify to an arbitrary ca…
# [Here the classification produced a class "Flying Pig" made up b…
./classification.bin models/bvlc_reference_caffenet/deploy.prototxt \
    models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel \
    data/ilsvrc12/imagenet_mean.binaryproto \
    data/ilsvrc12/synset_words.txt \
    ./poc_samples/bulldog_fp
---------- Prediction for ./poc_samples/bulldog_fp ----------
0.98 - "n03770679 flyingpig"

# Threat Example 3 -- Exploitation Attack
# bulldog_sh [cause classification to generate a local shell]
./classification.bin models/bvlc_reference_caffenet/deploy.prototxt \
    models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel \
    data/ilsvrc12/imagenet_mean.binaryproto \
    data/ilsvrc12/synset_words.txt \
    ./poc_samples/bulldog_sh
---------- Prediction for ./poc_samples/bulldog_sh ----------
$ uname -a
Linux ctf-box 4.4.0-31-generic #50~14.04.1-Ubuntu SMP Wed Jul 13 01:07:32 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux
$ exit
```

23.

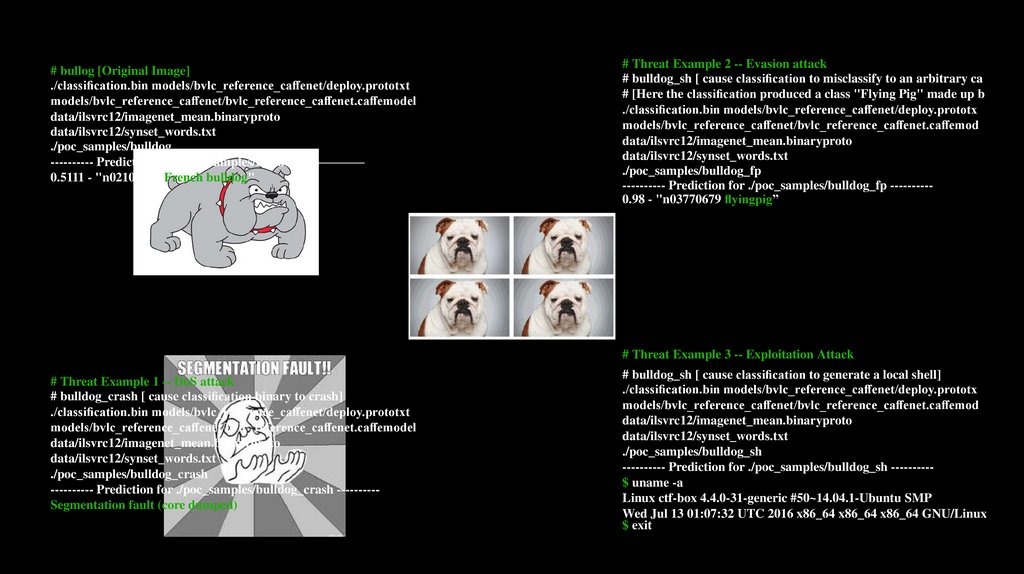


24.

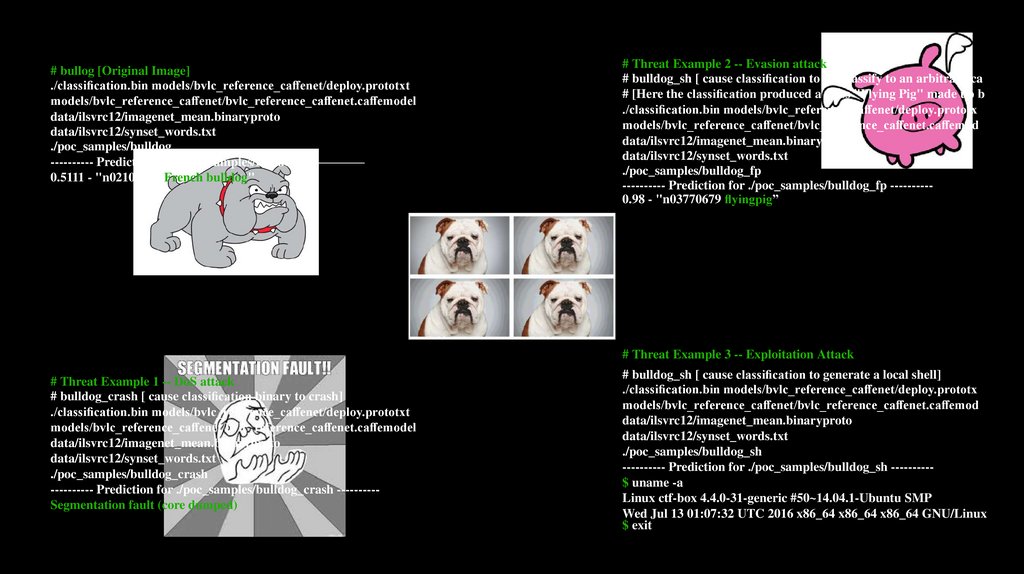


25.

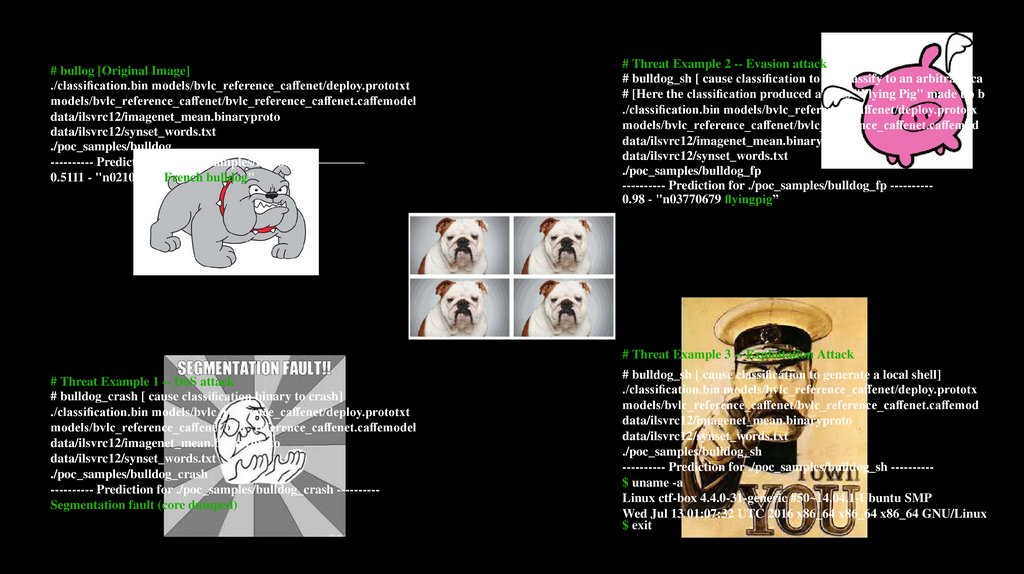


26.


27.

Data-flow attacks on deep learning systems

28.

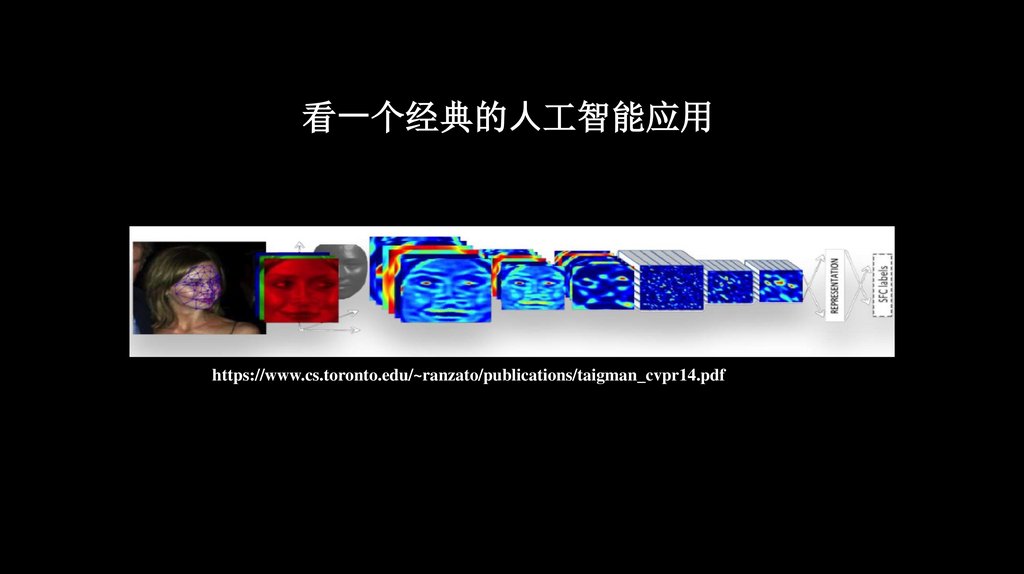

A look at a classic AI application

https://www.cs.toronto.edu/~ranzato/publications/taigman_cvpr14.pdf

29.

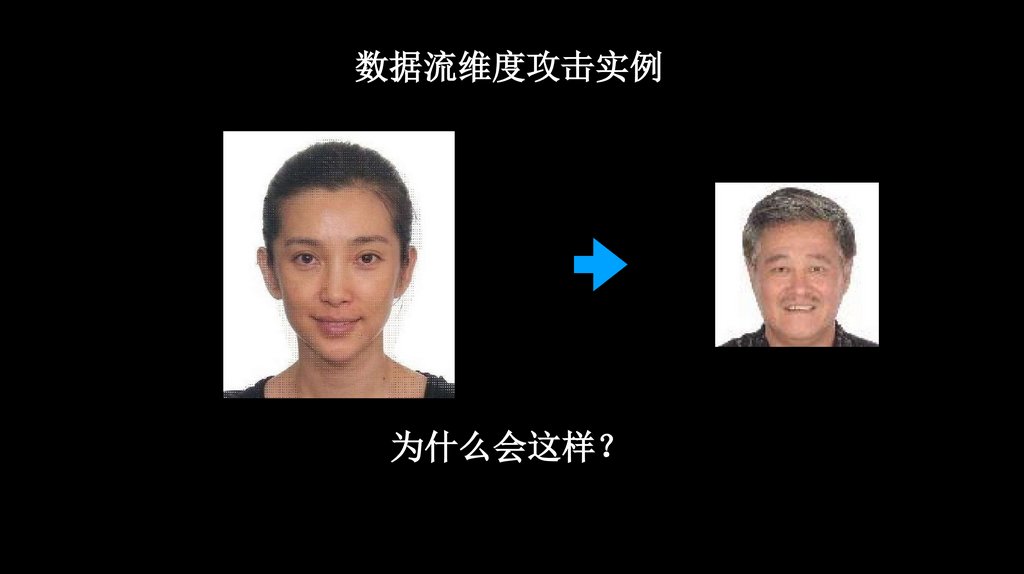

A data-flow dimension attack example: who will the AI face recognizer think this is?

30.

A data-flow dimension attack example: why does this happen?

31.

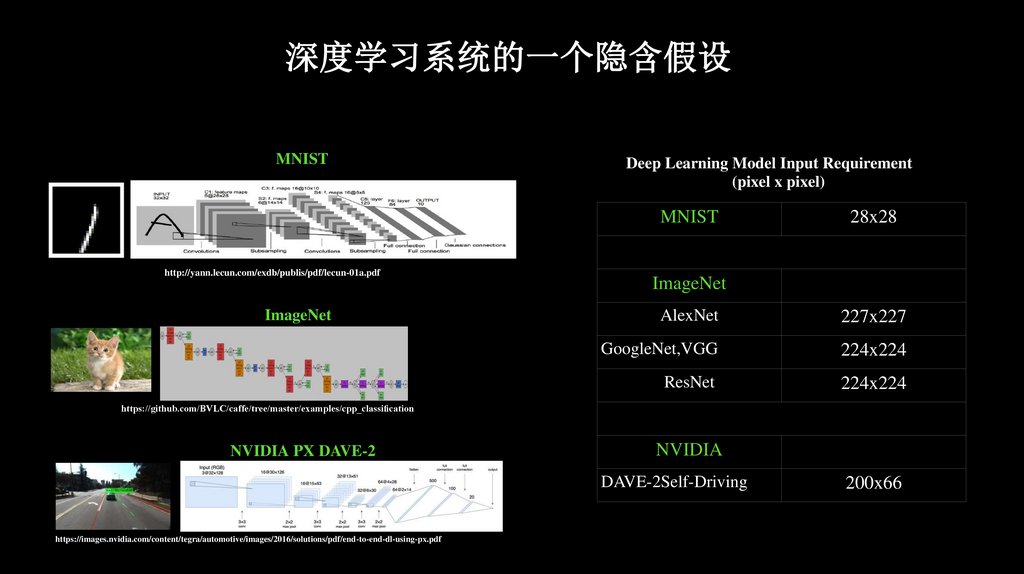

An implicit assumption in deep learning systems: every model expects a fixed input size

| Dataset / model | Deep learning model input requirement (pixel x pixel) |
|---|---|
| MNIST | 28x28 |
| ImageNet, AlexNet | 227x227 |
| ImageNet, GoogLeNet and VGG | 224x224 |
| ImageNet, ResNet | 224x224 |
| NVIDIA PX DAVE-2 self-driving | 200x66 |

Sources:

http://yann.lecun.com/exdb/publis/pdf/lecun-01a.pdf

https://github.com/BVLC/caffe/tree/master/examples/cpp_classification

https://images.nvidia.com/content/tegra/automotive/images/2016/solutions/pdf/end-to-end-dl-using-px.pdf

32.

What happens if the input dimensions do not match the model's input dimensions?

33.

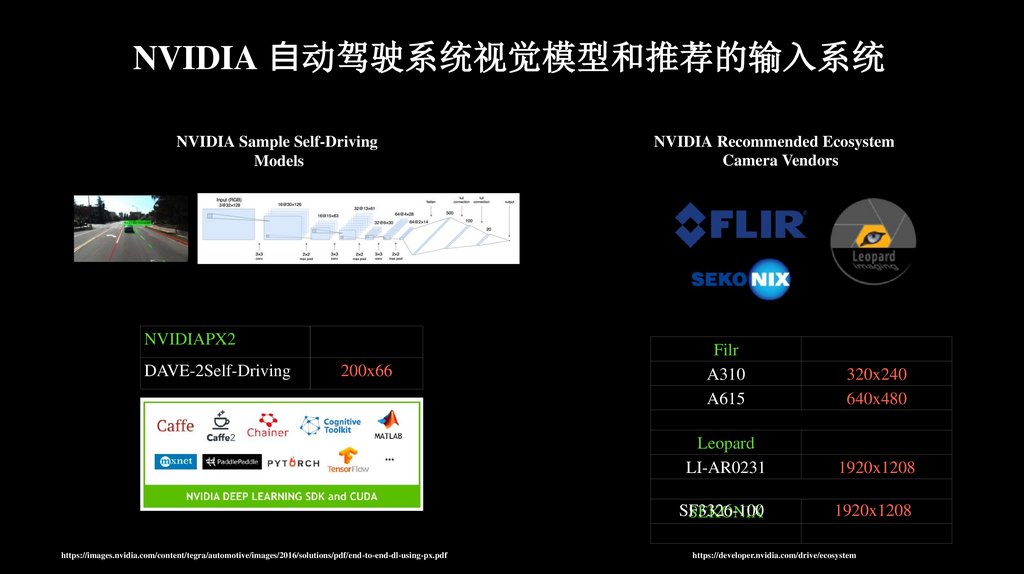

NVIDIA self-driving vision model and its recommended input systems

NVIDIA sample self-driving model: DAVE-2 on NVIDIA PX2, input size 200x66

https://images.nvidia.com/content/tegra/automotive/images/2016/solutions/pdf/end-to-end-dl-using-px.pdf

NVIDIA recommended ecosystem, camera vendors:

| Vendor | Camera | Resolution |
|---|---|---|
| FLIR | A310 | 320x240 |
| FLIR | A615 | 640x480 |
| Leopard | LI-AR0231 | 1920x1208 |
| SEKONIX | SF3326-100 | 1920x1208 |

https://developer.nvidia.com/drive/ecosystem
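To put a number on the mismatch, the sketch below computes how far each camera image must be shrunk to reach the 200x66 DAVE-2 input. It also estimates what fraction of camera pixels can influence the model at all, under our own assumption of a nearest-neighbor downscaler that copies exactly one source pixel per output pixel. The resolutions come from the slide; the function names are hypothetical.

```python
# Camera resolutions (width, height) taken from the NVIDIA ecosystem slide.
CAMERAS = {
    "FLIR A310": (320, 240),
    "FLIR A615": (640, 480),
    "Leopard LI-AR0231": (1920, 1208),
    "SEKONIX SF3326-100": (1920, 1208),
}
MODEL_INPUT = (200, 66)  # DAVE-2 input size (width, height)


def scale_factors(src, dst):
    """Per-axis downscaling factors from resolution src to resolution dst."""
    return src[0] / dst[0], src[1] / dst[1]


def sampled_fraction(src, dst):
    """Fraction of source pixels read by a nearest-neighbor downscaler that
    copies exactly one source pixel per output pixel (an assumption)."""
    return (dst[0] * dst[1]) / (src[0] * src[1])


for name, res in CAMERAS.items():
    sx, sy = scale_factors(res, MODEL_INPUT)
    frac = sampled_fraction(res, MODEL_INPUT)
    print(f"{name:20s} {sx:5.1f}x {sy:5.1f}x  {frac:.2%} of pixels sampled")
```

For the 1920x1208 cameras this works out to about 9.6x horizontally and 18.3x vertically, so under the one-pixel-per-output assumption only about 0.57% of the captured pixels reach the model. Bilinear or area resampling reads more source pixels per output pixel, so the exact fraction depends on the scaler.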

34.

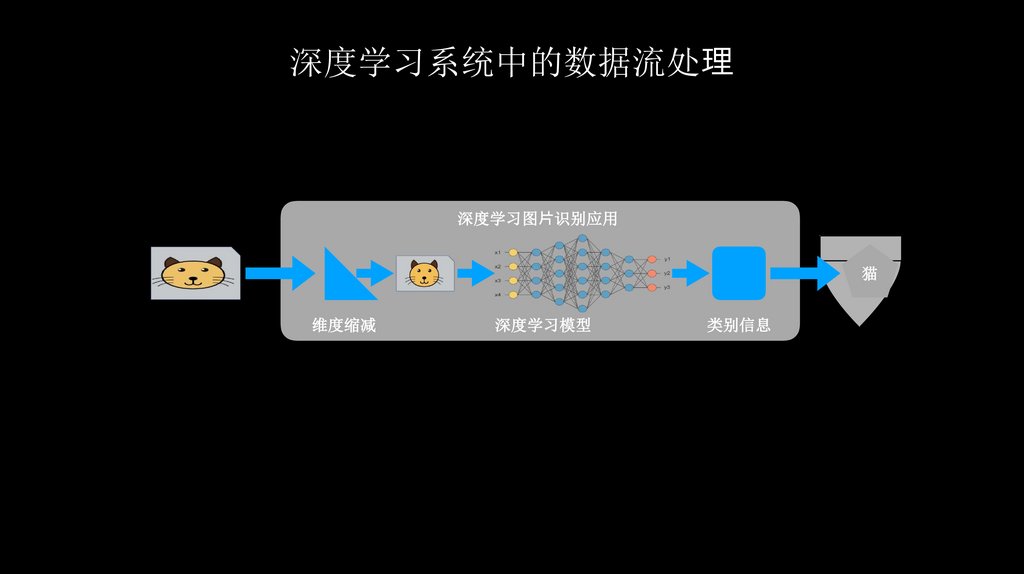

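Data-flow processing in deep learning systems: a deep learning image recognition application

Cat image → dimension reduction (scaling) → deep learning model → class label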

35.

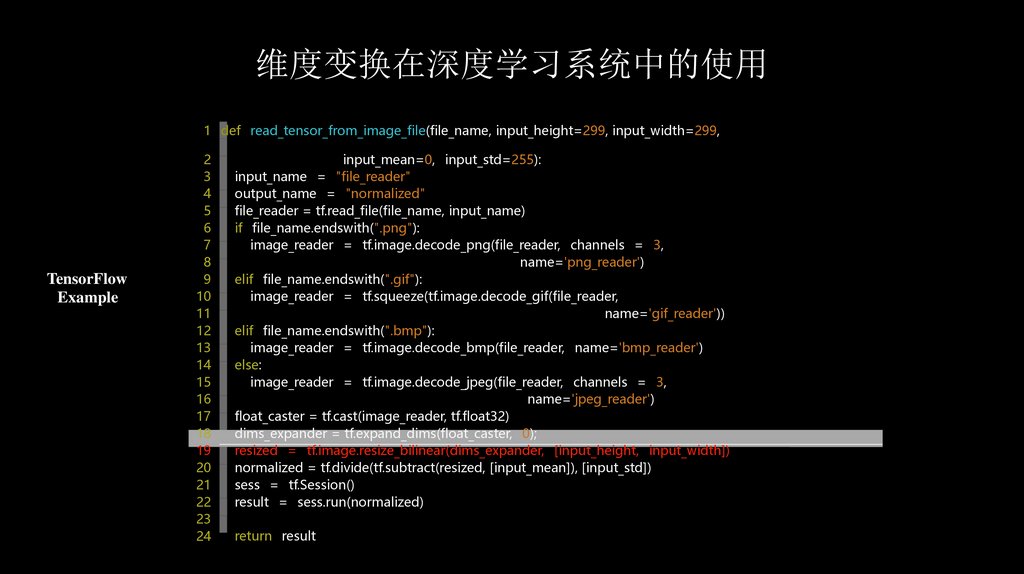

Use of scaling in deep learning systems: TensorFlow example

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.read_file, tf.Session)


def read_tensor_from_image_file(file_name, input_height=299, input_width=299,
                                input_mean=0, input_std=255):
    input_name = "file_reader"
    output_name = "normalized"
    file_reader = tf.read_file(file_name, input_name)
    # Pick a decoder based on the file extension.
    if file_name.endswith(".png"):
        image_reader = tf.image.decode_png(file_reader, channels=3,
                                           name='png_reader')
    elif file_name.endswith(".gif"):
        image_reader = tf.squeeze(tf.image.decode_gif(file_reader,
                                                      name='gif_reader'))
    elif file_name.endswith(".bmp"):
        image_reader = tf.image.decode_bmp(file_reader, name='bmp_reader')
    else:
        image_reader = tf.image.decode_jpeg(file_reader, channels=3,
                                            name='jpeg_reader')
    float_caster = tf.cast(image_reader, tf.float32)
    dims_expander = tf.expand_dims(float_caster, 0)
    # Whatever its original size, the image is rescaled to the model's fixed input size.
    resized = tf.image.resize_bilinear(dims_expander, [input_height, input_width])
    normalized = tf.divide(tf.subtract(resized, [input_mean]), [input_std])
    sess = tf.Session()
    result = sess.run(normalized)
    return result
```

36.

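Use of scaling in deep learning systems: DeepDetect example

```cpp
// Excerpt of a DeepDetect member function: _bw, _width, _height, _imgs and
// _imgs_size appear to be members of the enclosing class (not shown here).
int read_file(const std::string &fname)
{
  cv::Mat img = cv::imread(fname, _bw ? CV_LOAD_IMAGE_GRAYSCALE
                                      : CV_LOAD_IMAGE_COLOR);
  if (img.empty())
  {
    LOG(ERROR) << "empty image";
    return -1;
  }
  // Remember the original size before rescaling.
  _imgs_size.push_back(std::pair<int,int>(img.rows, img.cols));
  // Every image is resized to the model's fixed input size (bicubic interpolation).
  cv::Size size(_width, _height);
  cv::Mat rimg;
  cv::resize(img, rimg, size, 0, 0, CV_INTER_CUBIC);
  _imgs.push_back(rimg);
  return 0;
}
```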

37.

Common scaling algorithms

38.

Scaling and interpolation algorithms

Scaling is supposed to preserve the visual features of an image and thus should not change its semantic meaning.

39.

Scaling and interpolation algorithms

An interpolation algorithm estimates the pixel values at the new sample points.

40.

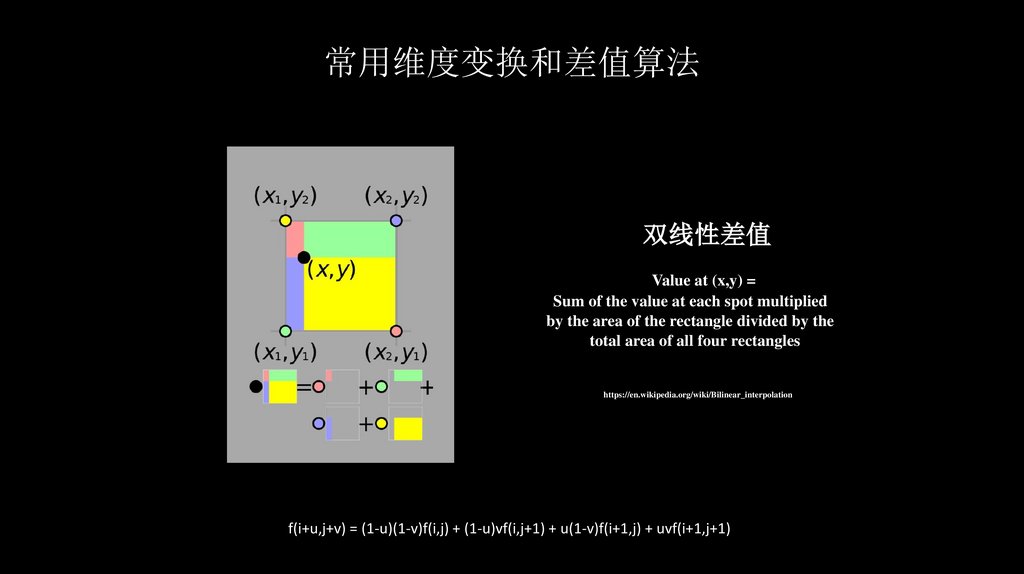

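Common scaling and interpolation algorithms: bilinear interpolation

The value at (x, y) is the sum of the value at each of the four surrounding points multiplied by the area of the rectangle diagonally opposite that point, divided by the total area of all four rectangles.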

https://en.wikipedia.org/wiki/Bilinear_interpolation

f(i+u,j+v) = (1-u)(1-v)f(i,j) + (1-u)vf(i,j+1) + u(1-v)f(i+1,j) + uvf(i+1,j+1)
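The formula translates directly into code. A minimal pure-Python sketch, assuming (as in the formula) that x = i + u indexes rows and y = j + v indexes columns; the function name `bilinear` and the clamping at the last row and column are our own choices:

```python
import math


def bilinear(f, x, y):
    """Bilinear interpolation of grid f (a list of rows) at fractional (x, y).

    Implements f(i+u, j+v) = (1-u)(1-v)f(i,j) + (1-u)v f(i,j+1)
                             + u(1-v) f(i+1,j) + uv f(i+1,j+1).
    Requires a grid of at least 2x2 with 0 <= x <= rows-1 and 0 <= y <= cols-1.
    """
    rows, cols = len(f), len(f[0])
    # Clamp the top-left corner so that i+1 and j+1 stay inside the grid.
    i = min(int(math.floor(x)), rows - 2)
    j = min(int(math.floor(y)), cols - 2)
    u, v = x - i, y - j
    return ((1 - u) * (1 - v) * f[i][j]
            + (1 - u) * v * f[i][j + 1]
            + u * (1 - v) * f[i + 1][j]
            + u * v * f[i + 1][j + 1])


grid = [[0, 10],
        [20, 30]]
print(bilinear(grid, 0.5, 0.5))  # 15.0 (average of all four corners)
print(bilinear(grid, 0.0, 0.5))  # 5.0 (halfway between f(0,0) and f(0,1))
```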

41.

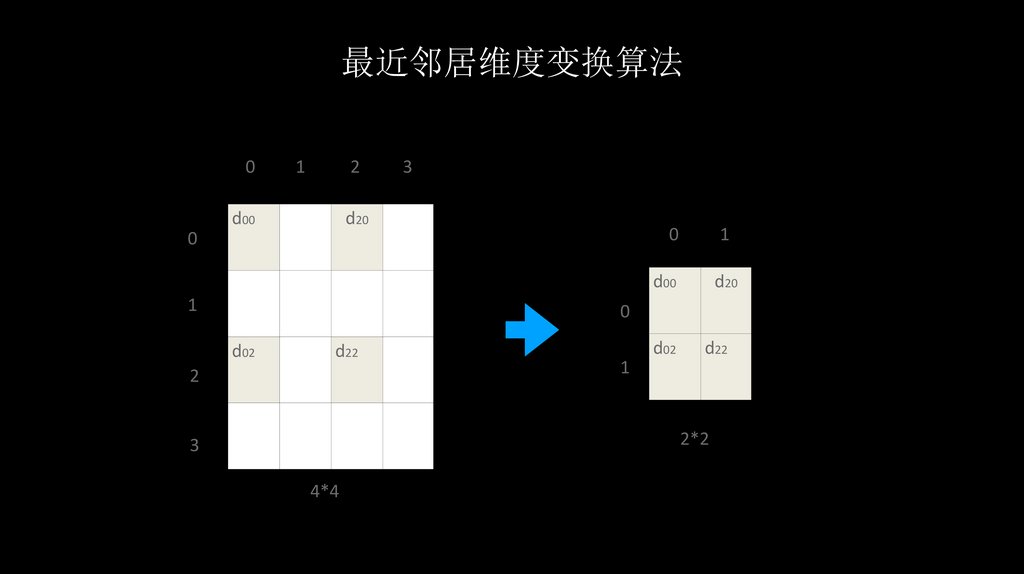

Nearest-neighbor scaling algorithm

(Figure: a 4*4 source image scaled down to 2*2; the output pixels take the values d00, d20, d02, d22 of the source.)
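The sampling in the figure can be reproduced in a few lines of Python. This is a sketch under an assumed index convention (source index = floor(output index x input size / output size)); real libraries differ in rounding and pixel-center handling, and the function name is hypothetical:

```python
def nearest_neighbor_resize(src, out_h, out_w):
    """Downscale a 2-D grid (list of rows) by nearest-neighbor sampling.

    Output pixel (r, c) copies source pixel
    (r * in_h // out_h, c * in_w // out_w).
    """
    in_h, in_w = len(src), len(src[0])
    return [[src[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


# 4*4 source whose value encodes its position: 10 * row + column.
src = [[10 * r + c for c in range(4)] for r in range(4)]
print(nearest_neighbor_resize(src, 2, 2))  # [[0, 2], [20, 22]]
```

Only 4 of the 16 source pixels (rows and columns 0 and 2) are ever read; the other 12 can be changed arbitrarily without affecting the output.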

42.

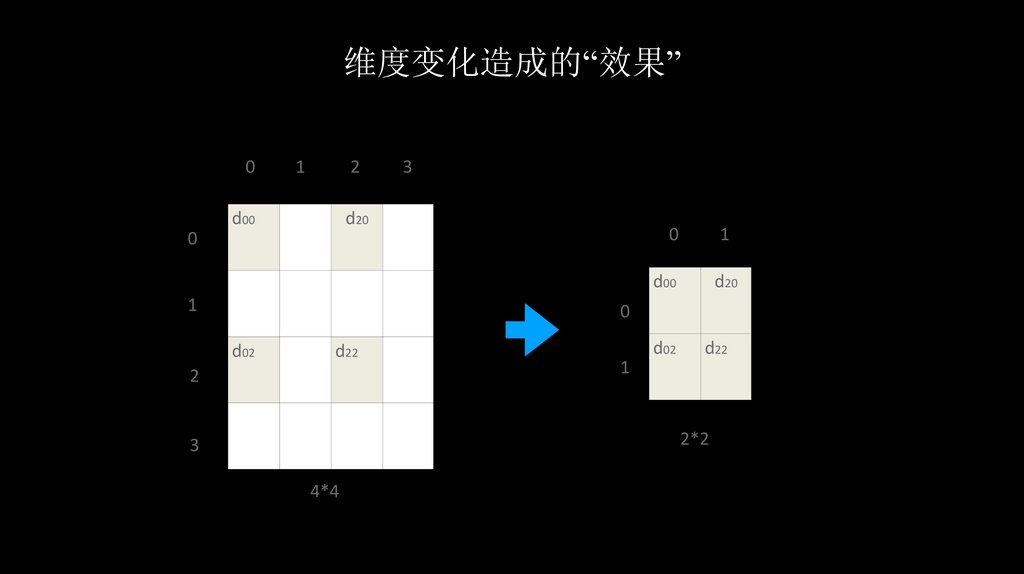

The “effect” of scaling

(Figure: the same 4*4 to 2*2 nearest-neighbor example; only source pixels d00, d20, d02, d22 reach the output.)

43.

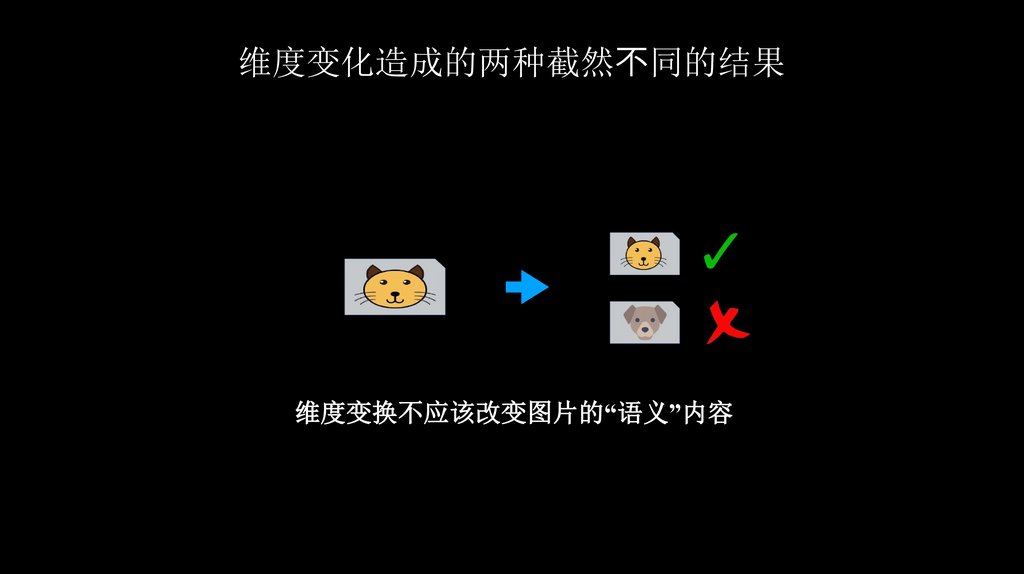

Two completely different results from scaling

Scaling should not change the “semantic” content of an image.
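To see how one image can yield two completely different results, the sketch below assumes the same nearest-neighbor convention as a plain downscaler (source index = output index x input size // output size) and overwrites only the sampled pixels of an all-white cover image with an all-black target. The helper names are hypothetical:

```python
def nearest_neighbor_resize(src, out_h, out_w):
    """Nearest-neighbor downscaling of a 2-D grid (list of rows)."""
    in_h, in_w = len(src), len(src[0])
    return [[src[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def embed_target(cover, target):
    """Overwrite only the pixels a nearest-neighbor downscaler samples, so the
    full-size image still looks like `cover` but downscales to `target`."""
    in_h, in_w = len(cover), len(cover[0])
    out_h, out_w = len(target), len(target[0])
    attack = [row[:] for row in cover]
    for r in range(out_h):
        for c in range(out_w):
            attack[r * in_h // out_h][c * in_w // out_w] = target[r][c]
    return attack


cover = [[255] * 8 for _ in range(8)]  # an all-white 8*8 image
target = [[0] * 2 for _ in range(2)]   # an all-black 2*2 image
attack = embed_target(cover, target)

changed = sum(a != b for ra, rb in zip(attack, cover) for a, b in zip(ra, rb))
print(changed)                                # 4 (of 64 pixels)
print(nearest_neighbor_resize(attack, 2, 2))  # [[0, 0], [0, 0]]
```

At full size the attack image is 94% white, yet after downscaling it is entirely black.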

44.

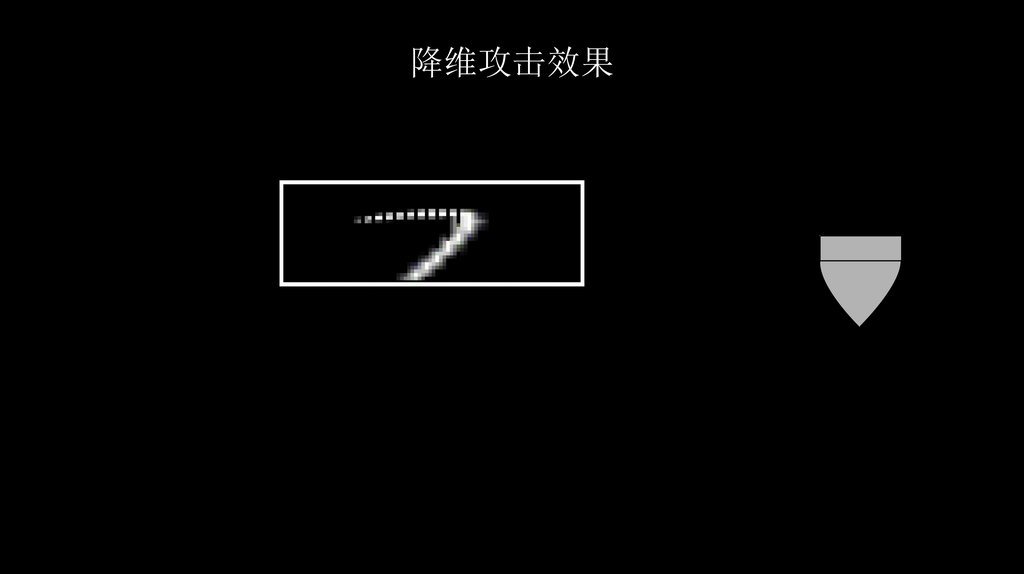

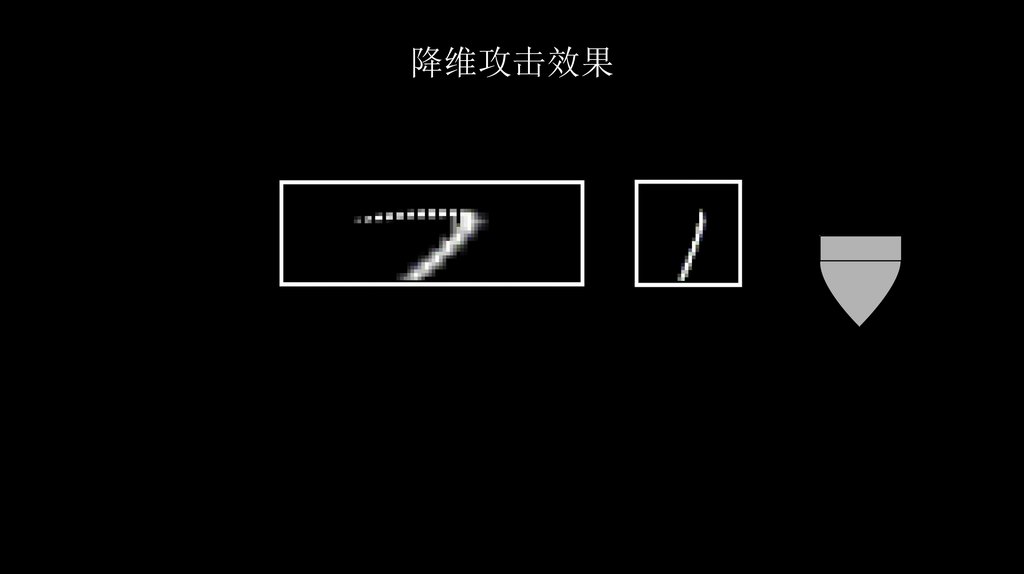

Effect of the downscaling attack

45.

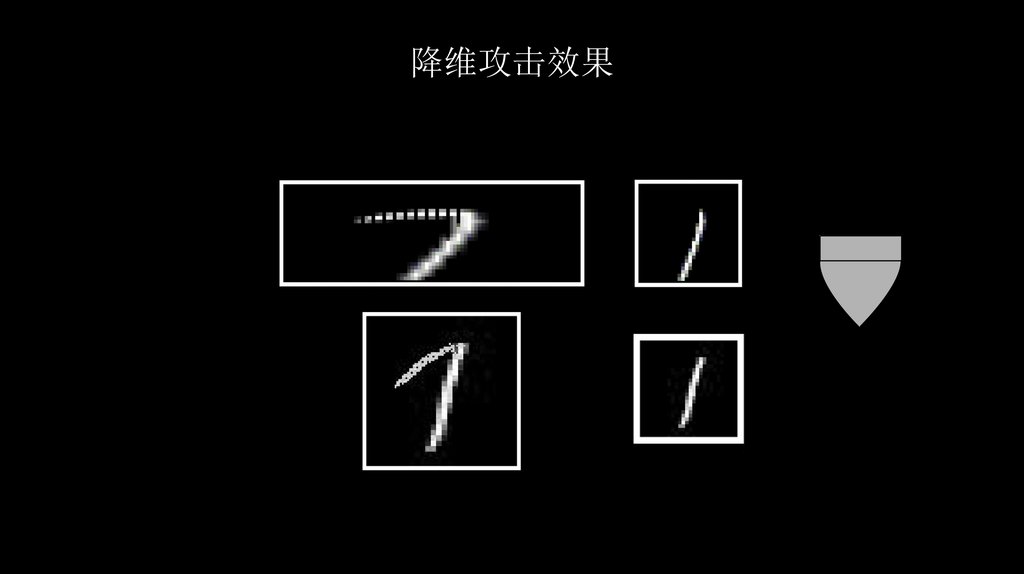

Effect of the downscaling attack

46.

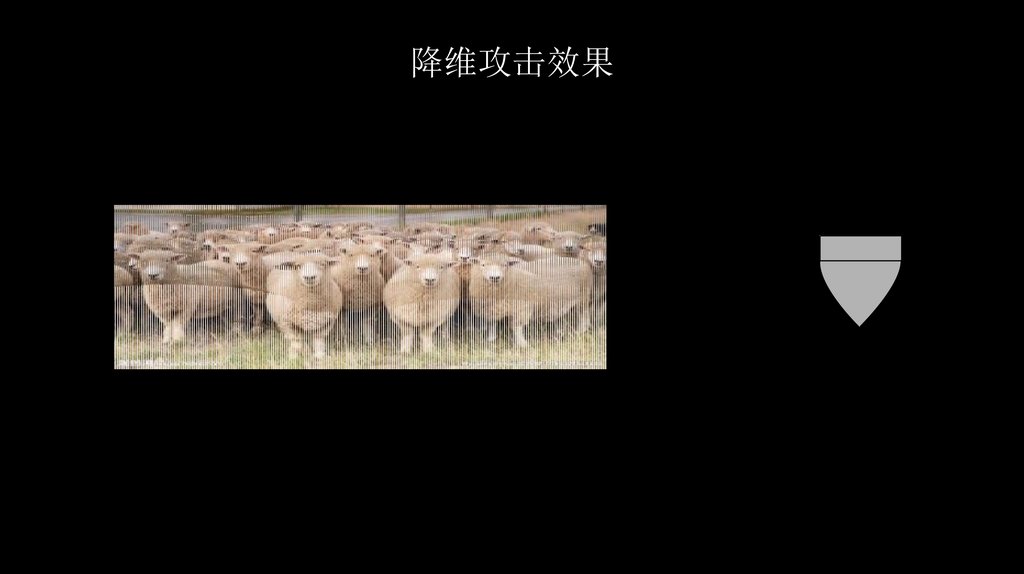

Effect of the downscaling attack

47.

Effect of the downscaling attack

48.

Effect of the downscaling attack

49.

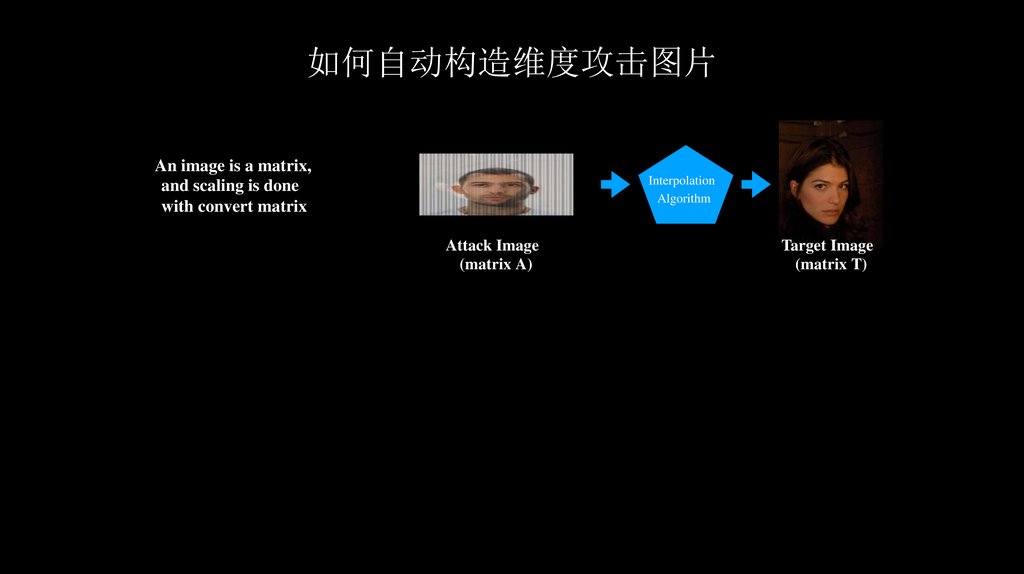

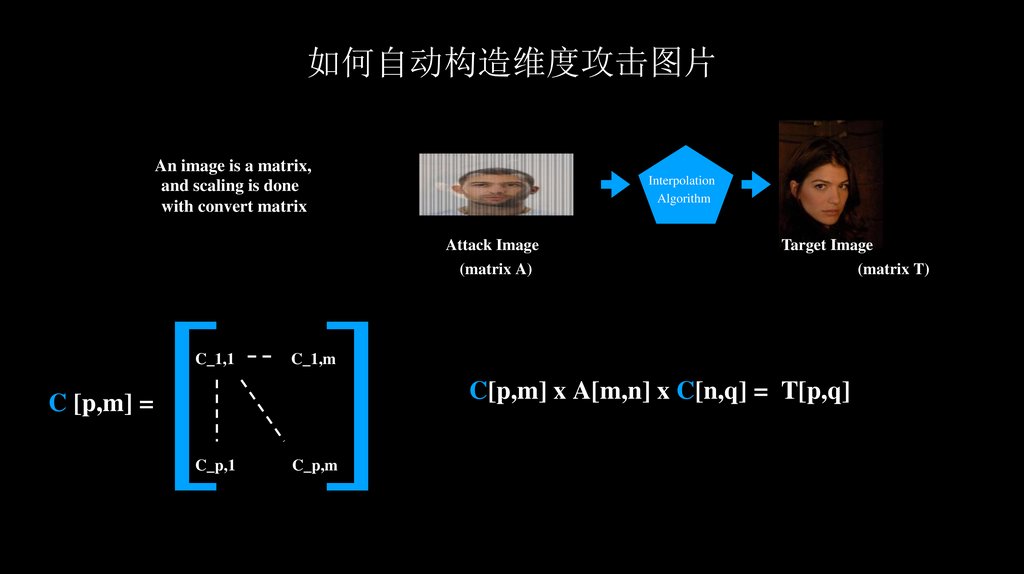

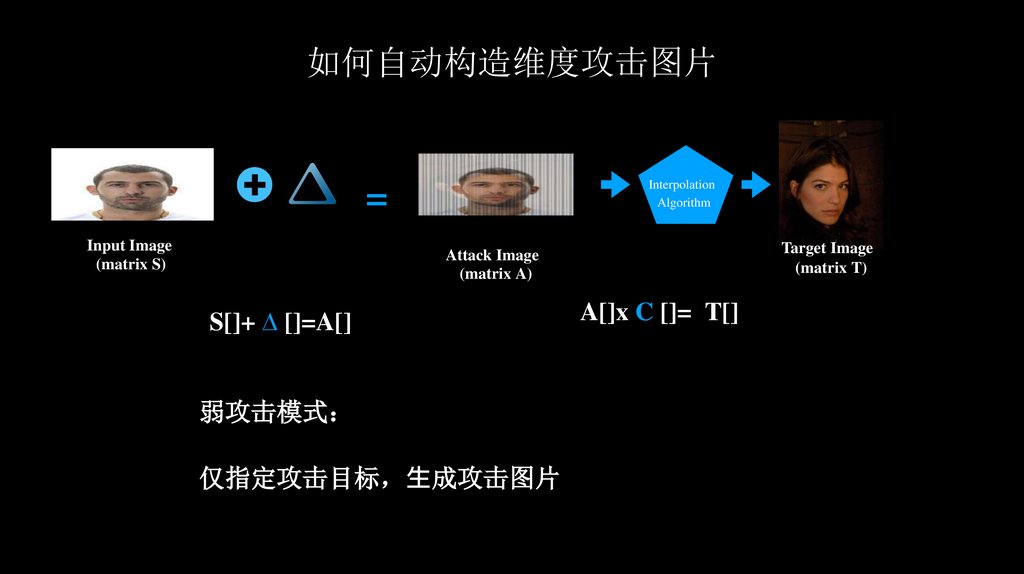

How to automatically construct a scaling-attack image

An image is a matrix, and scaling is done by multiplying it with conversion matrices.

(Figure: Attack Image (matrix A) → interpolation algorithm → Target Image (matrix T).)

50.

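$$C_{p\times m}\, A_{m\times n}\, C_{n\times q} = T_{p\times q}, \qquad
C_{p\times m} = \begin{pmatrix} C_{1,1} & \cdots & C_{1,m} \\ \vdots & \ddots & \vdots \\ C_{p,1} & \cdots & C_{p,m} \end{pmatrix}$$

Here the left matrix resamples the m rows of A down to p rows, and the right matrix resamples the n columns down to q.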
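Because interpolation is linear in the pixel values and, for common scalers, applied separately along rows and columns, the two matrices in C[p,m] x A[m,n] x C[n,q] = T[p,q] can be built explicitly. The NumPy sketch below does this for one image channel under an assumed bilinear convention (output index i samples source coordinate (i + 0.5) x in/out - 0.5, clamped at the borders); real libraries may differ in such details, and `linear_weights` is a hypothetical helper:

```python
import numpy as np


def linear_weights(in_size, out_size):
    """Hypothetical helper: matrix W (out_size x in_size) such that W @ x
    resamples a 1-D signal of length in_size to length out_size with
    linear interpolation (assumed pixel-center convention, see lead-in)."""
    W = np.zeros((out_size, in_size))
    scale = in_size / out_size
    for i in range(out_size):
        x = min(max((i + 0.5) * scale - 0.5, 0.0), in_size - 1)
        lo = int(np.floor(x))
        hi = min(lo + 1, in_size - 1)
        frac = x - lo
        W[i, lo] += 1.0 - frac   # weight of the left neighbor
        W[i, hi] += frac         # weight of the right neighbor
    return W


m, n, p, q = 8, 6, 4, 3
C_pm = linear_weights(m, p)       # C[p, m]: resamples the m rows to p
C_nq = linear_weights(n, q).T     # C[n, q]: resamples the n columns to q

rng = np.random.default_rng(0)
A = rng.uniform(0, 255, size=(m, n))  # one channel of an attack image
T = C_pm @ A @ C_nq                   # T[p, q] = C[p, m] x A[m, n] x C[n, q]
print(T.shape)  # (4, 3)

# Each output pixel is a weighted average, so each set of weights sums to 1.
print(np.allclose(C_pm.sum(axis=1), 1), np.allclose(C_nq.sum(axis=0), 1))  # True True
```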

51.

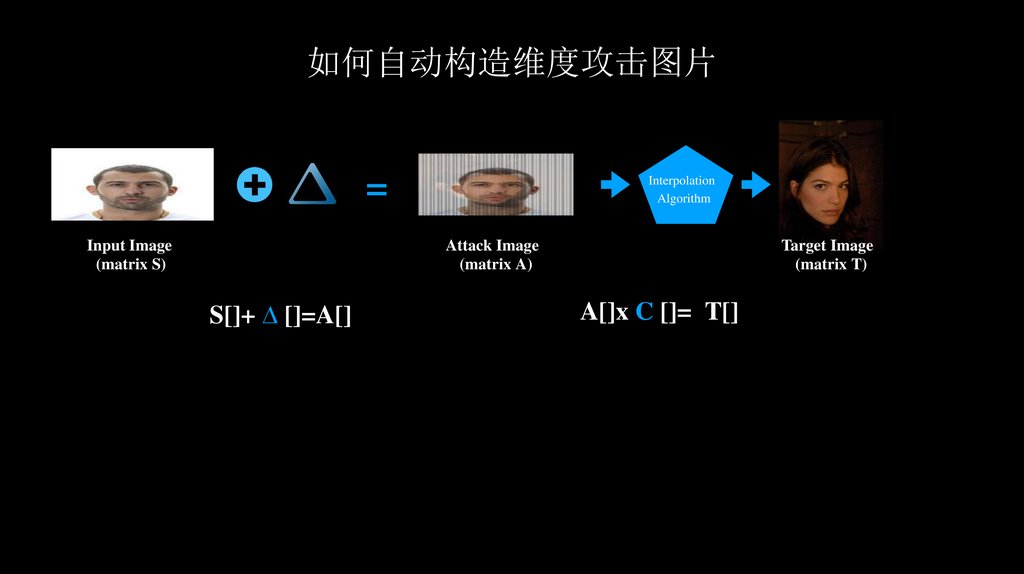

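How to automatically construct a scaling-attack image

(Figure: the Input Image (matrix S) plus a perturbation Δ gives the Attack Image (matrix A); the interpolation algorithm turns A into the Target Image (matrix T).)

$$S + \Delta = A, \qquad C_{p\times m}\, A\, C_{n\times q} = T$$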

52.

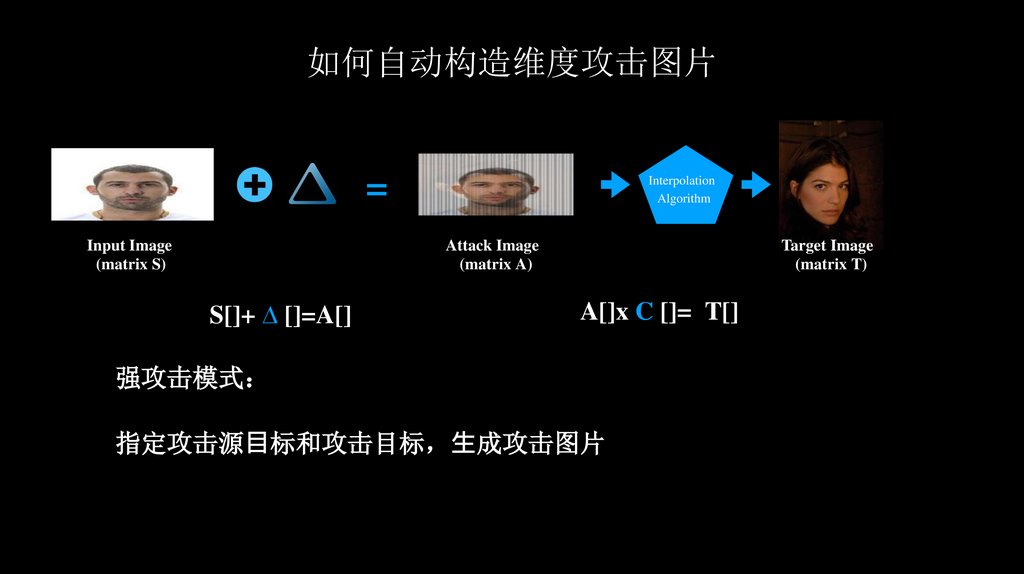

Strong attack mode: specify both the attack source image and the attack target image, then generate the attack image.

53.

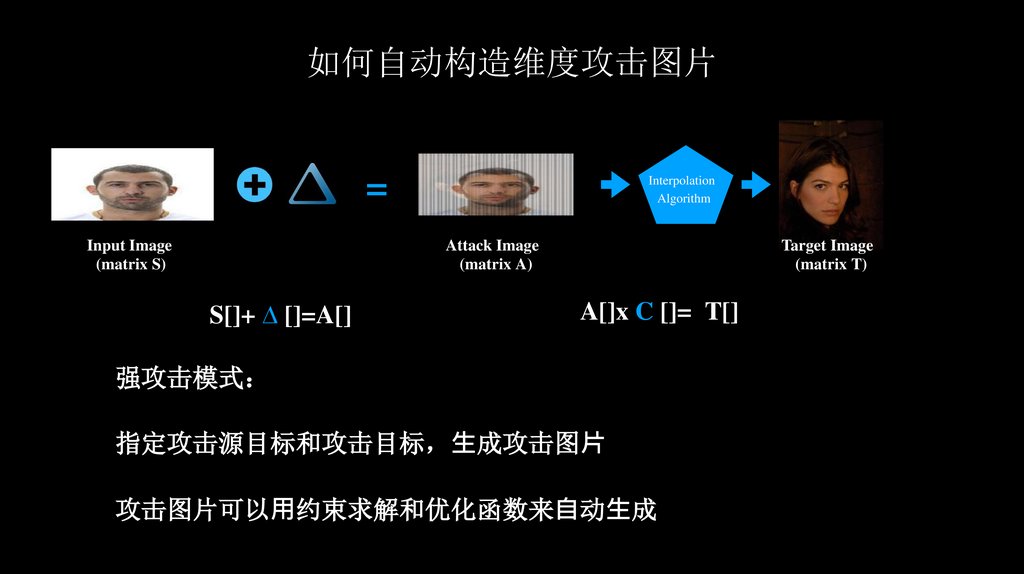

Strong attack mode: the attack image can be generated automatically through constraint solving and objective-function optimization.
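The slides do not give the solver itself. As one possible sketch of the strong mode, assuming a single-channel image, a separable bilinear scaler with our own pixel-center convention, and no pixel-range constraint, the smallest perturbation Δ (in Frobenius norm) with C[p,m] (S + Δ) C[n,q] = T has a closed form via pseudo-inverses. This is a swapped-in least-norm technique, not necessarily the authors' method; all names are hypothetical:

```python
import numpy as np


def linear_weights(in_size, out_size):
    """Hypothetical helper: W @ x linearly resamples a length-in_size signal
    to length out_size (assumed pixel-center convention)."""
    W = np.zeros((out_size, in_size))
    scale = in_size / out_size
    for i in range(out_size):
        x = min(max((i + 0.5) * scale - 0.5, 0.0), in_size - 1)
        lo = int(np.floor(x))
        hi = min(lo + 1, in_size - 1)
        W[i, lo] += 1.0 - (x - lo)
        W[i, hi] += x - lo
    return W


def strong_attack(S, T, C_pm, C_nq):
    """Return A = S + Delta with the smallest Frobenius-norm Delta such that
    C_pm @ A @ C_nq == T. The [0, 255] pixel range is NOT enforced.

    Delta = pinv(C_pm) @ (T - C_pm @ S @ C_nq) @ pinv(C_nq) is exact when
    C_pm has full row rank and C_nq has full column rank.
    """
    residual = T - C_pm @ S @ C_nq
    delta = np.linalg.pinv(C_pm) @ residual @ np.linalg.pinv(C_nq)
    return S + delta


m = n = 32
p = q = 8
C_pm = linear_weights(m, p)
C_nq = linear_weights(n, q).T

rng = np.random.default_rng(1)
S = rng.uniform(0, 255, size=(m, n))  # source image S (one channel)
T = rng.uniform(0, 255, size=(p, q))  # target image T (one channel)

A = strong_attack(S, T, C_pm, C_nq)
print(np.allclose(C_pm @ A @ C_nq, T))      # True: A downscales to T
print(np.count_nonzero(~np.isclose(A, S)))  # number of modified pixels
```

Under this convention a 4x bilinear downscale puts nonzero weight on only 2 of every 4 rows and columns, so the perturbation is confined to a quarter of the pixels. Enforcing 0 ≤ S + Δ ≤ 255 turns the problem into a constrained optimization that generally needs an iterative solver instead of this closed form.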

54.

Weak attack mode: specify only the attack target image, then generate the attack image.

55.

300 words omitted here… the mathematical equations for constraint solving and objective-function optimization…
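One plausible way to write the omitted equations, reusing S, Δ, A, T and the scaling matrices C[p,m], C[n,q] from the previous slides, and assuming an 8-bit image and a small tolerance ε of our own choosing, is the following pair of constrained problems. This is our sketch, not the authors' exact formulation:

$$
\begin{aligned}
\text{Strong mode:}\quad & \min_{\Delta}\ \|\Delta\|_F^2
  \quad \text{s.t.}\quad \big\| C_{p\times m}\,(S+\Delta)\,C_{n\times q} - T \big\|_\infty \le \epsilon,
  \quad 0 \le S+\Delta \le 255, \\
\text{Weak mode:}\quad & \text{find } A
  \quad \text{s.t.}\quad \big\| C_{p\times m}\,A\,C_{n\times q} - T \big\|_\infty \le \epsilon,
  \quad 0 \le A \le 255.
\end{aligned}
$$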

56.

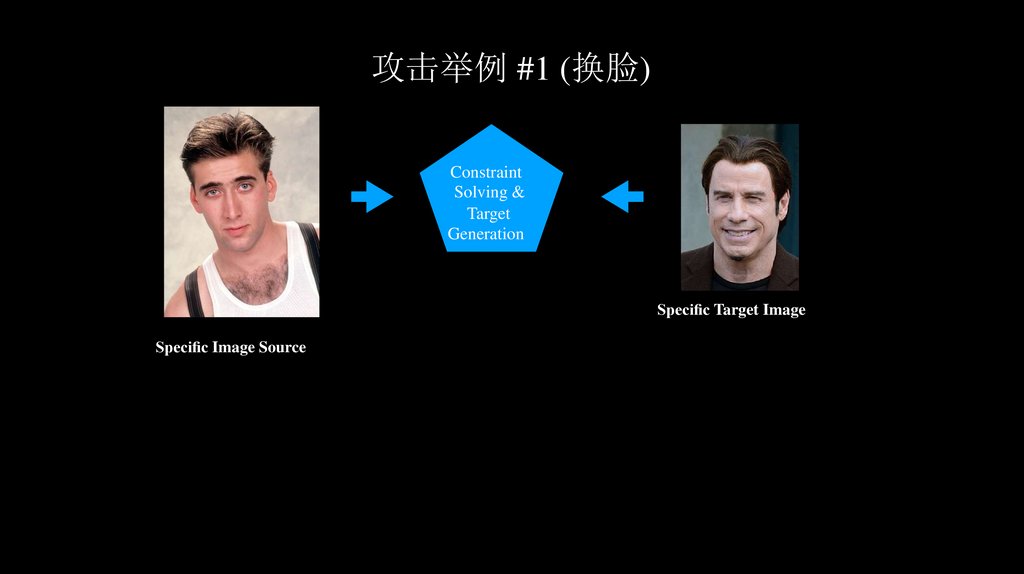

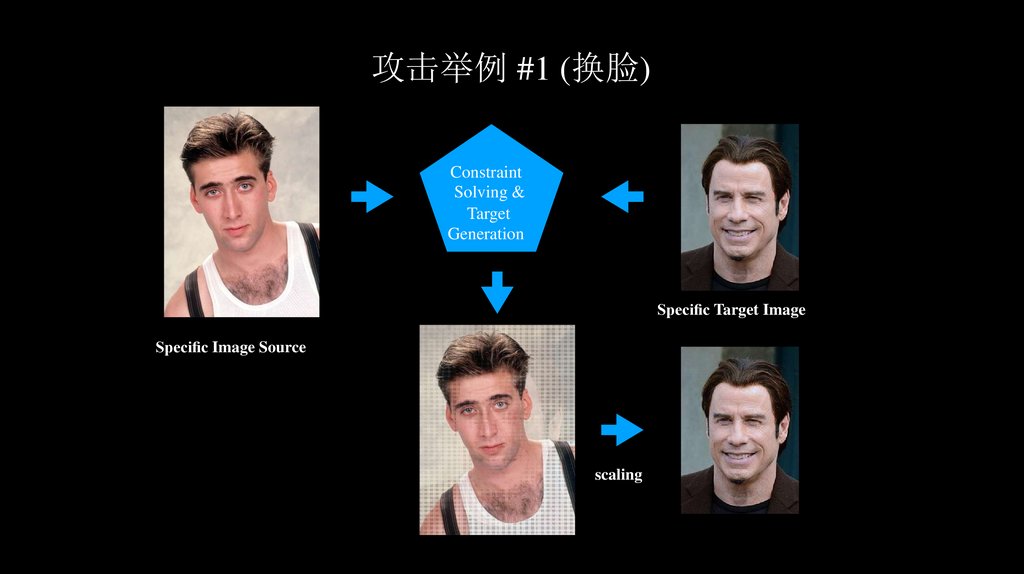

Attack example #1 (face swap)

(Figure: a specific image source and a specific target image go through constraint solving & target generation.)

57.


58.

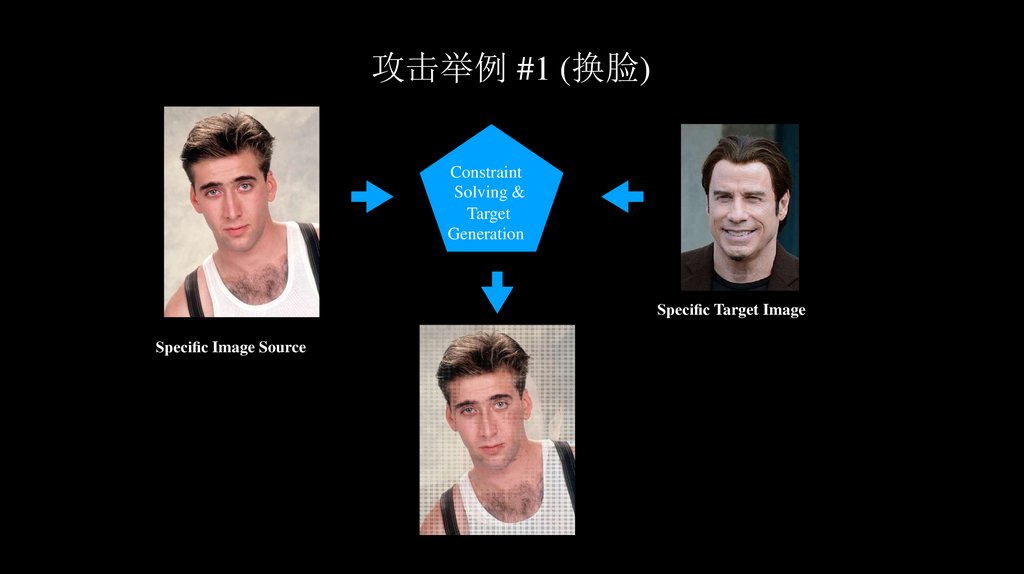

(Figure: the same pipeline, now followed by a scaling step.)

59.

Attack example #1 (face swap): strong attack mode results

60.

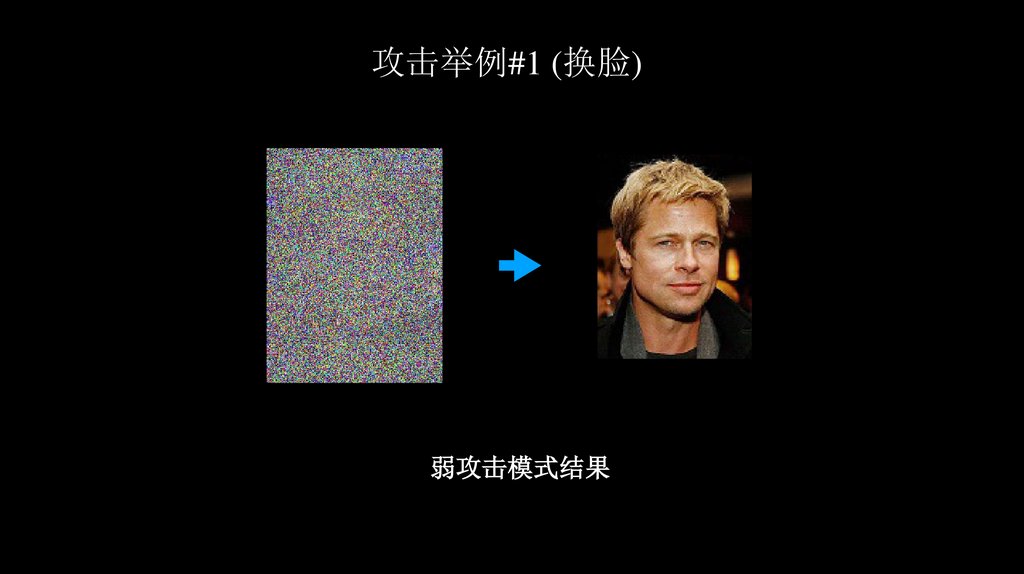

Attack example #1 (face swap): weak attack mode results

61.

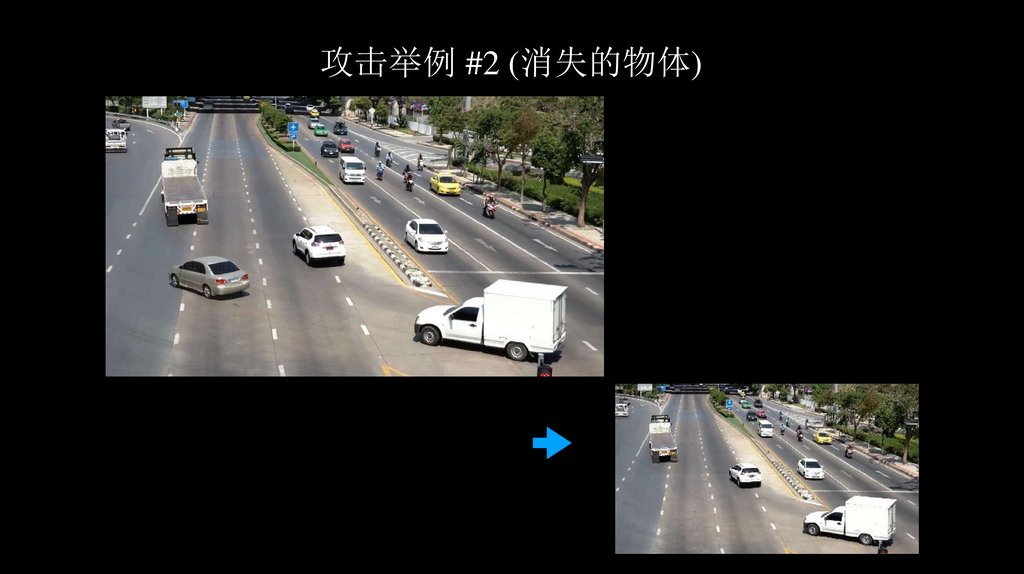

Attack example #2 (disappearing objects)

62.

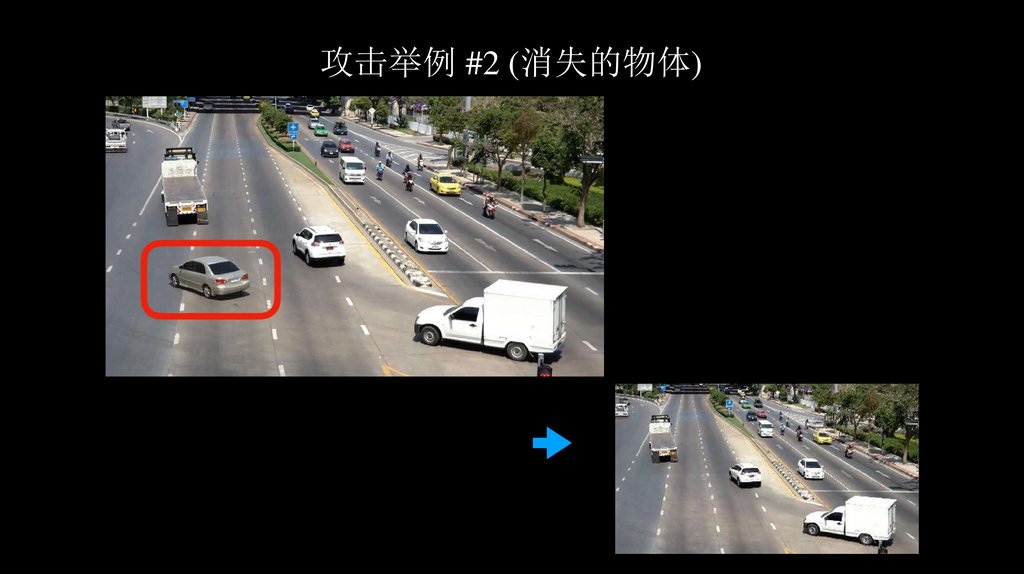

Attack example #2 (disappearing objects)

63.

Attack example #2 (disappearing objects)

64.

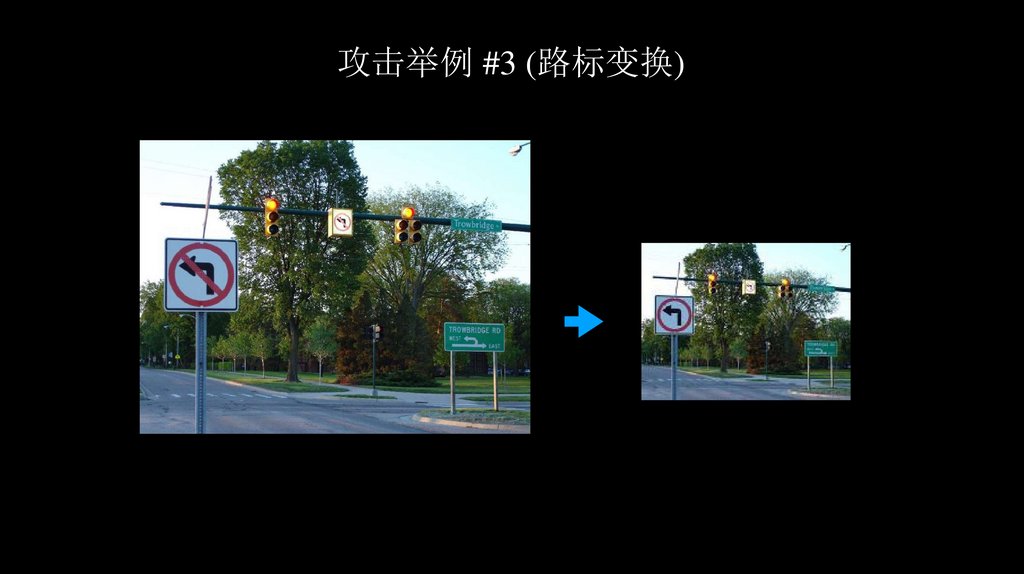

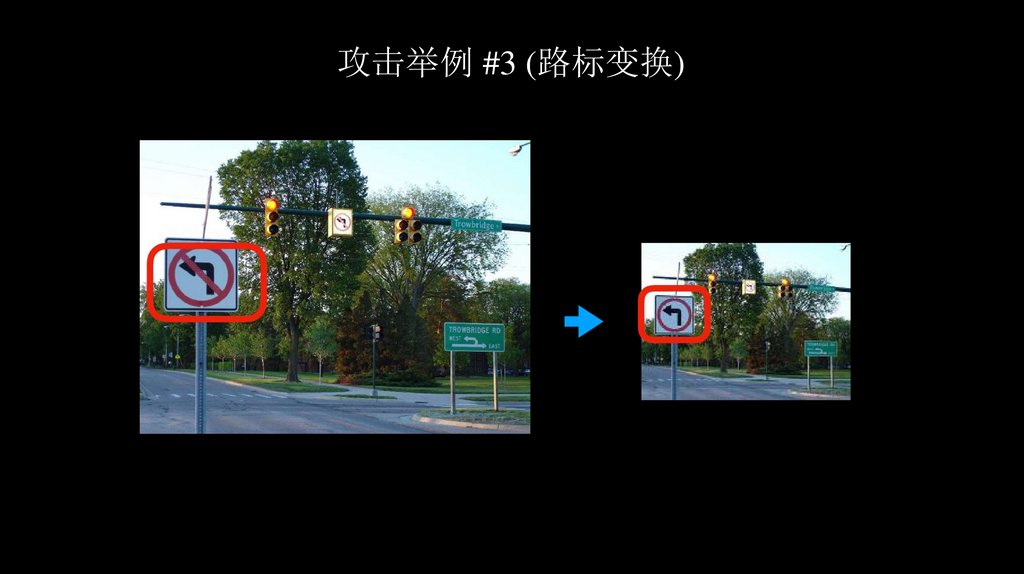

Attack example #3 (road sign transformation)

65.

Attack example #3 (road sign transformation)

66.

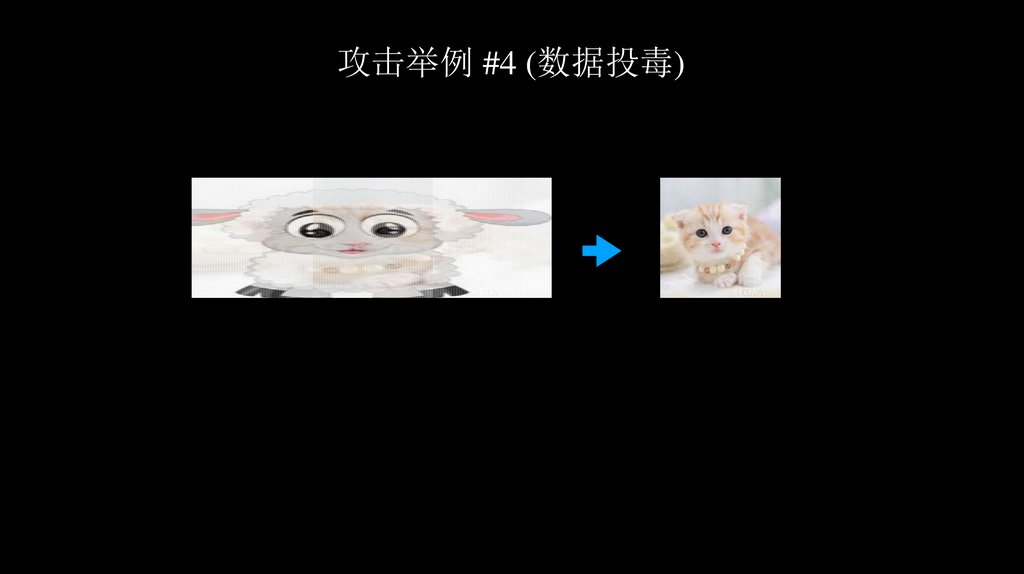

Attack example #4 (data poisoning)

67.

Attack example #4 (data poisoning)

68.

Attack example #4 (data poisoning)

69.

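How to defend against data-flow dimension attacks

• Train and run the model only on data that already matches the model's input size

  • Not applicable to open, Internet-facing applications

• Accept data only from specific sensors

  • The network and the sensors remain attack surfaces

• Detect the “semantic” features of an image before and after scaling

  • But detection can only observe “syntactic”-level features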

70.

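Detecting “malicious” image scaling

• Observable features

  • Image features: color histogram, color scattering index

• Basic idea

  • Normal image scaling aims to preserve the basic appearance of the image content

  • For a normal image, the color distribution before and after scaling is similar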
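The basic idea (compare the color distribution before and after resizing) can be sketched with NumPy. This is not the authors' detector: it assumes a nearest-neighbor resizer, uses per-channel color histograms with a total-variation distance, and picks an arbitrary threshold of 0.5; all function names are hypothetical, and the color scattering index is not implemented here.

```python
import numpy as np


def color_histogram(img, bins=16):
    """Normalized per-channel color histogram of an H x W x 3 uint8 image."""
    hists = [np.histogram(img[..., ch], bins=bins, range=(0, 256))[0]
             for ch in range(img.shape[-1])]
    h = np.concatenate(hists).astype(float)
    return h / h.sum()


def histogram_distance(a, b, bins=16):
    """Total-variation distance between two color histograms (0 = identical, 1 = disjoint)."""
    return 0.5 * np.abs(color_histogram(a, bins) - color_histogram(b, bins)).sum()


def nearest_neighbor_resize(img, out_h, out_w):
    """Nearest-neighbor downscaling: source index = output index * in_size // out_size."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows][:, cols]


def looks_malicious(img, out_h, out_w, threshold=0.5):
    """Flag a resize whose output color distribution departs sharply from the input's.
    The 0.5 threshold is an arbitrary illustrative value, not a tuned one."""
    resized = nearest_neighbor_resize(img, out_h, out_w)
    return histogram_distance(img, resized) > threshold


# Benign input: a horizontal gray-level gradient, 64 x 64 x 3.
benign = np.tile(np.linspace(0, 255, 64).astype(np.uint8)[None, :, None], (64, 1, 3))

# Attack input: an all-white image whose 16 x 16 sampled pixels are black,
# so it looks white at full size but becomes black after downscaling.
attack = np.full((64, 64, 3), 255, dtype=np.uint8)
idx = np.arange(16) * 64 // 16
attack[np.ix_(idx, idx)] = 0

print(looks_malicious(benign, 16, 16))  # False
print(looks_malicious(attack, 16, 16))  # True
```

A practical detector would need a threshold calibrated on benign data, and it still faces the limitation listed under the defenses: it only observes low-level (“syntactic”) statistics.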

71.

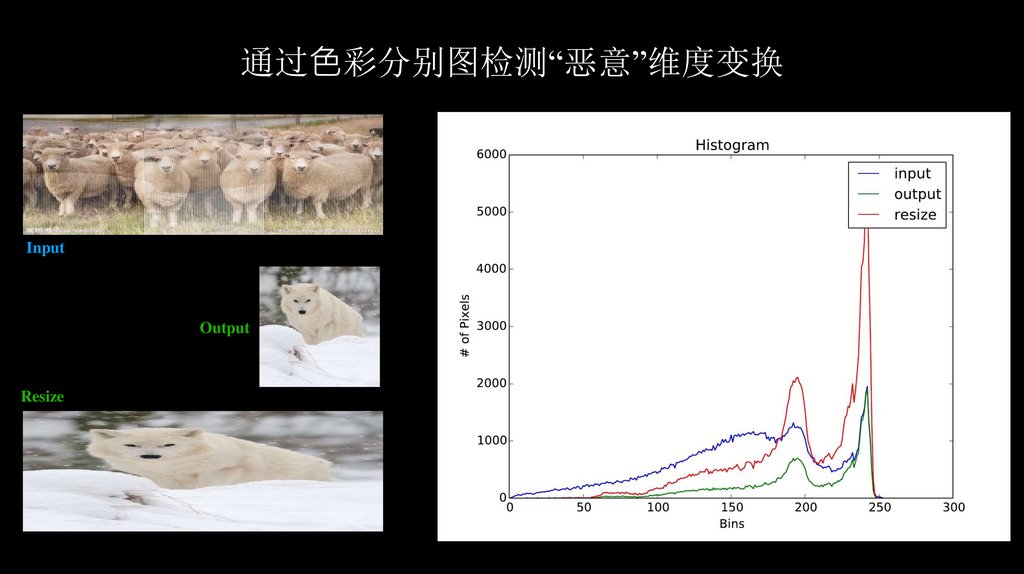

Detecting “malicious” scaling through color distribution maps

(Figure: Input → Resize → Output.)

72.

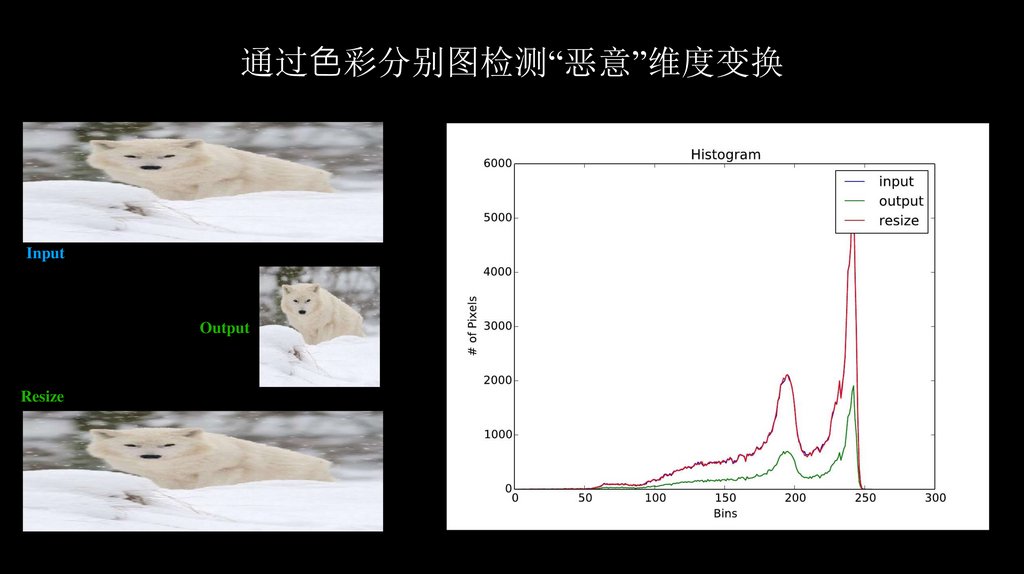


73.

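Summary

AI deep learning systems rest on an important implicit assumption: the model's input dimensions are fixed.

Scaling functions are widely used in deep learning applications.

Existing scaling algorithms do not adequately account for malicious input.

Maliciously crafted inputs can cause classification evasion, data poisoning, and other attack outcomes.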
