Next generation assessment and test development

Tarasova Ksenia

«Technologically enhanced items» (TEIs), also known as technology-enabled items, innovative items, technology-enhanced innovative items, computer-based items, or innovative computerized test items:

…contain content or functionality that is not possible in text-based or multiple-choice tasks…

Wendt & Harmes, 1999

…are computerized tasks that utilize the capabilities and functions of the computer and that are difficult to implement in traditional paper form…

Parshall & Harmes, 2007

TEI: what are they?

…interactive, computer-based tasks, which makes them different from traditional form-based tasks…

Parshall, Davey, and Pashley, 2000

TEIs (technologically enhanced item types) are computer-based test items that involve specialized interaction to produce an answer and/or associated response data.

Stimulus vs. Response Interaction

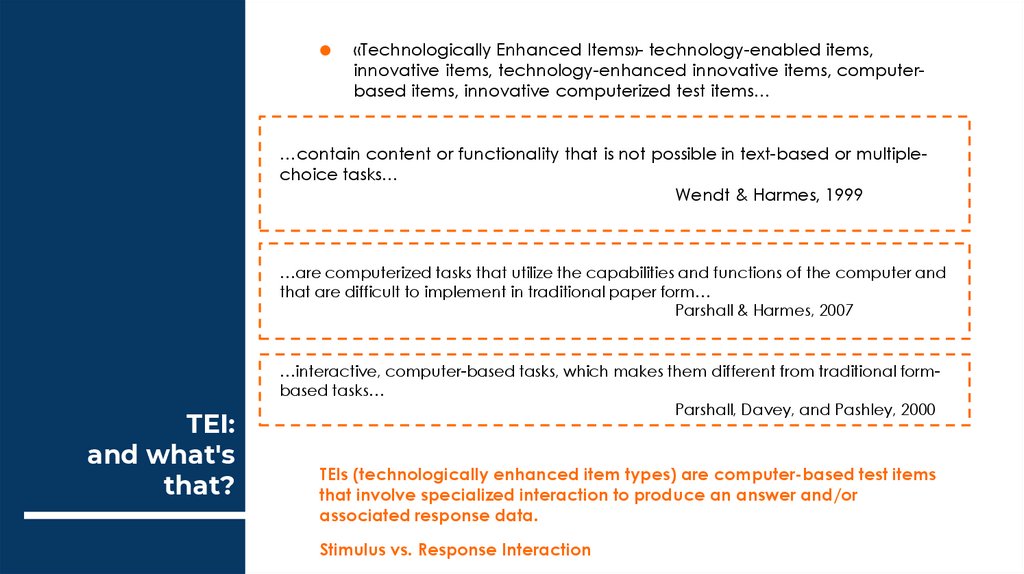

Stimulus: Multimedia

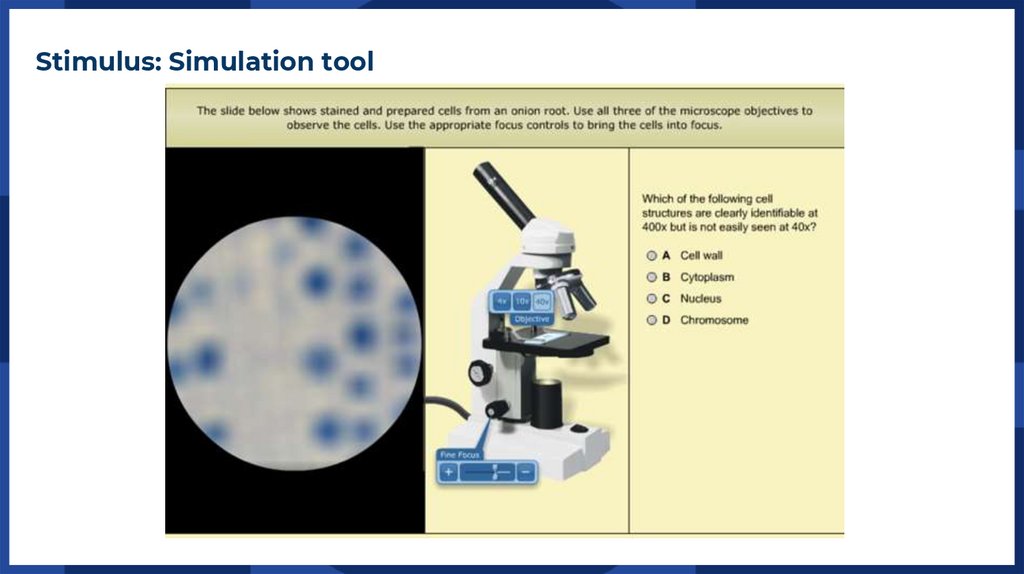

Stimulus: Simulation tool

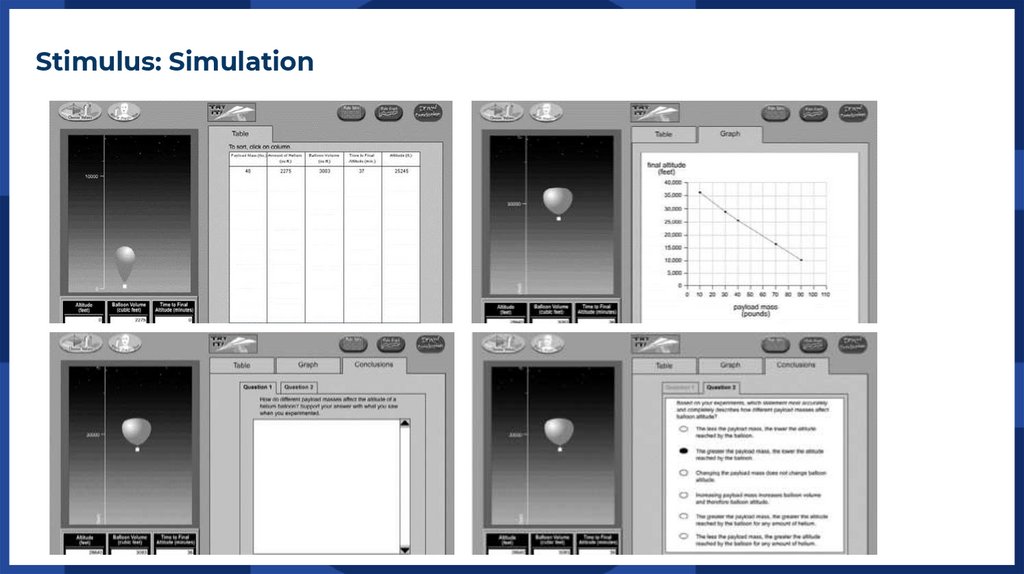

Stimulus: Simulation

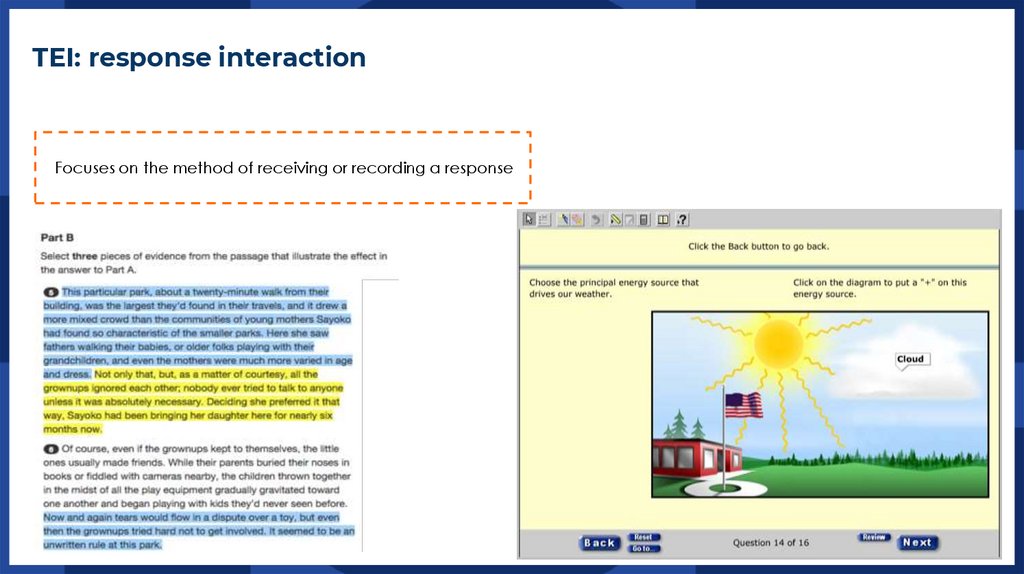

TEI: response interaction

Response interaction focuses on the method of receiving or recording a response.

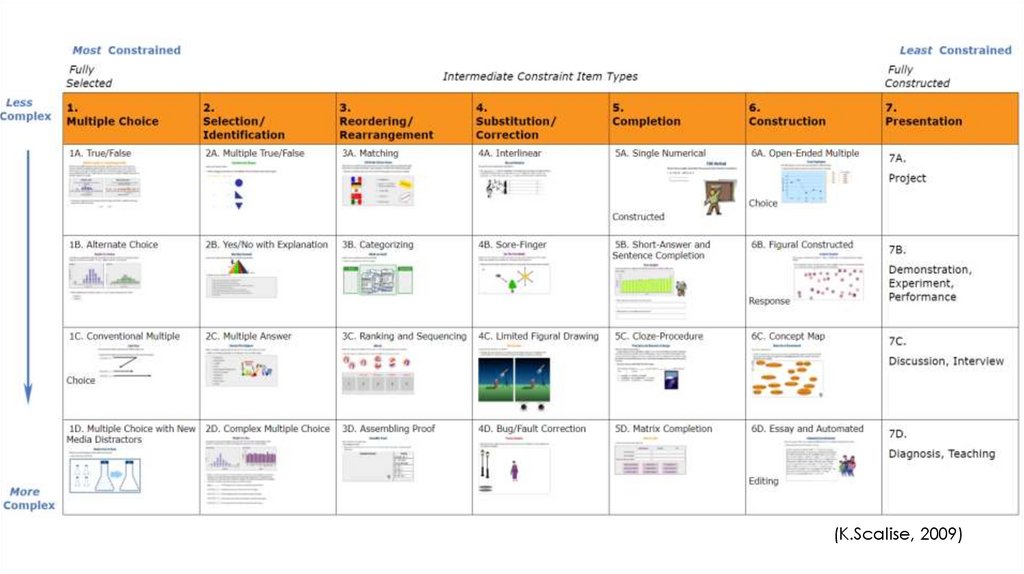

(K. Scalise, 2009)

TEI: next generation assessment

«…evolution from technology-enhanced assessment to assessment-enhanced technology as passing through four stages» (DiCerbo & Behrens, 2012)

It is a four-level process:

• Level 1: technology is used to create new item types, mechanics, and improved feedback for assessments that are still close neighbors of pre-digital «paper-and-pencil tests»;

• Level 2: new assessments are built around a central, context-providing problem to be solved; these might be simulations that are designed and administered as discrete assessment activities (simulation tasks);

• Level 3: natural digital environments are created with embedded assessment arguments (e.g., stealth assessment in a game; Shute & Ventura, 2013);

• Level 4: an ecosystem accumulates information from various natural digital environments like those in Level 3.

Virtual Performance-Based Assessments (VPBAs) are environments in which test takers interact with systems, sometimes including other persons or agents, in order to provide evidence about their knowledge, skills, or other attributes. Digital data capture makes it possible to acquire rich details about test-taker actions and the evolving situations in which they occur.

Types of VPBA:

• Scenario-Based Assessments

• Simulation-Based Assessments

• Game-Based Assessments

• Collaborative Assessments

Scenario-Based Assessments

A scenario-based assessment introduces a narrative context for a student’s experience to add a layer of meaning to motivations, thoughts, and actions. The simplest version introduces an ongoing context to a sequence of tasks, which are frequently in a traditional format (e.g., multiple-choice or short answer). More complex scenario-based assessments can add elements of simulation and gaming.

Simulation-Based Assessments

In simulation-based assessments, the goal is to create a particular situation that resembles the real-world context (e.g., conducting a science experiment, administering medical care, or flying a jet fighter) to provide opportunities to assess individuals’ capabilities in such a situation (Davey et al., 2015).

Simulation-based assessments are particularly valuable because they allow people to demonstrate their knowledge, skills, and abilities in situations that would be too expensive, too time-consuming, or too dangerous to carry out in real-world contexts (Snir et al., 1993).

Game-Based Assessments

Game-based assessments incorporate principles of game design in addition to assessment design to measure individuals’ knowledge, skills, and abilities. These types of assessment can increase motivation by making the tasks more engaging.

In designing a game-based assessment, it is important to find the balance between design considerations for game elements and for assessment elements.

Given that game designers build complex, interactive experiences, game-based assessments are particularly suitable for complex constructs such as systems thinking and cross-cultural competence.

Collaborative Assessments

Collaborative assessments are designed to create opportunities for individuals to demonstrate their proficiency in working or communicating effectively with others. For this purpose, an environment is created that supports communication and cooperation with one or more persons.

Virtual environments are well-suited for this, as they can present complex problems and scenarios for individuals to work through in concert with others. Collaboration in such environments:

• can be asynchronous, such as threaded online discussions in a learning management system or in massive open online courses (MOOCs) (Rosé & Ferschke, 2016; Wise & Chiu, 2011);

• can be synchronous, with people communicating in real time via chat or audio and video channels (Andrews-Todd & Forsyth, 2020; Andrews-Todd et al., 2019; Bower et al., 2017; van Joolingen et al., 2005);

• can take place between a human participant and one or more computer agents (Biswas et al., 2010; Graesser et al., 2012; OECD, 2013);

• can involve two human participants and artificial agents (e.g., Liu et al., 2015).

In virtual environments, all the actions and discourse among team members can be captured, not just the answers they provide. The resulting data can illuminate the processes and strategies team members use during performance, with respect to both the substance of the problem and the nature of the interactions.

In today's digital world, the work and mastery of the test developer lie in solving some of the outlined problems even before the psychometric analysis stage.

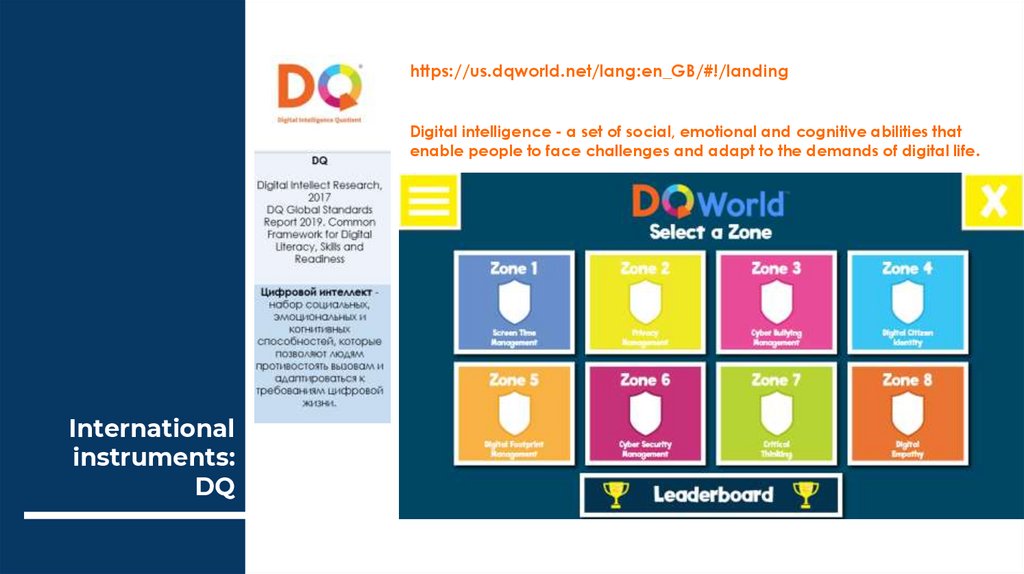

International instruments: DQ

https://us.dqworld.net/lang:en_GB/#!/landing

Digital intelligence (DQ) is a set of social, emotional, and cognitive abilities that enable people to face challenges and adapt to the demands of digital life.

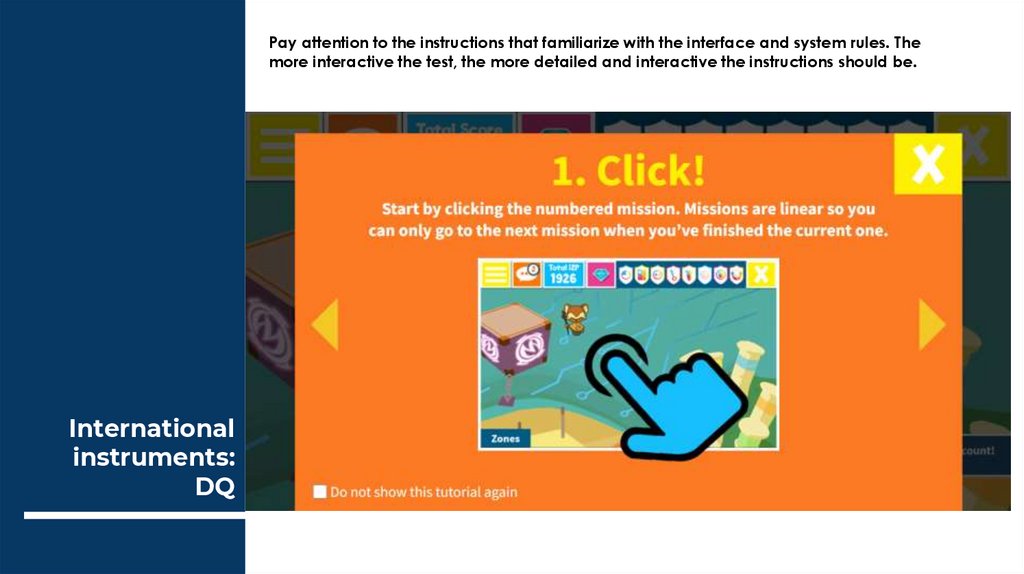

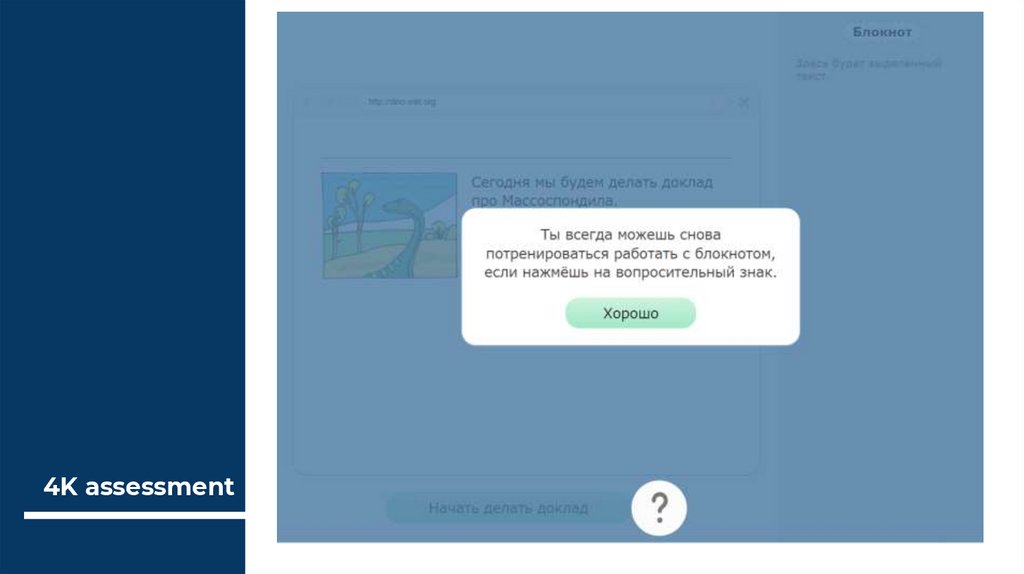

Pay attention to the instructions that familiarize test takers with the interface and the system rules. The more interactive the test, the more detailed and interactive the instructions should be.

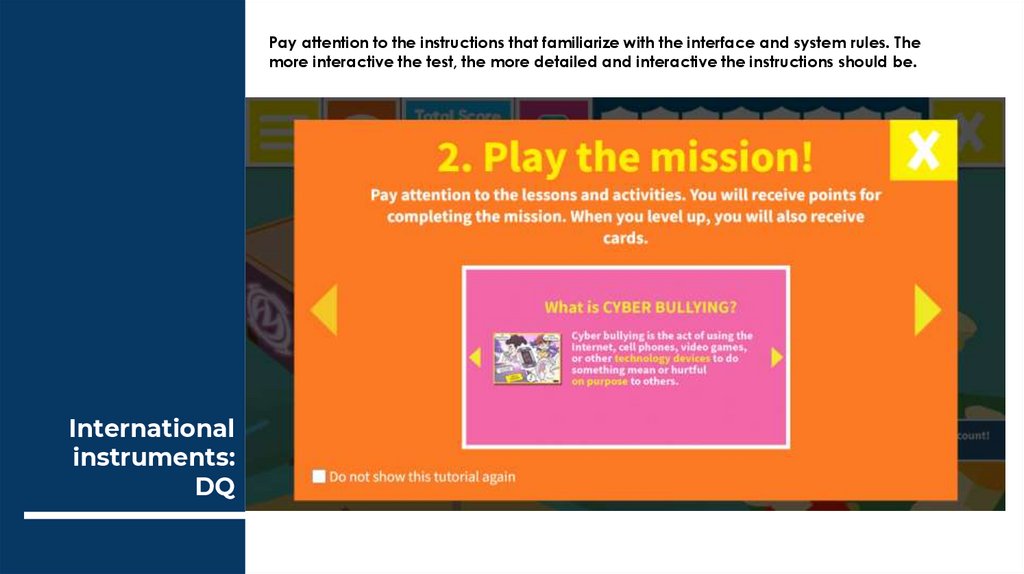

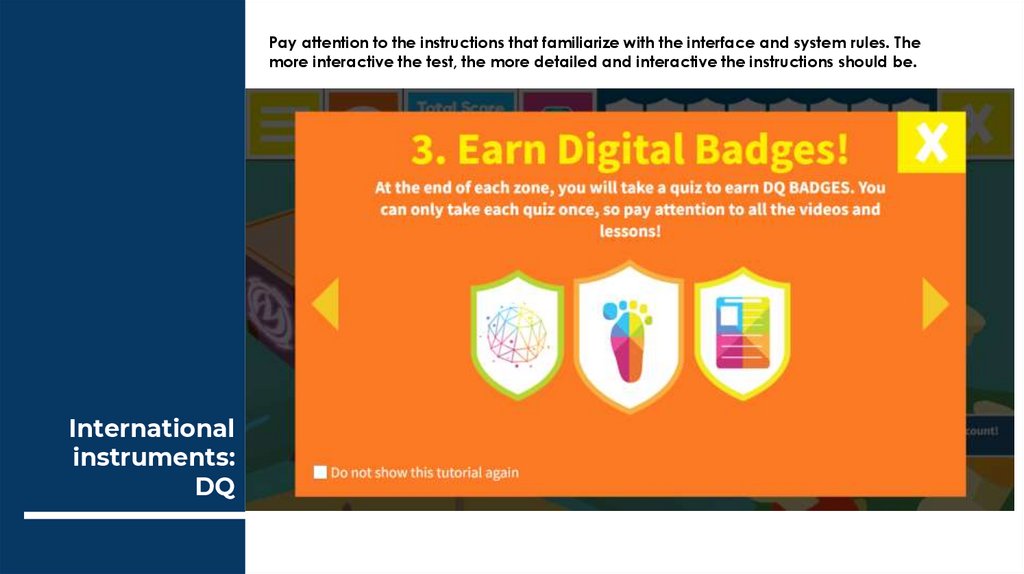

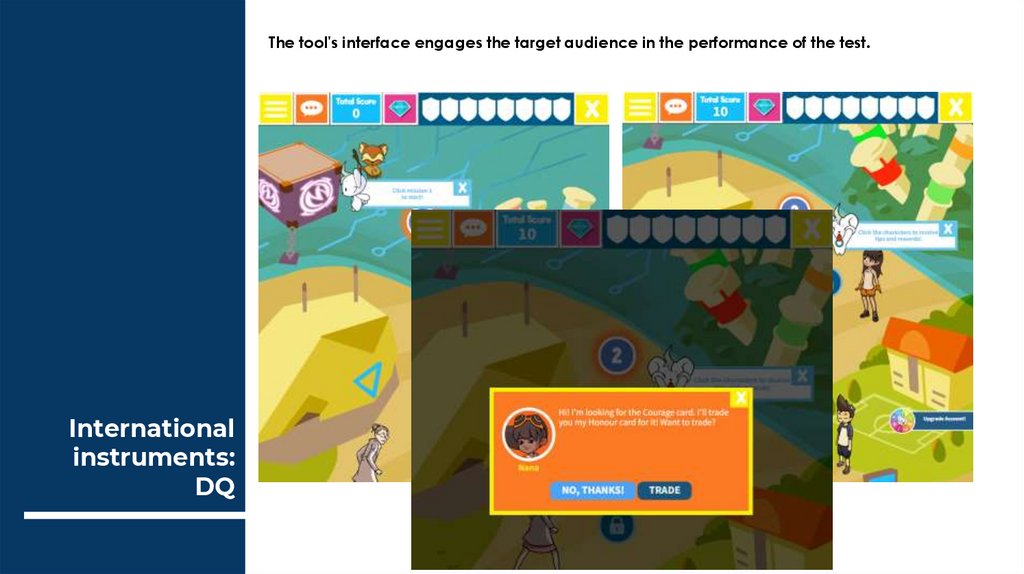

The tool's interface engages the target audience in completing the test.

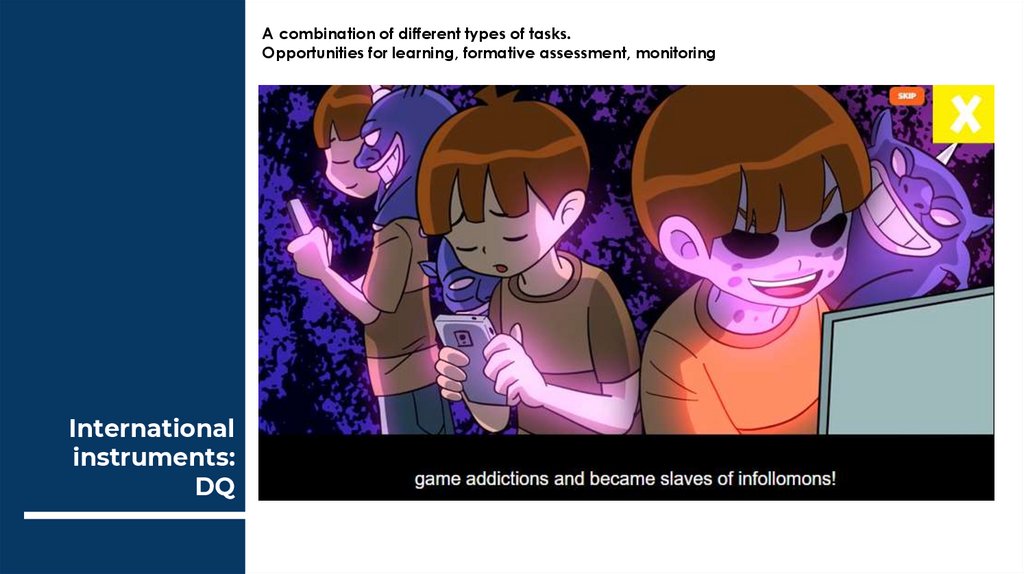

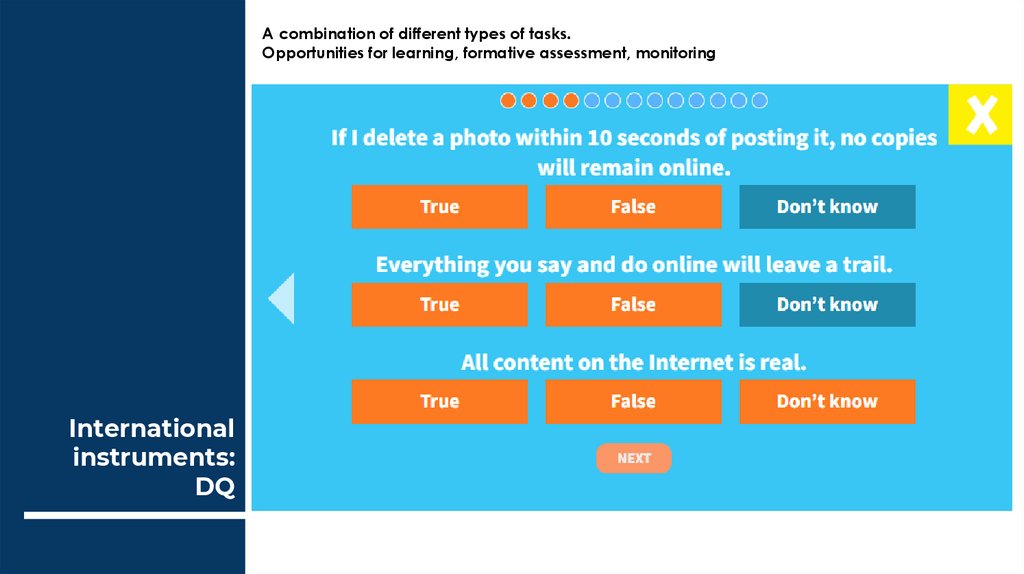

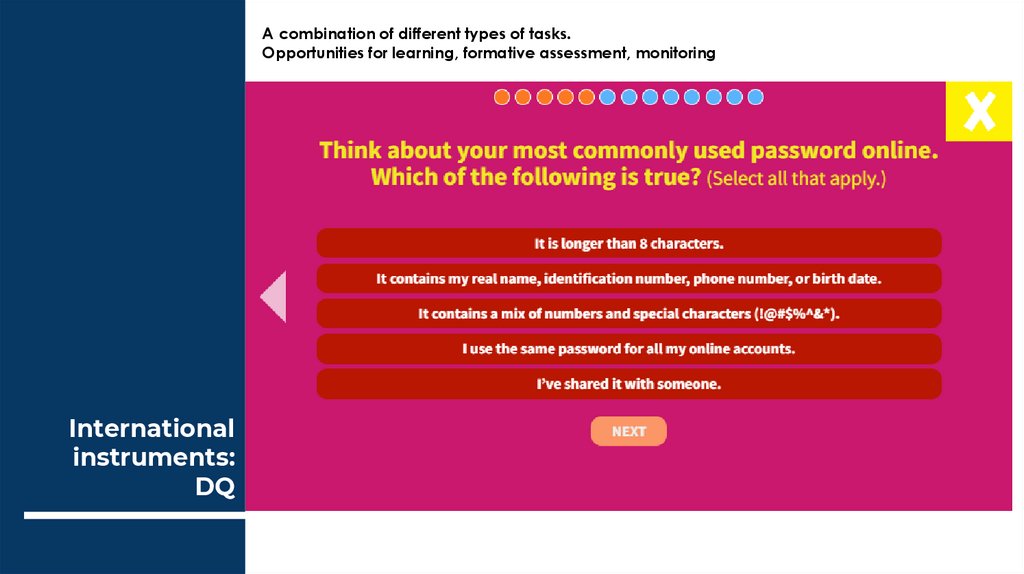

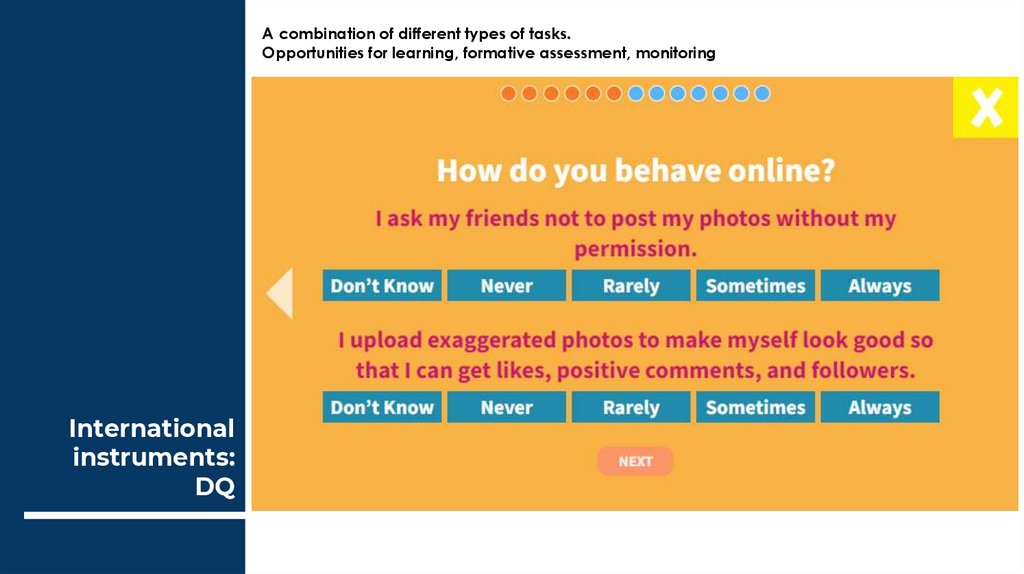

A combination of different types of tasks. Opportunities for learning, formative assessment, and monitoring.

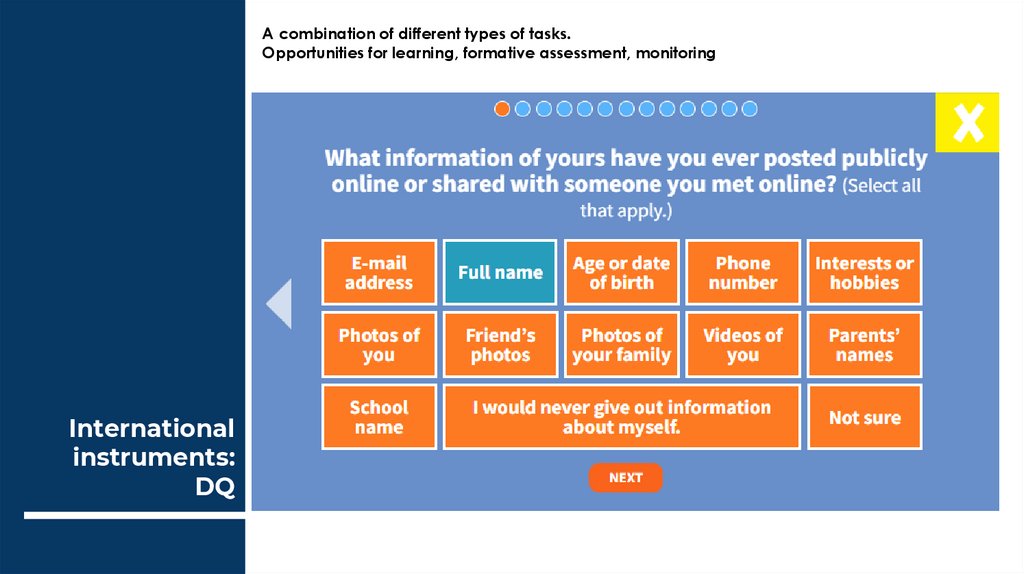

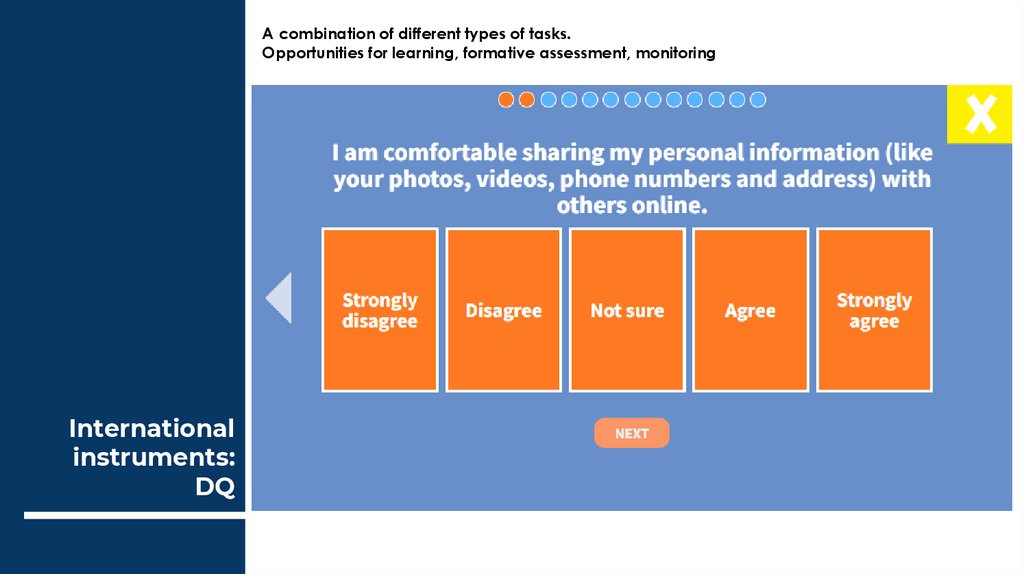

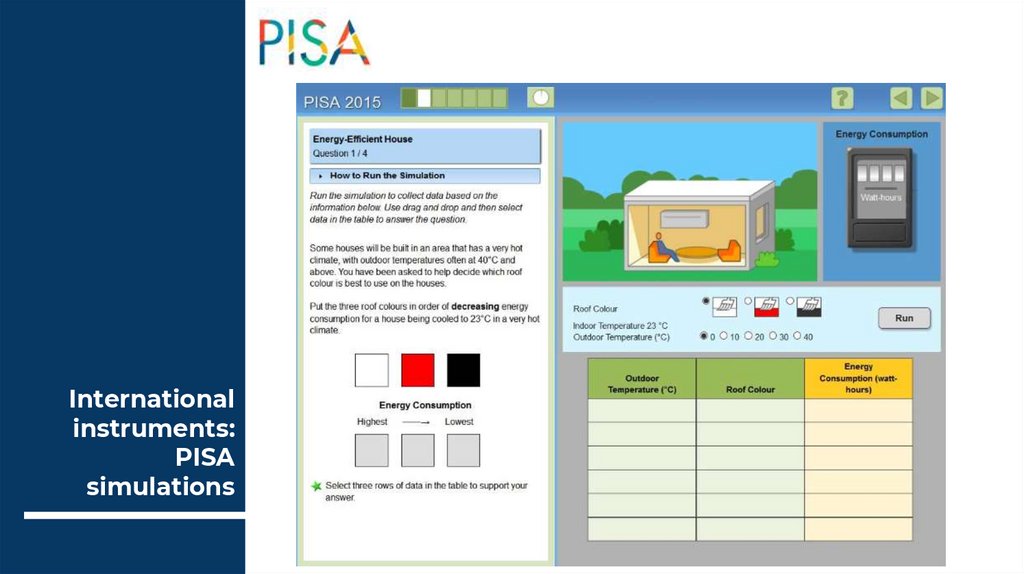

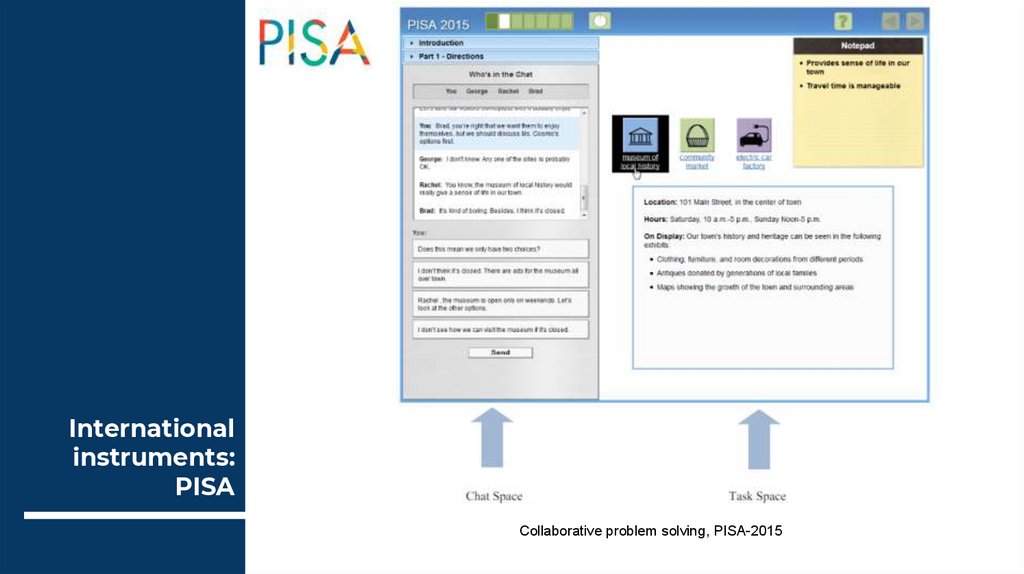

International instruments: PISA simulations

International instruments: PISA

Collaborative problem solving, PISA 2015

Important rules

Main rules:

• Scenarios should include real-life situations that are engaging for the target audience and can be solved in a variety of ways;

• Problem solving does not require special subject knowledge (unless you want to measure it);

• The chosen context of the scenarios does not affect the fairness of the assessment: it is equally familiar to different groups of learners (e.g., groups by gender or place of residence), which is tested in advance in qualitative research (cognitive labs);

• At each step of the task within the scenario, one or more specific indicators are recorded;

• To assess all indicators, all respondents must be brought to the same plot points.
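The last two rules can be illustrated in code. The following is a minimal sketch that assumes a hypothetical step structure (`Step`, `Session`, `uncovered`) and made-up indicator names. It is not taken from any of the instruments above. Each step maps response options to indicator scores, and every response is also written to a raw action log. A helper then lists framework indicators that no step records.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One step of a scenario task (hypothetical structure for illustration)."""
    step_id: str
    # Score awarded to each indicator for each response option at this step.
    scoring: dict[str, dict[str, int]]

    @property
    def indicators(self) -> set[str]:
        # Indicators this step provides evidence for (union over all options).
        return {ind for scores in self.scoring.values() for ind in scores}


@dataclass
class Session:
    """Collects indicator scores and a raw action log for one test taker."""
    scores: dict[str, int] = field(default_factory=dict)
    log: list[tuple[str, str]] = field(default_factory=list)

    def respond(self, step: Step, choice: str) -> None:
        self.log.append((step.step_id, choice))  # raw log data, kept regardless of score
        for indicator in step.indicators:
            # An option that does not mention an indicator scores 0 on it.
            self.scores[indicator] = step.scoring[choice].get(indicator, 0)


def uncovered(framework: set[str], steps: list[Step]) -> set[str]:
    """Return the framework indicators that no step in the scenario records."""
    recorded = set().union(*(s.indicators for s in steps))
    return framework - recorded
```

For example, a two-step scenario with the indicators `search` and `reliability` leaves a third framework indicator such as `privacy` unassessed. `uncovered` flags that gap before any data are collected.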

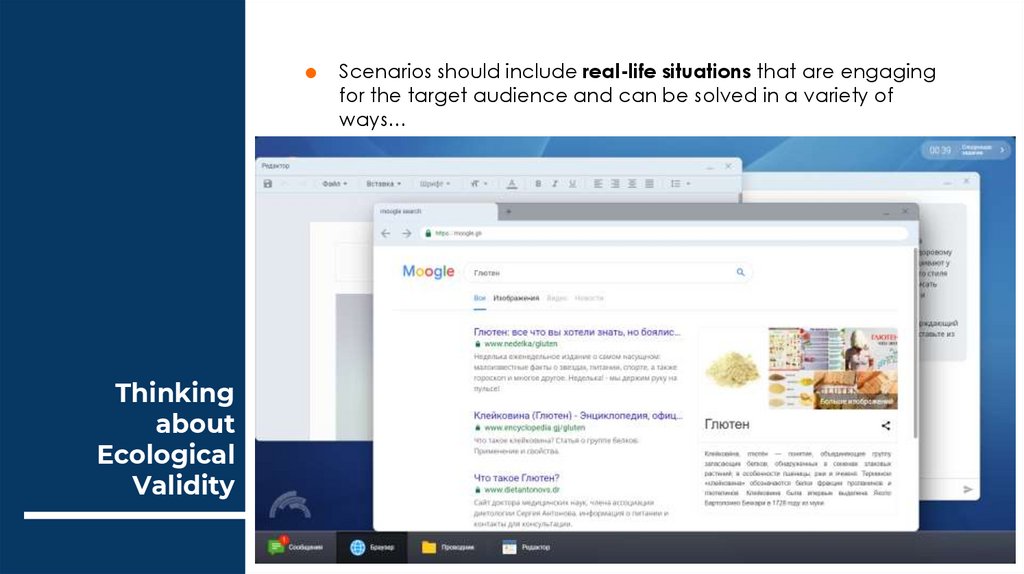

Thinking about ecological validity

«Scenarios should include real-life situations that are engaging for the target audience and can be solved in a variety of ways…»

Convergence: what is it?

«Convergence means that each step performed by test takers is independent of the previous and the next steps» (He, von Davier, Greiff, Steinhauer, & Borysewicz, 2017)

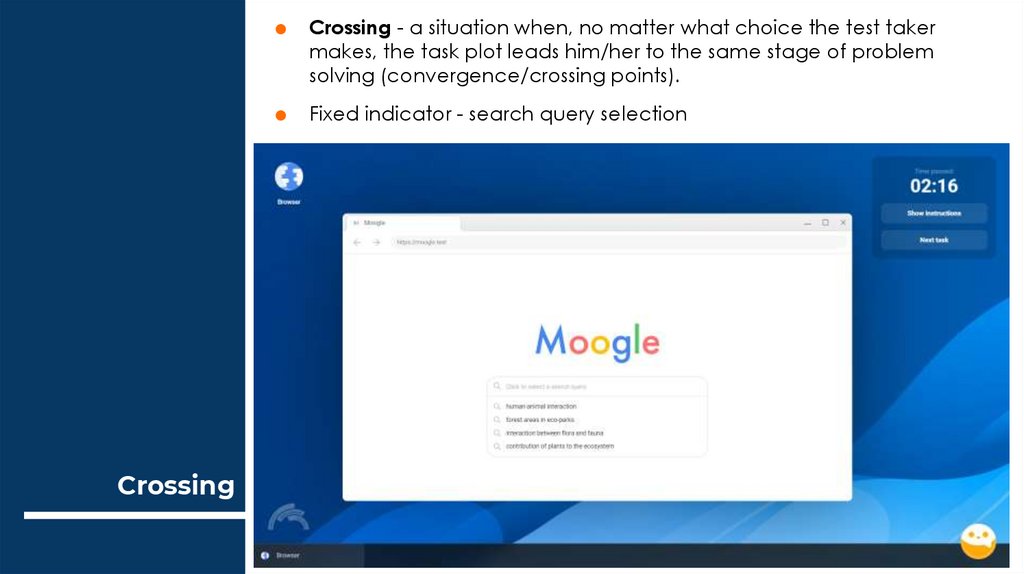

Crossing

Crossing is a situation in which, no matter what choice the test taker makes, the task plot leads them to the same stage of problem solving (convergence/crossing points).

Fixed indicator: search query selection.
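Crossing points can be checked mechanically if a scenario is modelled as a directed acyclic graph of plot points, with one edge per choice. The following is a minimal sketch under that assumption. The graph, the node names (including a hypothetical `hint` node), and the function names are illustrative only. A crossing point is a node that lies on every path from start to end. The crossing points are therefore the intersection of the node sets of all paths.

```python
def all_paths(graph: dict[str, list[str]], start: str, end: str) -> list[list[str]]:
    """Enumerate every path from start to end in an acyclic scenario graph (depth-first)."""
    if start == end:
        return [[end]]
    paths = []
    for nxt in graph.get(start, []):
        for tail in all_paths(graph, nxt, end):
            paths.append([start] + tail)
    return paths  # empty if start is a dead end


def crossing_points(graph: dict[str, list[str]], start: str, end: str) -> set[str]:
    """Plot points that every path passes through, whatever the test taker chooses."""
    paths = all_paths(graph, start, end)
    if not paths:
        return set()
    common = set(paths[0])
    for path in paths[1:]:
        common &= set(path)
    return common


def missing_checkpoints(graph: dict[str, list[str]], start: str, end: str,
                        required: set[str]) -> set[str]:
    """Required plot points (where indicators are recorded) that some path skips."""
    return required - crossing_points(graph, start, end)


# Hypothetical search-task scenario: three query choices that converge on "results".
scenario = {
    "intro": ["query_A", "query_B", "query_C"],
    "query_A": ["results"],
    "query_B": ["results"],
    "query_C": ["hint"],  # suboptimal query: an agent hint leads back to the plot
    "hint": ["results"],
    "results": ["judge_source"],
    "judge_source": ["end"],
}
```

Here `crossing_points(scenario, "intro", "end")` yields `{"intro", "results", "judge_source", "end"}`. If `query_B` instead jumped straight to `end`, then `results` and `judge_source` would no longer be crossing points. The indicators recorded there would be missing for some respondents. Enumerating all paths grows quickly with the number of branches. That cost is acceptable for hand-built scenarios of a few dozen nodes.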

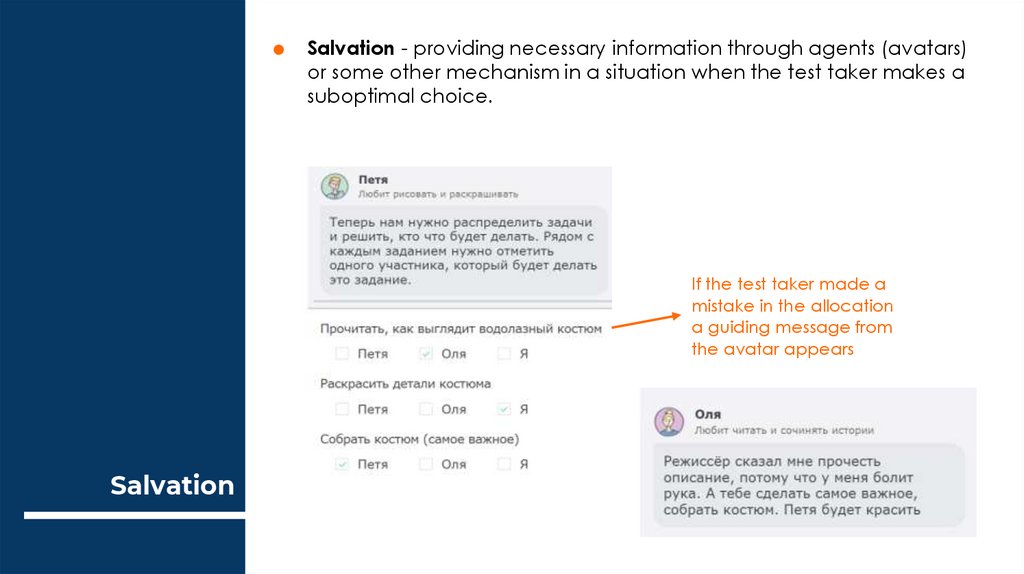

Salvation

Salvation means providing the necessary information through agents (avatars) or some other mechanism when the test taker makes a suboptimal choice.

If the test taker makes a mistake in the allocation, a guiding message from the avatar appears.
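Below is a minimal sketch of the salvation mechanism. It assumes a single scored choice per step and a hypothetical `allocate` function and `Outcome` record, neither of which comes from the slides. The suboptimal choice still receives no credit. However, the avatar's message supplies the missing information, and the plot continues to the same next stage. This keeps later indicators observable for every test taker.

```python
from typing import NamedTuple, Optional


class Outcome(NamedTuple):
    score: int                    # credit recorded for this step's indicator
    next_step: str                # plot point the scenario continues to
    agent_message: Optional[str]  # avatar guidance, shown only after a suboptimal choice


def allocate(choice: str, correct: str, next_step: str, hint: str) -> Outcome:
    """Score the choice, then 'save' the test taker so the plot can continue.

    A suboptimal choice keeps a score of 0, but the avatar supplies the missing
    information, and both branches lead to the same next plot point.
    """
    if choice == correct:
        return Outcome(score=1, next_step=next_step, agent_message=None)
    return Outcome(score=0, next_step=next_step, agent_message=hint)
```

Because the score is fixed before the hint is shown, the rescue does not inflate the indicator for this step.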

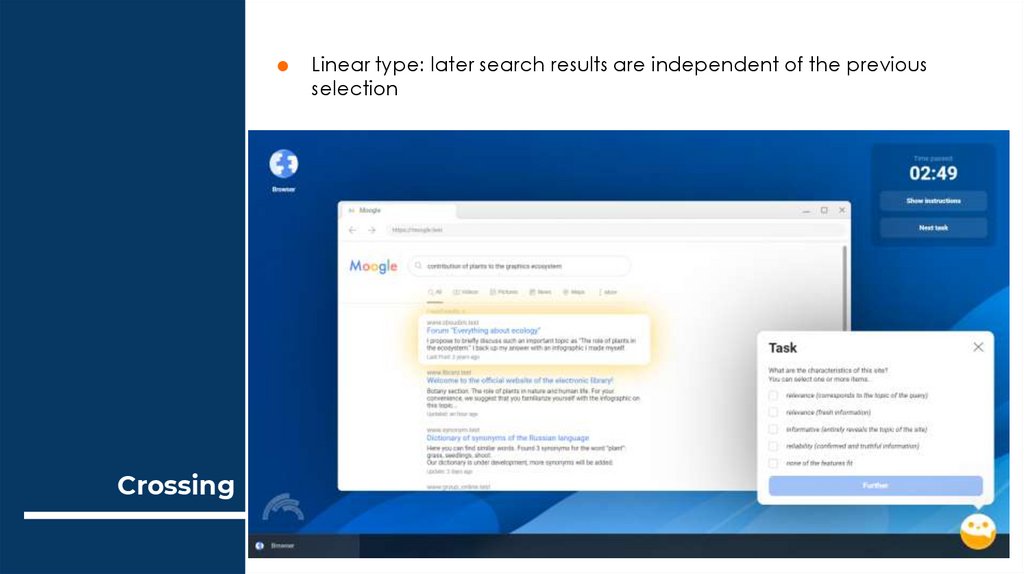

Crossing

Linear type: later search results are independent of the previous selection.

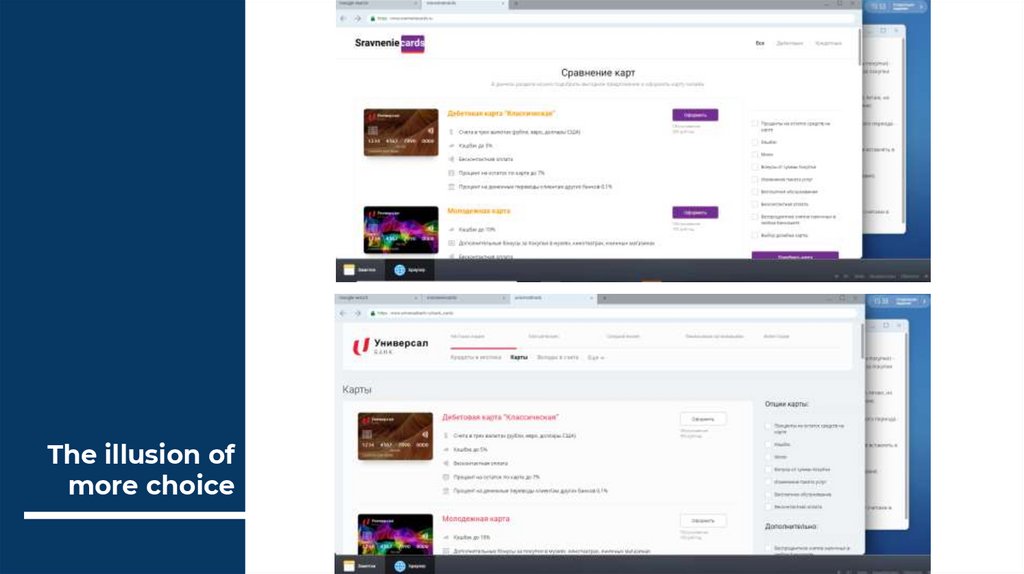

«Stoppers»

Linear type: «stoppers» and the illusion of more choice

The illusion of more choice

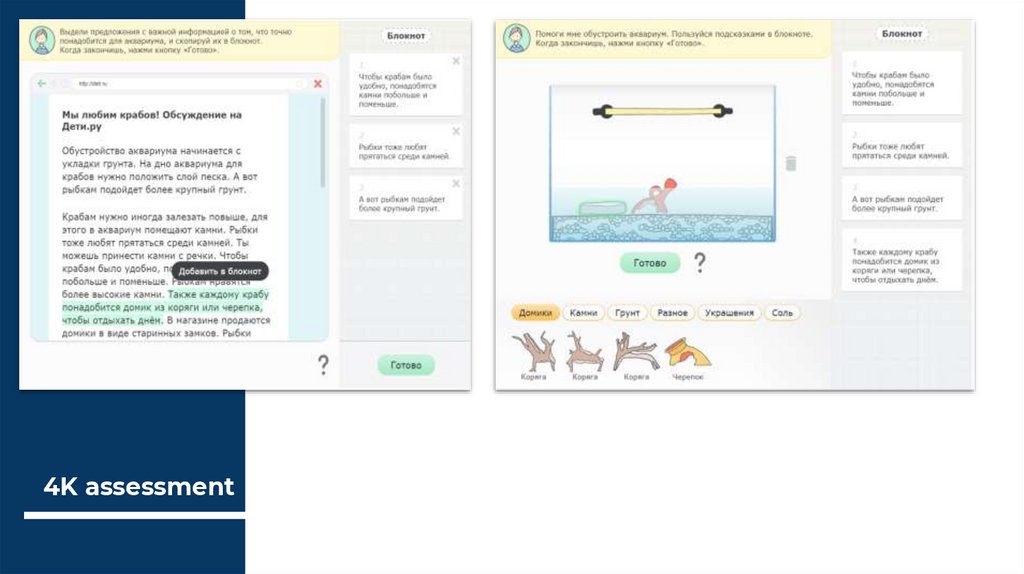

4K assessment

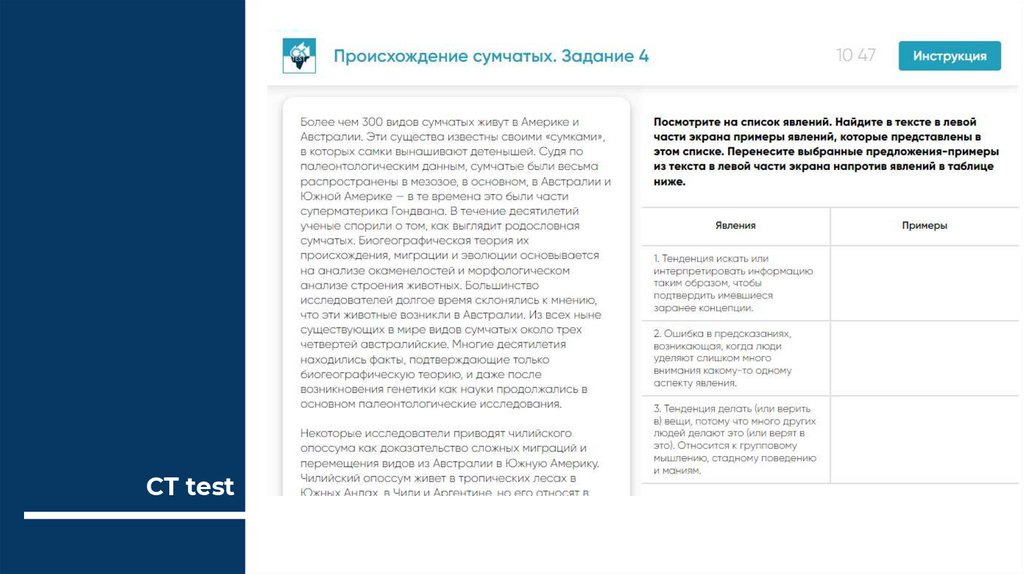

CT test

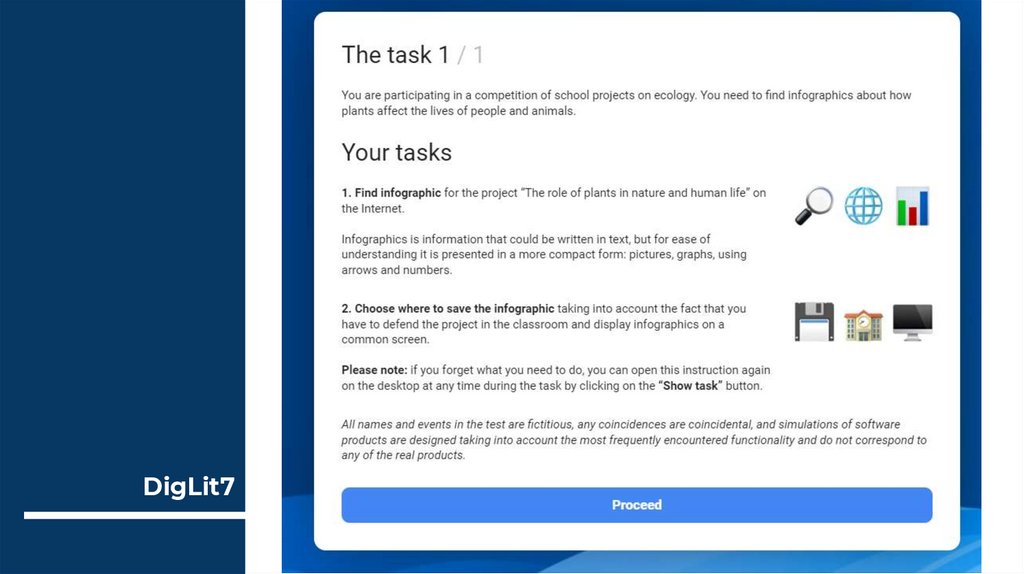

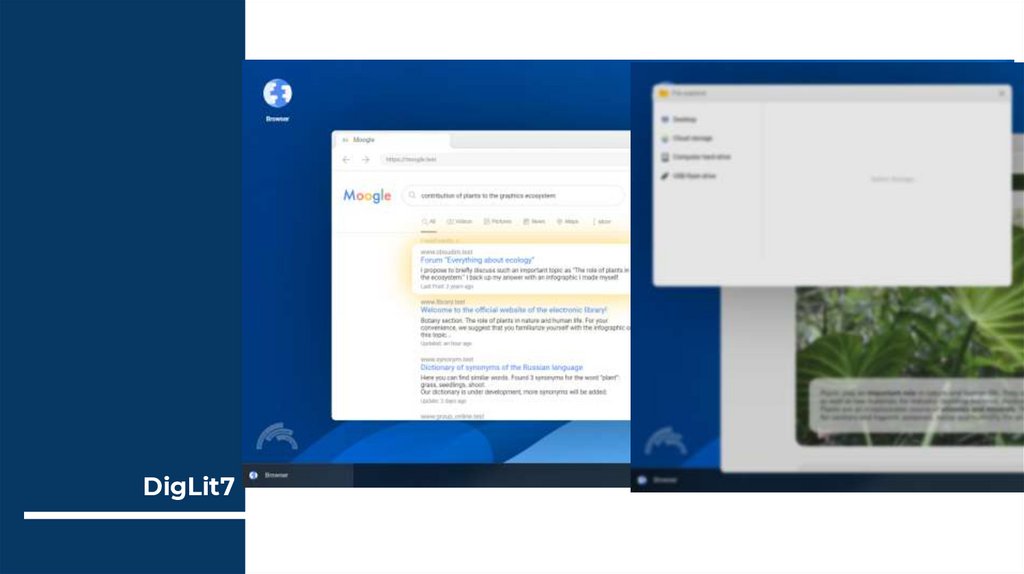

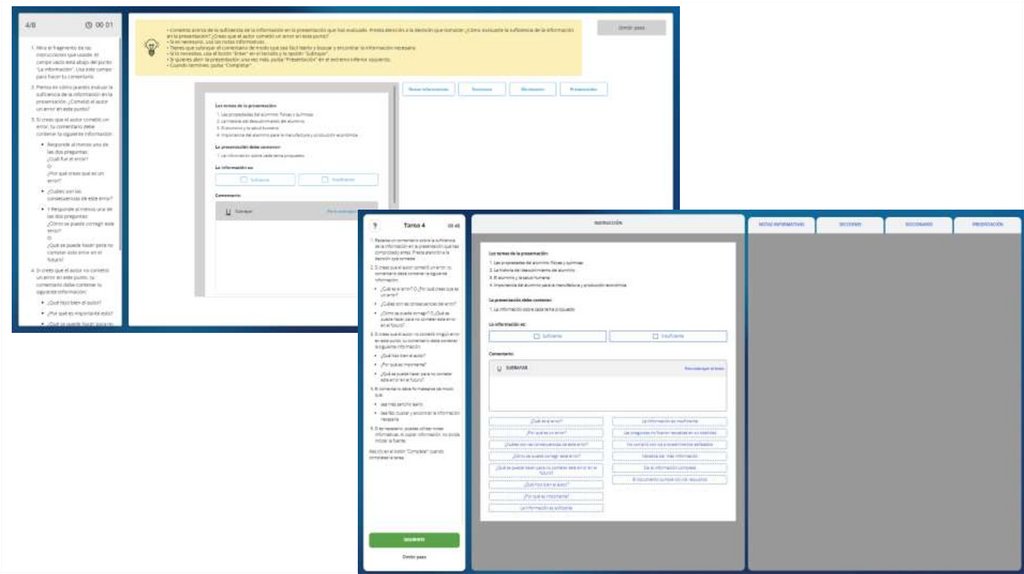

DigLit7

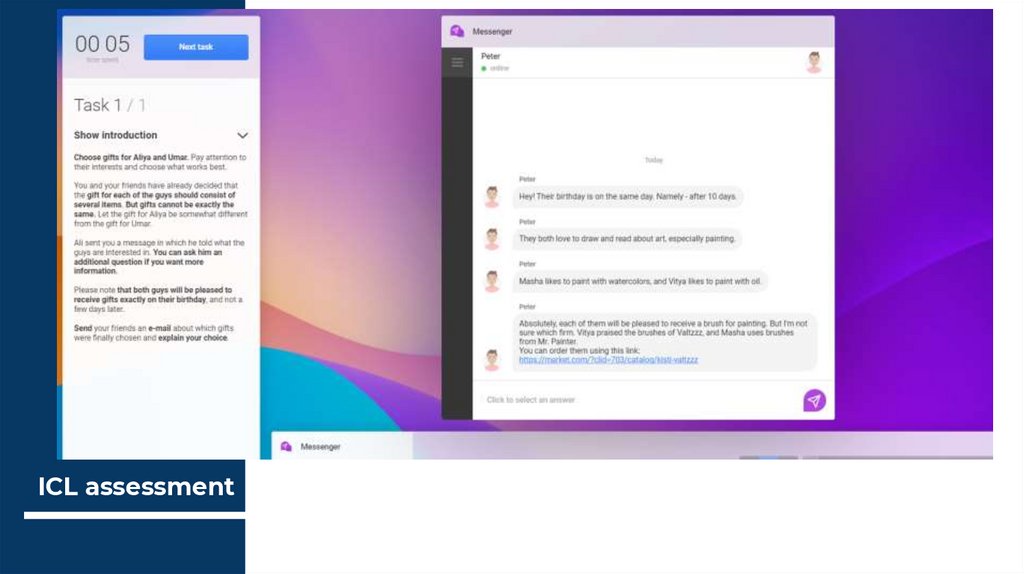

ICL assessment

Advantages

• time savings: once created, tests are easy to replicate, and test results are easily accessible;

• faster processing of results and feedback;

• easy storage of test results;

• ease of access: test takers can work at a time convenient for them (good for self-study);

• a higher degree of test security;

• a variety of tasks and opportunities to use technology (e.g., more graphic tasks, different colors, animation, video, sound) (Harmes & Parshall, 2010); for example, a science test can allow students to design, model, and conduct simulation experiments (Boyle & Hutchinson, 2009; Jodoin, 2003; Kane, 2006; Parshall, Harmes, Davey, & Pashley, 2010; Tarrant, Knierim, Hayes, & Ware, 2006);

• the use of item banks to assemble different test forms for different test takers, which makes cheating harder;

• automatic recording of the time spent on each task, which can be useful as additional information;

• the ability to measure constructs and competencies that cannot be fully or adequately measured by a paper test (Bennett, 2002; Parshall, Harmes, Davey, & Pashley, 2010);

• the possibility of adaptive testing;

• recording of additional information (digital traces, log data);

• improved measurement quality through greater accuracy and efficiency of the measurement process (Parshall, Spray, Kalohn, & Davey, 2001; van der Linden & Glas, 2000; Wainer, 1990);

• integration with educational software.
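Adaptive testing combines several of the advantages above: an item bank, automatic scoring, and an immediate update after each response. The following is a minimal sketch of one common approach. It is not one described in this presentation. It assumes items calibrated under the Rasch model and selects the unused item with maximum Fisher information at the current ability estimate. Ability is then re-estimated by expected a posteriori (EAP) estimation with a standard normal prior on a grid. The item names and difficulties are hypothetical.

```python
import math


def p_correct(theta: float, b: float) -> float:
    """Rasch model: probability of a correct answer given ability theta and item difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_information(theta: float, b: float) -> float:
    """Fisher information of a Rasch item at theta, P * (1 - P)."""
    p = p_correct(theta, b)
    return p * (1.0 - p)


def next_item(theta: float, bank: dict[str, float], used: set[str]) -> str:
    """Pick the unused item that is most informative at the current ability estimate.

    Raises ValueError if every item in the bank has already been used.
    """
    candidates = {k: b for k, b in bank.items() if k not in used}
    return max(candidates, key=lambda k: item_information(theta, candidates[k]))


def eap_theta(responses: list[tuple[float, int]]) -> float:
    """EAP ability estimate from (difficulty, correct 0/1) pairs, N(0, 1) prior on [-4, 4]."""
    grid = [-4.0 + 0.1 * i for i in range(81)]
    weights = []
    for t in grid:
        w = math.exp(-t * t / 2.0)  # unnormalised standard normal prior
        for b, correct in responses:
            p = p_correct(t, b)
            w *= p if correct else 1.0 - p  # likelihood of the observed response
        weights.append(w)
    total = sum(weights)
    return sum(t * w for t, w in zip(grid, weights)) / total
```

In use, a test loop starts at `theta = 0.0`. It calls `next_item`, records the response together with its response time in the log, updates `theta = eap_theta(responses)`, and repeats until a length or precision criterion is met. EAP is used here instead of maximum likelihood because it remains finite when all answers so far are correct or all are incorrect.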

Limitations

• expensive development (including subsequent administration and use);

• the test may capture construct-irrelevant factors;

• the test taker's interaction with the test (e.g., the influence of the interface) is not always studied or understood;

• different formats function differently, and each requires its own independent study;

• the need to use complex models (computational psychometrics)

(Bachman, 2002; Haigh, 2011; Huff & Sireci, 2001; Parshall & Harmes, 2014; Sireci & Zenisky, 2006)

Other features

• the test taker's level of computer skills must be taken into account;

• the respondent cannot view the entire test to gauge its overall difficulty and the difficulty of what remains to be completed;

• as a rule, it is impossible to return to completed tasks and correct answers;

• in some cases, tasks are perceived less well in computer form.

A bit of practice

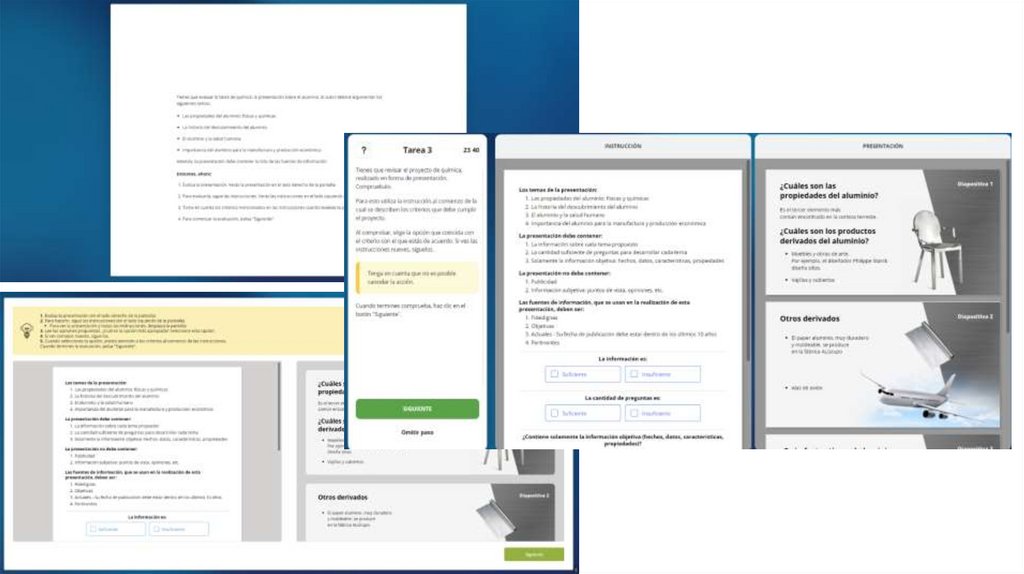

Imagine you need to develop part of a task to measure components of digital literacy: Digital Communication and Critical Thinking.

Think of a situation/dialogue that would assess the following indicators:

Digital Communication

• Respects other people's opinions, history, and culture.

• Respects personal privacy limits when communicating on social networks.

• Maintains the privacy of personal correspondence.

• Respects copyright law, including citing the source.

Critical thinking

• Makes a clear judgment based on the information presented for argumentation.

• Evaluates the reliability of information.

You can choose one indicator and build a situation around it.

You have 10 minutes to prepare.

Thank you for your attention!

ktarasova@hse.ru
