The Impact of Social Media and Artificial Intelligence on Human Behavior

1. The Impact of Social Media and Artificial Intelligence on Human Behavior

Egor Sigov3.2

2. Relevance

• Global Digital Integration

• Over 4.9 billion people use social media (DataReportal, 2024), making algorithmic influence a mass-scale phenomenon.

• Rapid Technological Advances

• Generative AI (e.g., deepfakes, LLMs like GPT-4) blurs the line between human and

machine-generated content, raising ethical concerns.

• Global Crises and Manipulation Risks

• Disinformation: Fake news, increasingly AI-generated, can influence elections (e.g., 2016 U.S. election, 2024 global polls).

• Regulatory and Ethical Debates

• Open questions: Who controls algorithms? Can transparency (e.g., OpenAI’s

disclosures) mitigate harm?

3. Fields of research

• Sociology

• Focus: How algorithms reshape social norms, inequality, and collective action (e.g., Arab Spring, Black Lives Matter).

• Political Science

• Focus: AI in governance (e.g., China’s Social Credit System)

and microtargeting in elections.

• Beyond academic fields:

• Policy: Shaping laws to protect privacy/democracy.

• Business: Ethical AI design (e.g., Apple’s "Screen Time" features).

4. Research Methods

• Digital Ethnography – Observing behavior on platforms (e.g., how people interact on TikTok).

• Big Data Analysis – Studying millions of posts to detect patterns

(disinformation spread).

• Experiments – A/B testing (how different algorithms affect behavior).

• In-Depth Interviews – Understanding how users perceive algorithmic

influence.
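The A/B testing method listed above can be illustrated with a minimal sketch: a two-proportion z-test that compares how often users in two feed variants perform some action. The function name, the variant descriptions, and all counts below are hypothetical and are not taken from any cited study.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis that both variants behave alike.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: users who shared a post under each feed variant.
# Variant A: chronological feed; variant B: engagement-ranked feed.
z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(f"z = {z:.3f}, p = {p:.4f}")
```

A small p-value would suggest that the two variants differ in how often users share, but it says nothing about why, which is where interviews and ethnography come in.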

5. Key Studies

• The Facebook Experiment (2014) – Facebook secretly manipulated users’ feeds to measure "emotional contagion." Result: Moods can be artificially altered.

• "Auditing Radicalization Pathways on YouTube" (Ribeiro et al., 2020) – Users on YouTube were found to migrate consistently from milder to more extreme content, with recommendations examined as one possible pathway.

Study:

Ribeiro, M. H., Ottoni, R., West, R., Almeida, V. A. F., & Meira, W. (2020). Auditing radicalization pathways on YouTube. Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* '20), 131–141.

• "Digital Wellbeing" (Google, 2018) – Screen-time controls were introduced

after researcher pressure.

6. Conclusions Based on Previous Research

✅ Social media and algorithms reshape behavior – from political views to mental health.

✅ People underestimate the influence of algorithms – it operates largely below conscious awareness.

✅ Technology exacerbates inequality – some gain opportunities,

others get trapped in "digital loops."

✅ Regulation lags behind – Laws like GDPR and the EU’s Digital

Services Act aim to curb manipulation, but tech evolves faster.

7. Future Questions

• How will AI (e.g., ChatGPT) reshape socialization?

• How to prevent digital control of society?

• How to keep artificial intelligence from mediating interactions between society and the government?

8. Hypothesis

• How will AI reshape socialization?

• Generative AI (like ChatGPT) will fundamentally alter human socialization by: 1) reducing face-to-face interactions; 2) introducing AI-mediated communication as a new norm; 3) reshaping identity formation through personalized AI interactions. The expected result is both increased efficiency in social exchanges and a potential decline in deep emotional connections.

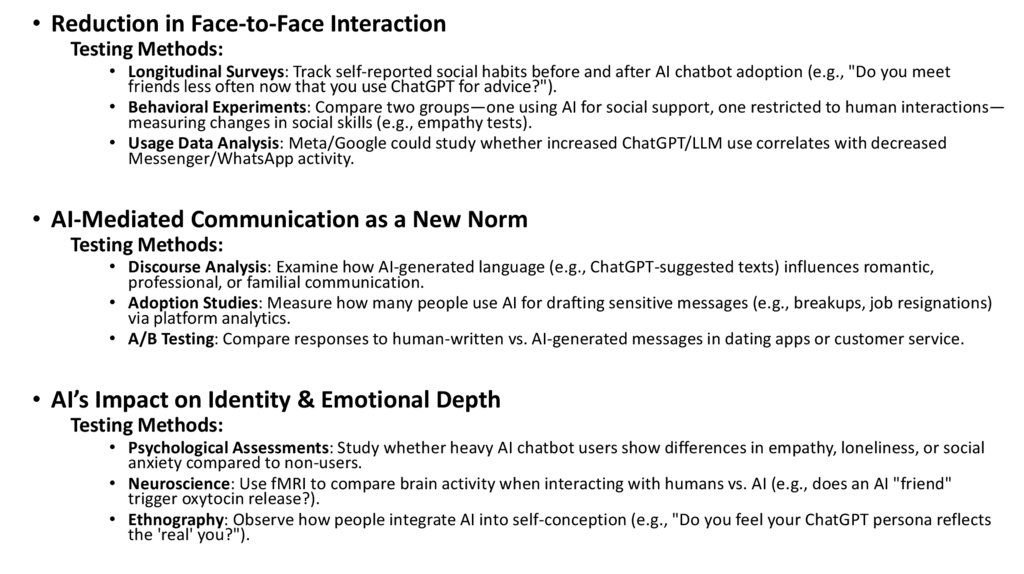

9.

• Reduction in Face-to-Face Interaction

Testing Methods:

• Longitudinal Surveys: Track self-reported social habits before and after AI chatbot adoption (e.g., "Do you meet

friends less often now that you use ChatGPT for advice?").

• Behavioral Experiments: Compare two groups—one using AI for social support, one restricted to human interactions—

measuring changes in social skills (e.g., empathy tests).

• Usage Data Analysis: Meta/Google could study whether increased ChatGPT/LLM use correlates with decreased

Messenger/WhatsApp activity.

• AI-Mediated Communication as a New Norm

Testing Methods:

• Discourse Analysis: Examine how AI-generated language (e.g., ChatGPT-suggested texts) influences romantic,

professional, or familial communication.

• Adoption Studies: Measure how many people use AI for drafting sensitive messages (e.g., breakups, job resignations)

via platform analytics.

• A/B Testing: Compare responses to human-written vs. AI-generated messages in dating apps or customer service.

• AI’s Impact on Identity & Emotional Depth

Testing Methods:

• Psychological Assessments: Study whether heavy AI chatbot users show differences in empathy, loneliness, or social

anxiety compared to non-users.

• Neuroscience: Use fMRI to compare brain activity when interacting with humans vs. AI, alongside hormone assays (e.g., does an AI "friend" trigger oxytocin release?).

• Ethnography: Observe how people integrate AI into self-conception (e.g., "Do you feel your ChatGPT persona reflects

the 'real' you?").
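The Usage Data Analysis method above asks whether more LLM use goes together with less messaging activity. A minimal sketch of that check is a Pearson correlation over per-user weekly figures. The function name and both data series are hypothetical, invented purely for illustration; no real platform data is used.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of cross-deviations and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-user data: weekly hours of chatbot use and weekly messages sent.
weekly_llm_hours = [0, 1, 2, 3, 4, 5]
weekly_messages_sent = [210, 200, 190, 170, 160, 150]

r = pearson_r(weekly_llm_hours, weekly_messages_sent)
print(f"r = {r:.3f}")
```

A strongly negative r would be consistent with the hypothesis, but correlation alone cannot show that chatbot use causes the drop in messaging, which is why the longitudinal surveys and behavioral experiments are listed alongside it.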

10. Links to referenced studies and resources

• https://dl.acm.org/doi/10.1145/3351095.3372879

"Auditing radicalization pathways on YouTube" (Ribeiro et al., 2020)

• DOI:10.1016/j.pmedr.2018.07.016

Twenge, J. M., et al. (2018). Associations between screen time and psychological

well-being. Preventive Medicine Reports, 11, 271–283. (Contextual research cited

by Google).

• Turkle, S. (2017). Alone Together: Why We Expect More from Technology and

Less from Each Other. Basic Books.

• https://doi.org/10.1073/pnas.1320040111

"The Facebook Experiment" (2014)

Kramer, A. D. I., Guillory, J. E., & Hancock, J. T. (2014). Experimental evidence of massive-scale emotional contagion through social networks. PNAS, 111(24), 8788–8790.
