Похожие презентации:

Face Recognition: From Scratch to Hatch

1.

2.

Face Recognition in Cloud@Mail.ruUsers upload photos to Cloud

Backend identifies persons on

photos, tags and show clusters

3.

Social networksYou

???

Your

Ex-Girlfriend

4.

5.

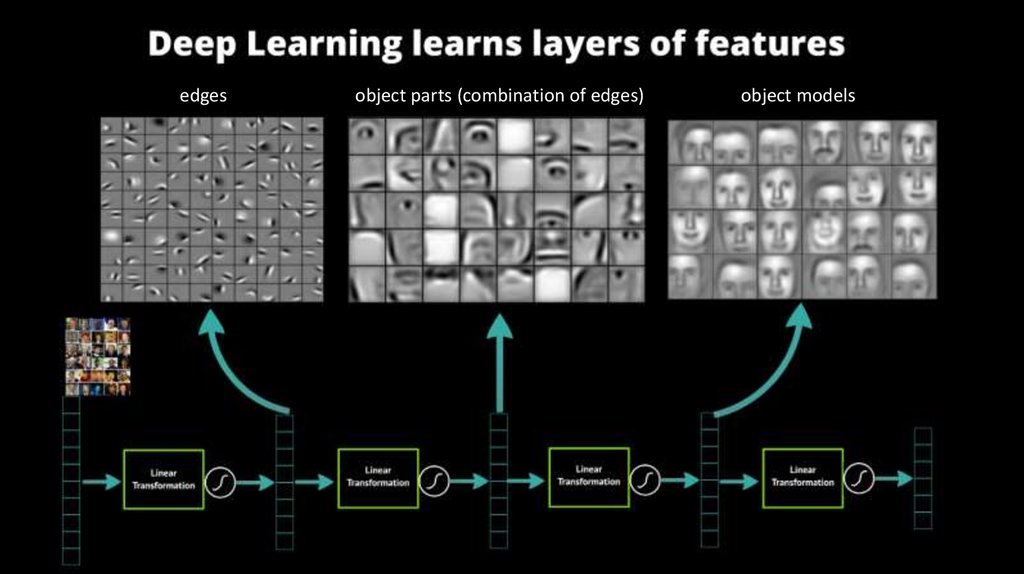

edgesobject parts (combination of edges)

object models

6.

7.

Face detection8.

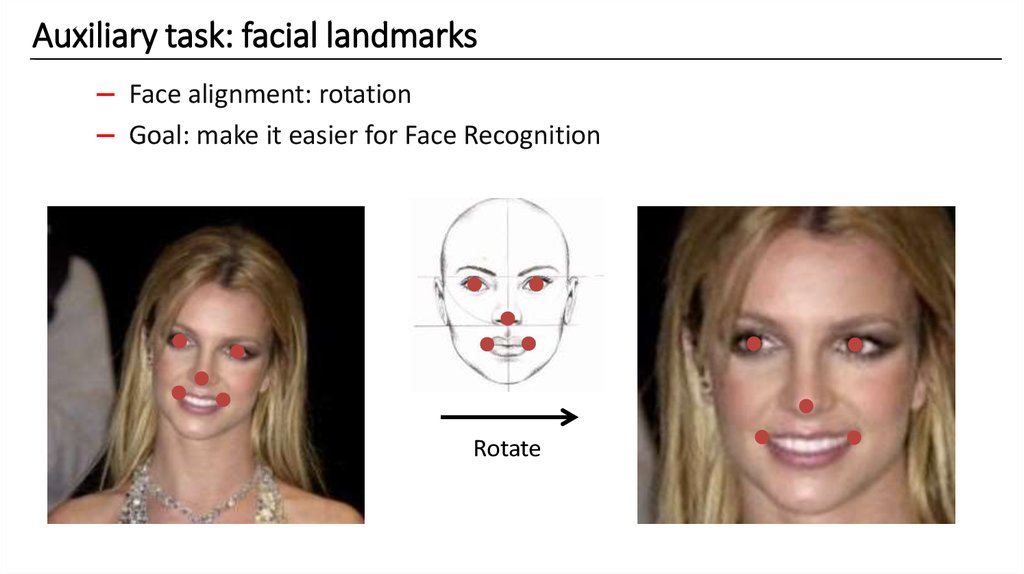

Auxiliary task: facial landmarks– Face alignment: rotation

– Goal: make it easier for Face Recognition

Rotate

9.

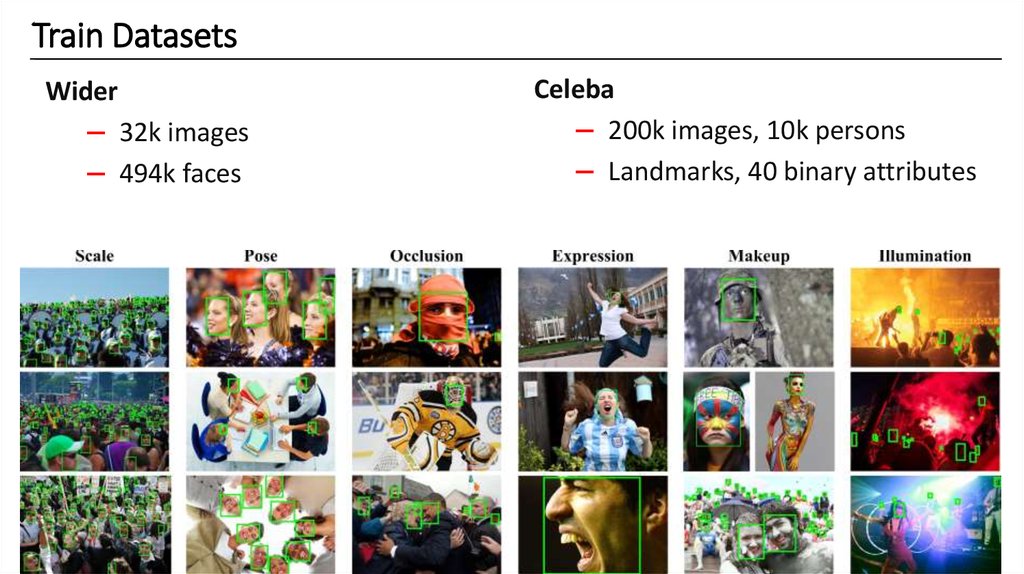

Train DatasetsWider

– 32k images

– 494k faces

Celeba

– 200k images, 10k persons

– Landmarks, 40 binary attributes

10.

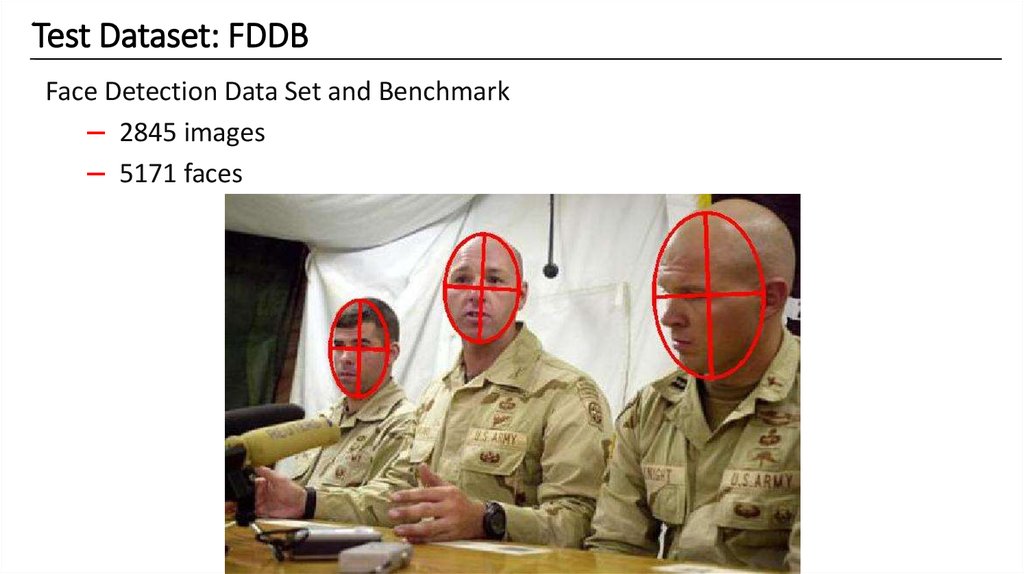

Test Dataset: FDDBFace Detection Data Set and Benchmark

– 2845 images

– 5171 faces

11.

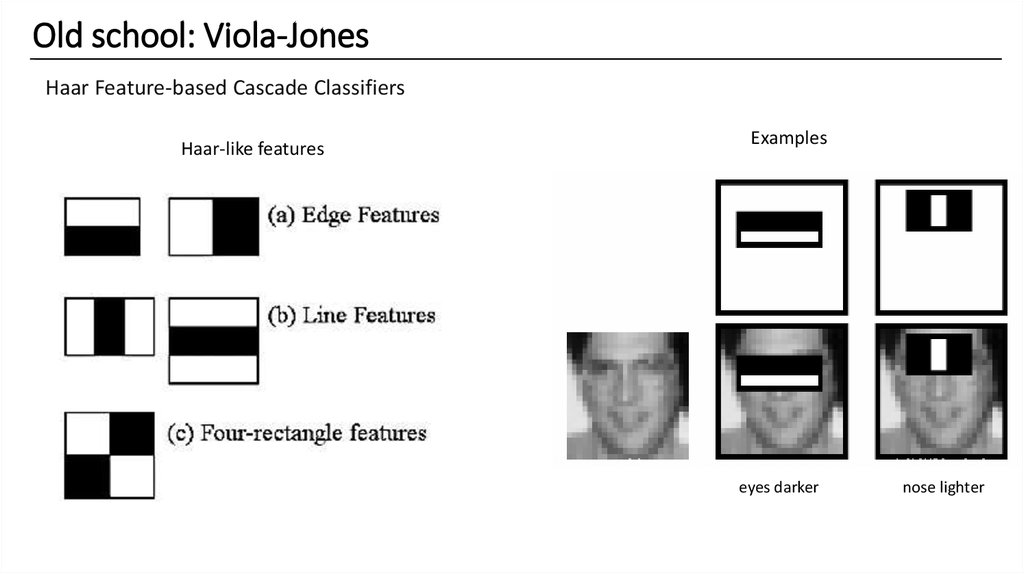

Old school: Viola-JonesHaar Feature-based Cascade Classifiers

Haar-like features

Examples

eyes darker

nose lighter

12.

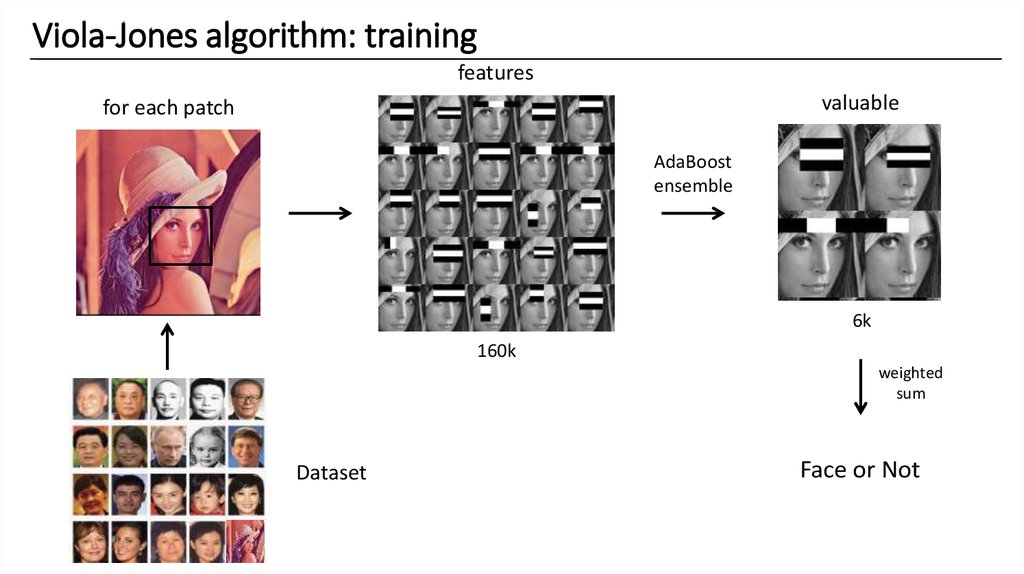

Viola-Jones algorithm: trainingfeatures

valuable

for each patch

AdaBoost

ensemble

6k

160k

weighted

sum

Dataset

Face or Not

13.

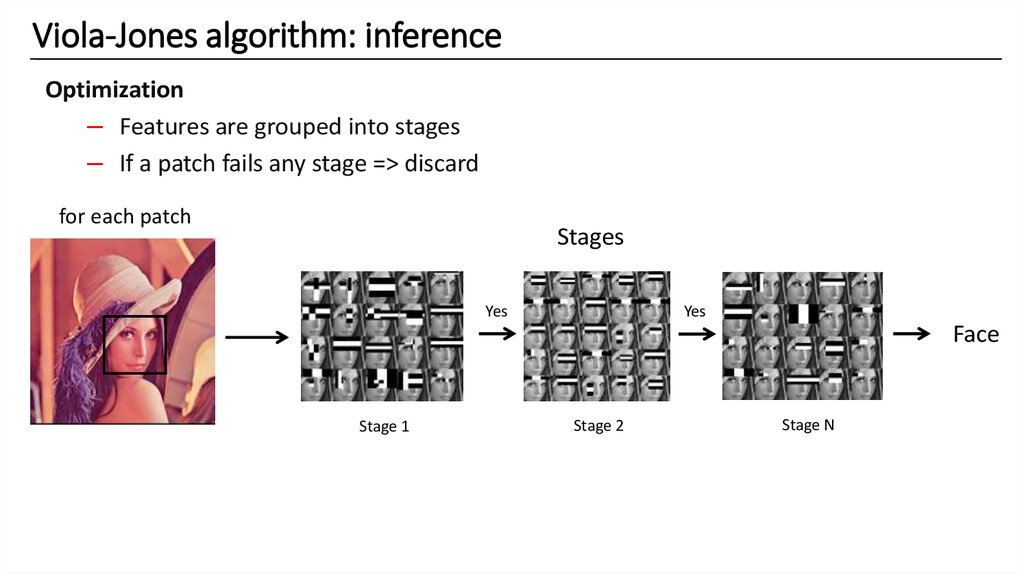

Viola-Jones algorithm: inferenceOptimization

– Features are grouped into stages

– If a patch fails any stage => discard

for each patch

Stages

Yes

Yes

Face

Stage 1

Stage 2

Stage N

14.

Viola-Jones resultsOpenCV implementation

– Fast: ~100ms on CPU

– Not accurate

FDDB results

0.45

15.

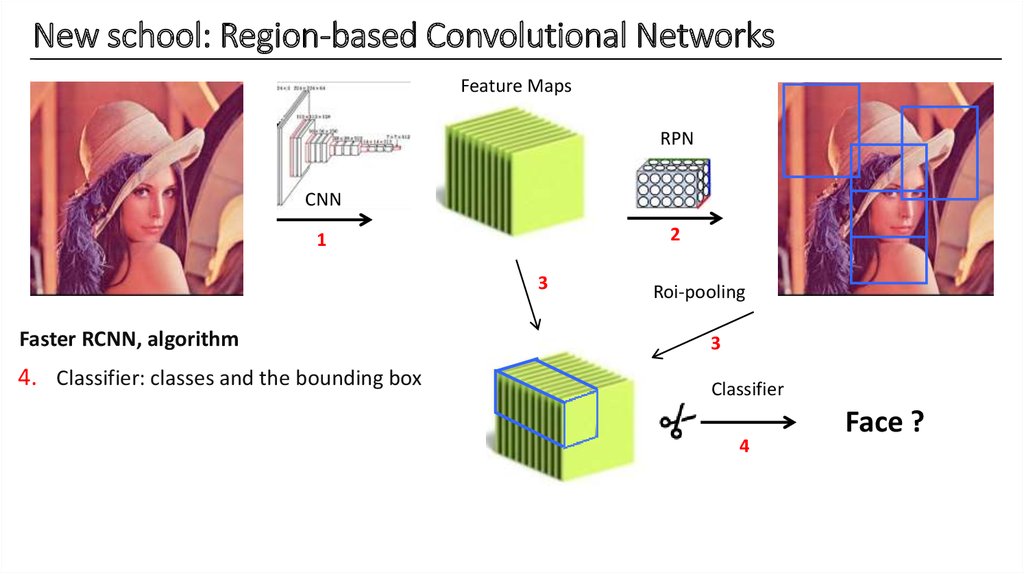

New school: Region-based Convolutional NetworksFeature Maps

RPN

CNN

2

1

3

Roi-pooling

Faster RCNN, algorithm

3

1.

Pre-trained

network:

extracting

features

2.

Region

proposal

network

4.

classes

andcorresponding

the bounding

box

3. Classifier:

RoI-pooling:

extract

tensor

Classifier

4

Face ?

16.

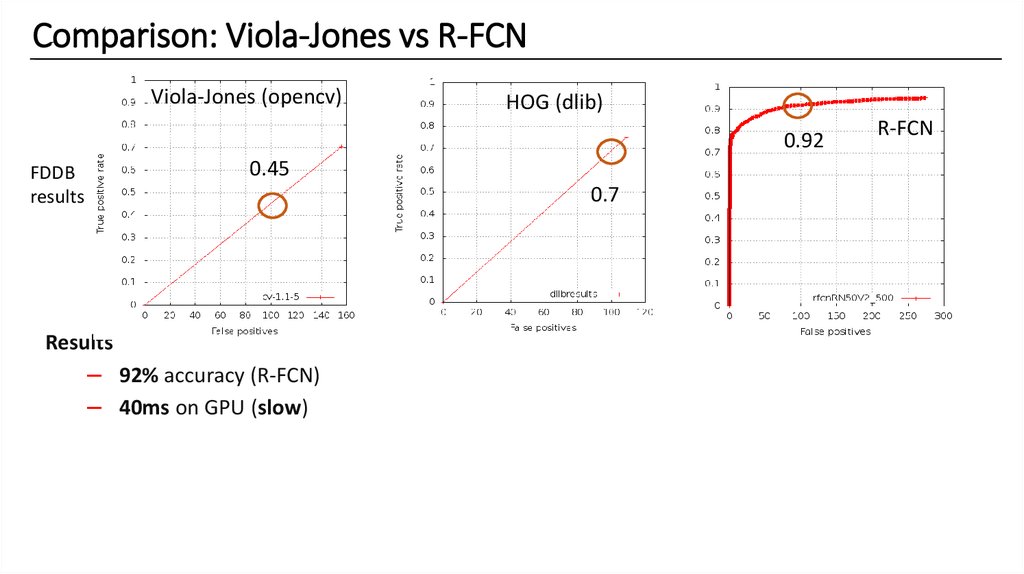

Comparison: Viola-Jones vs R-FCNViola-Jones (opencv)

HOG (dlib)

0.92

0.45

FDDB

results

0.7

Results

– 92% accuracy (R-FCN)

– 40ms on GPU (slow)

R-FCN

17.

Face detection: how fastWe need faster solution at the same accuracy!

Target: < 10ms

18.

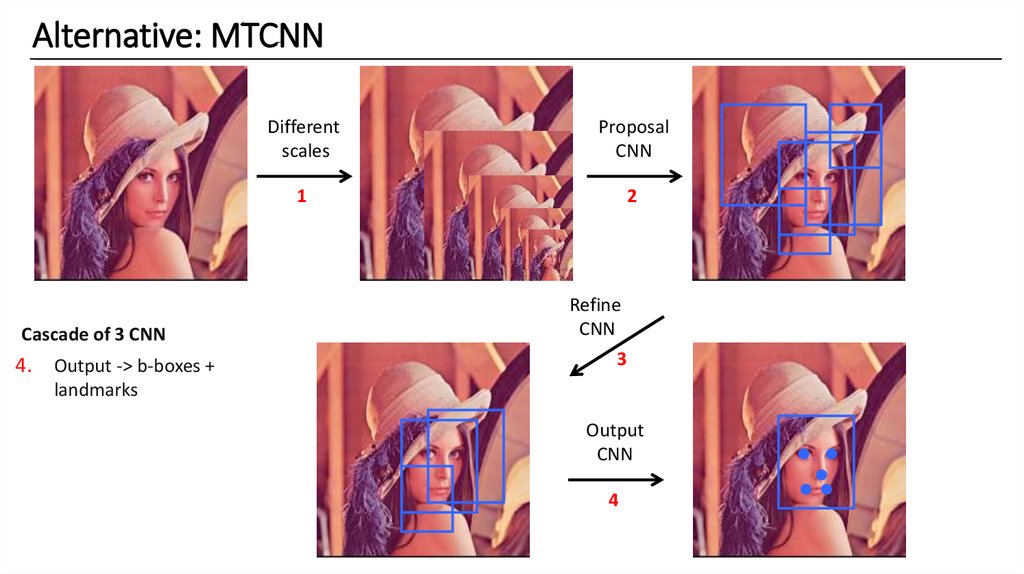

Alternative: MTCNNCascade of 3 CNN

2.

Proposal

candidates

+

1. Output

Resize ->

to

different

3.

Refine

calibration

4.

->->

b-boxes

+scales

Different

scales

Proposal

CNN

1

2

Refine

CNN

3

b-boxes

landmarks

Output

CNN

4

19.

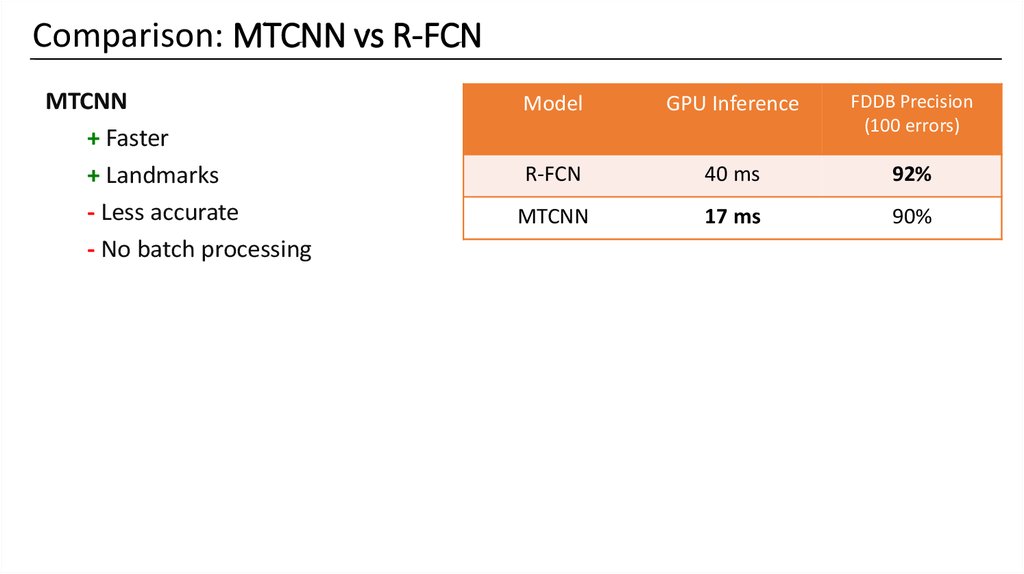

Comparison: MTCNN vs R-FCNMTCNN

+ Faster

+ Landmarks

- Less accurate

- No batch processing

Model

GPU Inference

FDDB Precision

(100 errors)

R-FCN

40 ms

92%

MTCNN

17 ms

90%

20.

21.

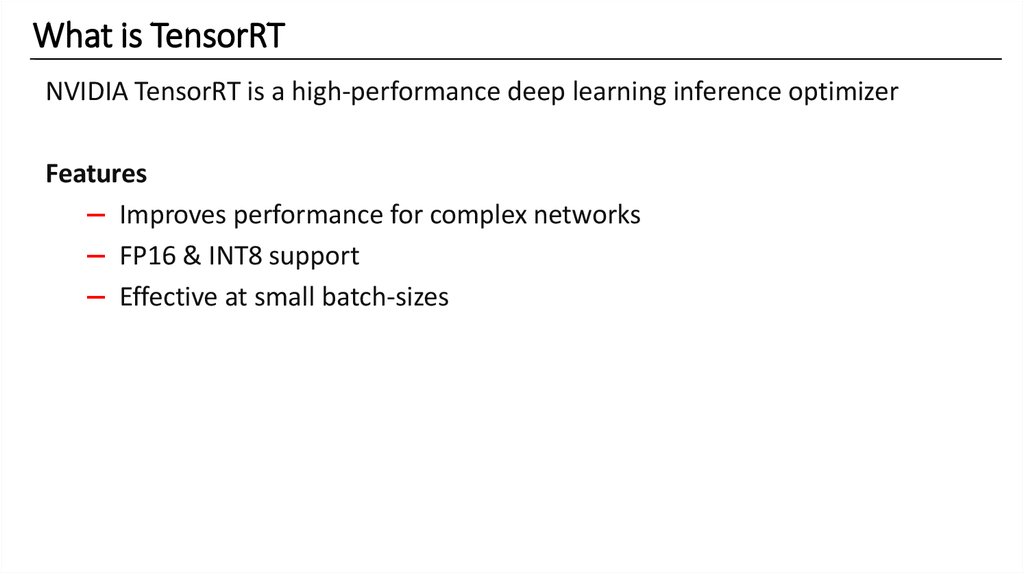

What is TensorRTNVIDIA TensorRT is a high-performance deep learning inference optimizer

Features

– Improves performance for complex networks

– FP16 & INT8 support

– Effective at small batch-sizes

22.

TensorRT: layer optimizations1. Vertical layer fusion

2. Horizontal fusion

3. Concat elision

23.

TensorRT: downsides1. Caffe + TensorFlow supported

2. Fixed input/batch size

3. Basic layers support

24.

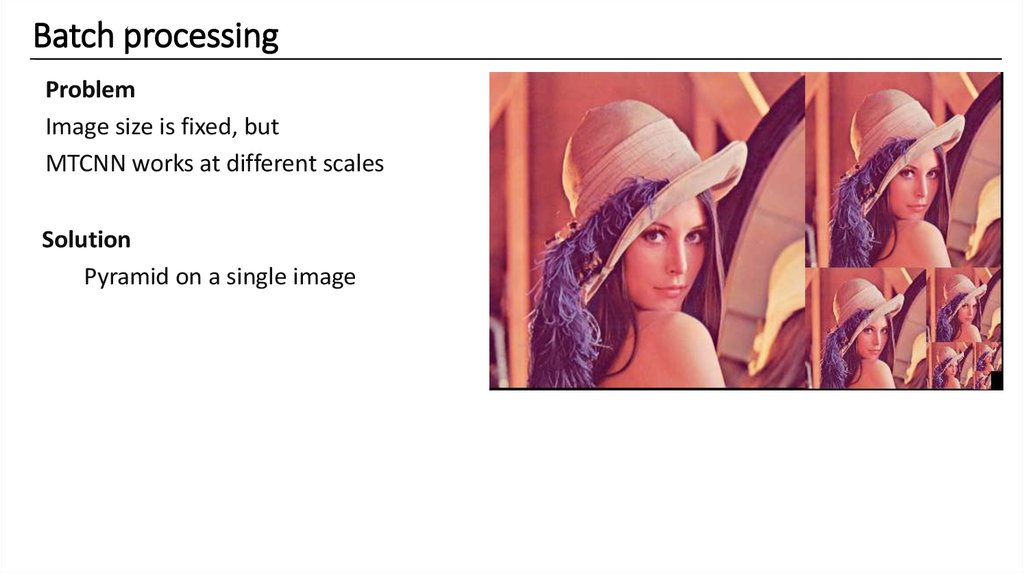

Batch processingProblem

Image size is fixed, but

MTCNN works at different scales

Solution

Pyramid on a single image

25.

Batch processingResults

– Single run

– Enables batch processing

Model

Inference

ms

MTCNN (Caffe, python)

17

MTCNN (Caffe, C++)

12.7

+ batch

10.7

26.

TensorRT: layersGPU Inference

ms

FDDB Precision

(100 errors)

MTCNN, batch

10.7

90%

+Tensor RT

8.8

91.2%

Model

Problem

No PReLU layer => default pre-trained

model can’t be used

Retrained with ReLU from scratch

-20%

27.

Face detection: inferenceTarget: < 10 ms

Result: 8.8 ms

Ingredients

1. MTCNN

2. Batch processing

3. TensorRT

28.

29.

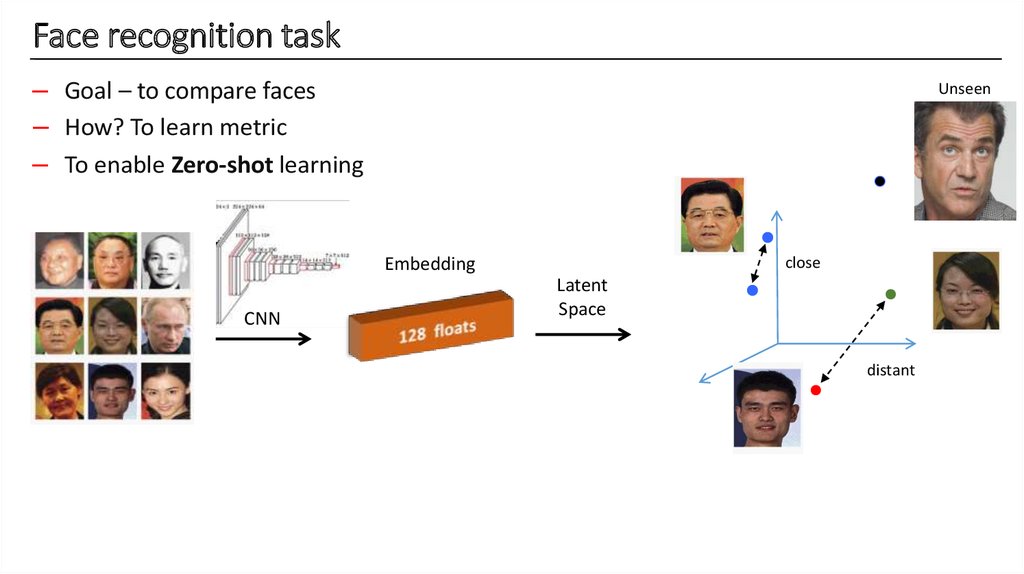

Face recognition task– Goal – to compare faces

– How? To learn metric

– To enable Zero-shot learning

Unseen

Embedding

CNN

close

Latent

Space

distant

30.

Training set: MSCeleb– Top 100k celebrities

– 10 Million images, 100 per person

– Noisy: constructed by leveraging public search engines

31.

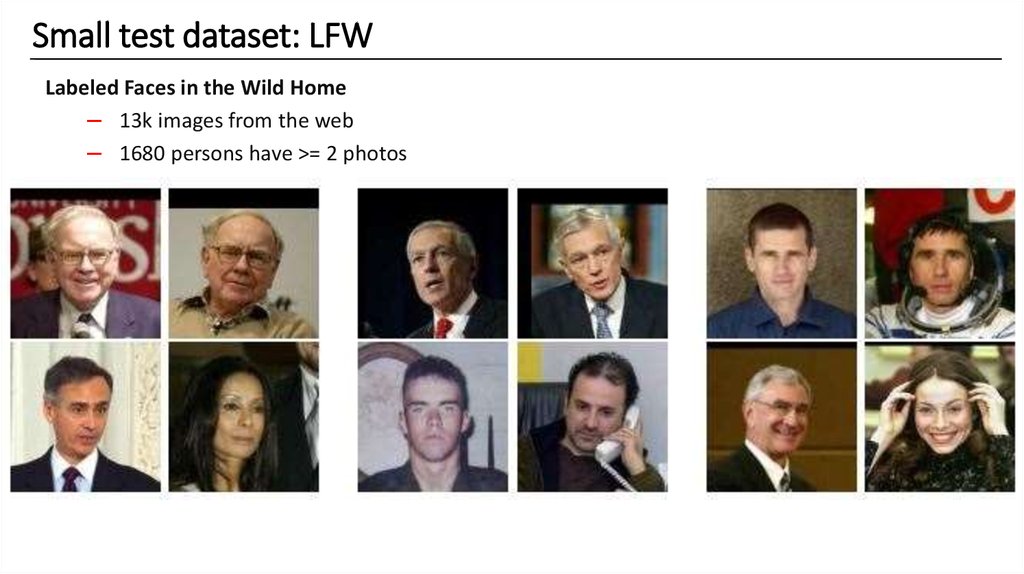

Small test dataset: LFWLabeled Faces in the Wild Home

– 13k images from the web

– 1680 persons have >= 2 photos

32.

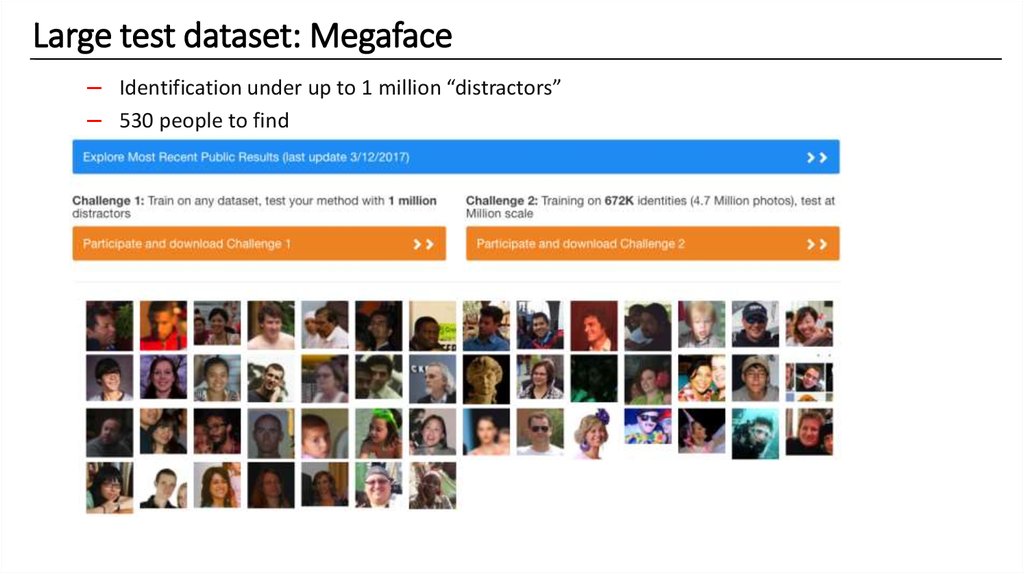

Large test dataset: Megaface– Identification under up to 1 million “distractors”

– 530 people to find

33.

Megaface leaderboard~98%

cleaned

~83%

34. Metric Learning

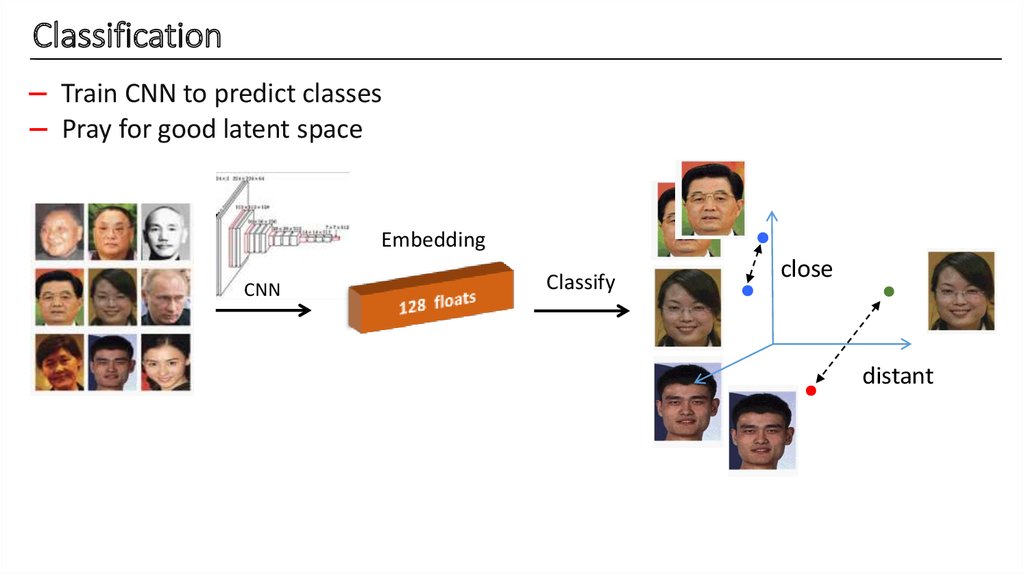

35.

Classification– Train CNN to predict classes

– Pray for good latent space

Embedding

CNN

Classify

close

distant

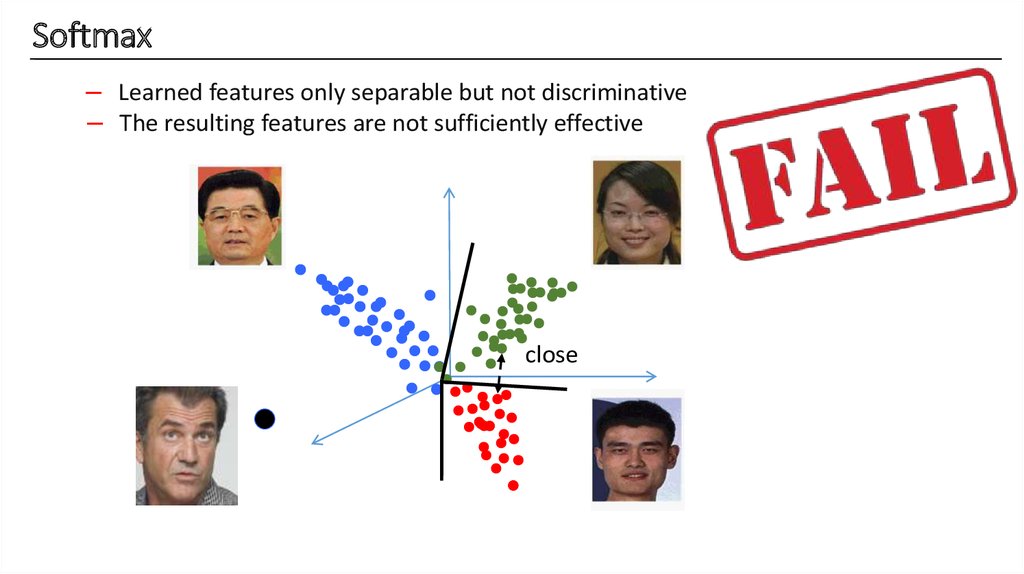

36.

Softmax– Learned features only separable but not discriminative

– The resulting features are not sufficiently effective

close

37.

We need metric learning– Tightness of the cluster

– Discriminative features

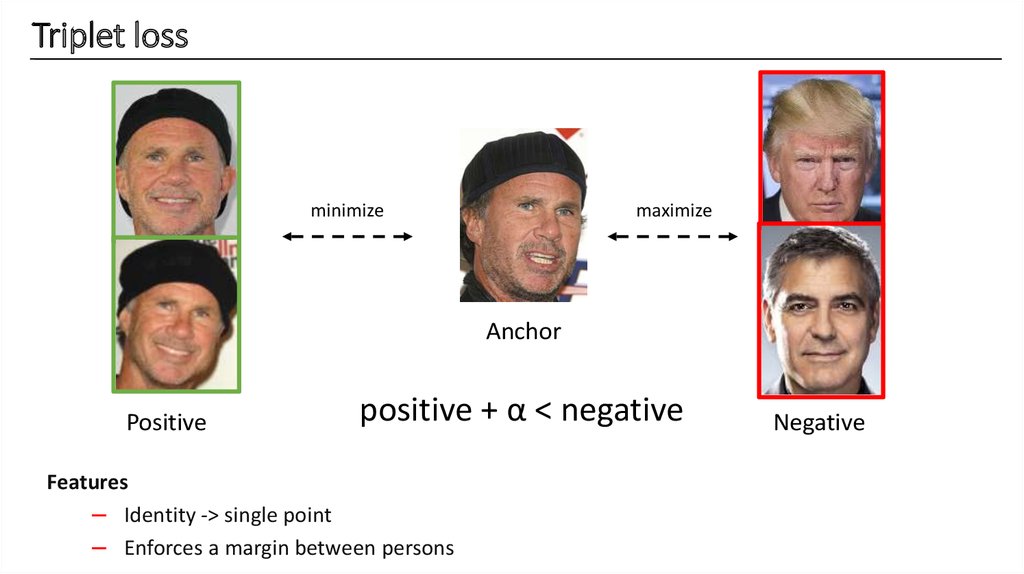

38.

Triplet lossmaximize

minimize

Anchor

Positive

positive + α < negative

Features

– Identity -> single point

– Enforces a margin between persons

Negative

39.

Choosing tripletsCrucial problem

How to choose triplets ? Useful triplets = hardest errors

Solution

Hard-mining within a large mini-batch (>1000)

Hard enough

Pick all

positive

Too easy

40.

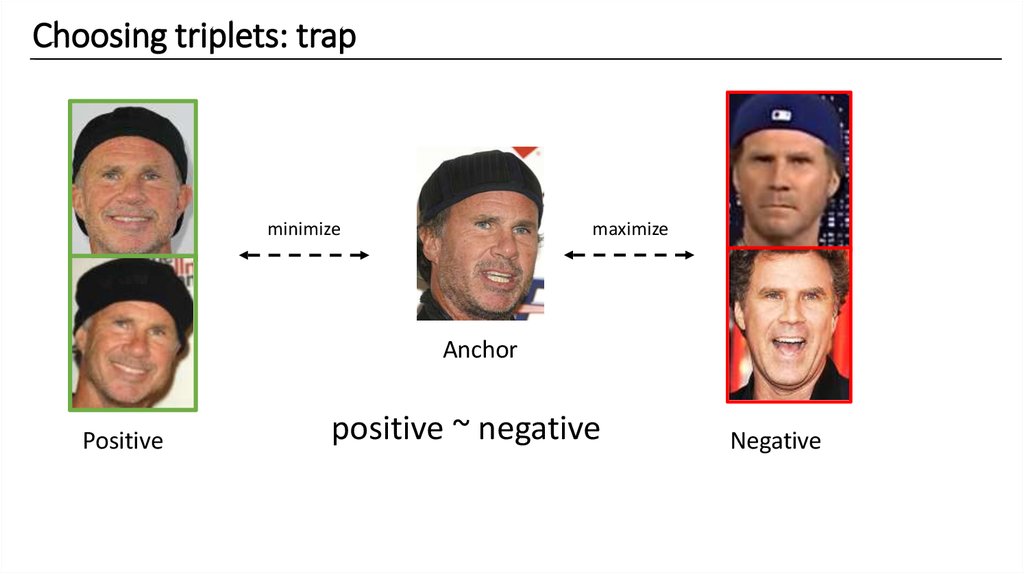

Choosing triplets: trap41.

Choosing triplets: trapmaximize

minimize

Anchor

Positive

positive ~ negative

Negative

42.

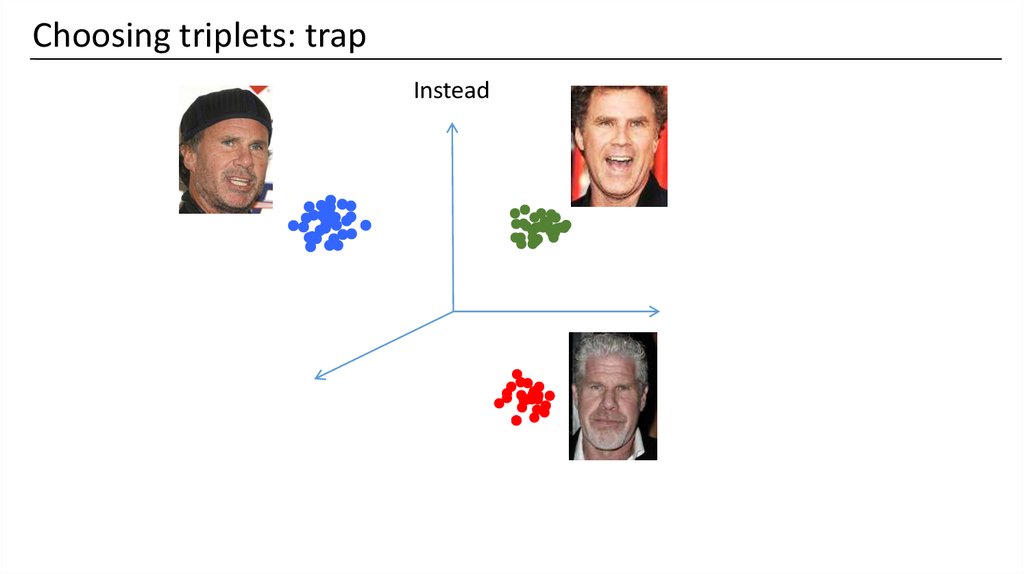

Choosing triplets: trapInstead

43.

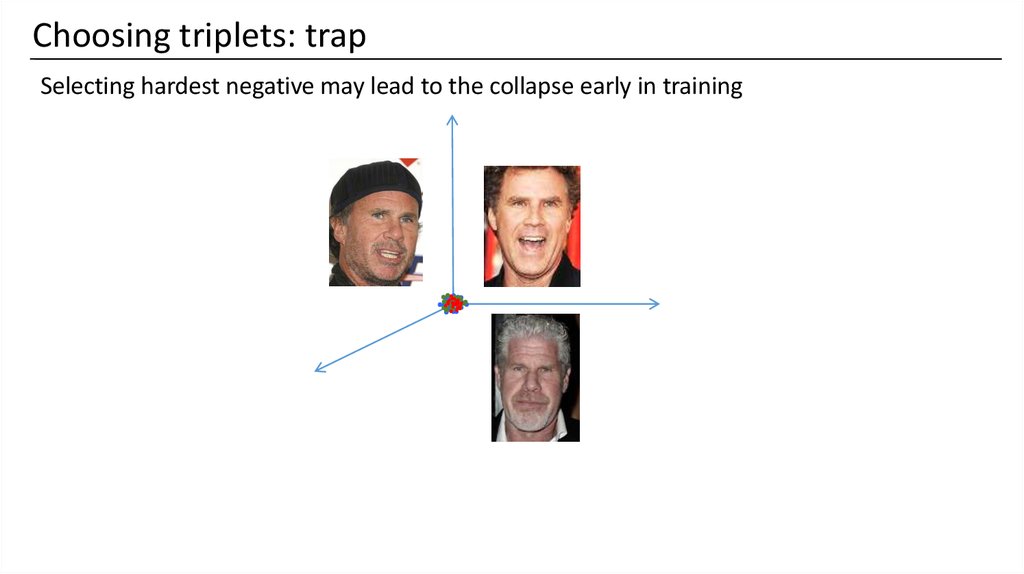

Choosing triplets: trapSelecting hardest negative may lead to the collapse early in training

44.

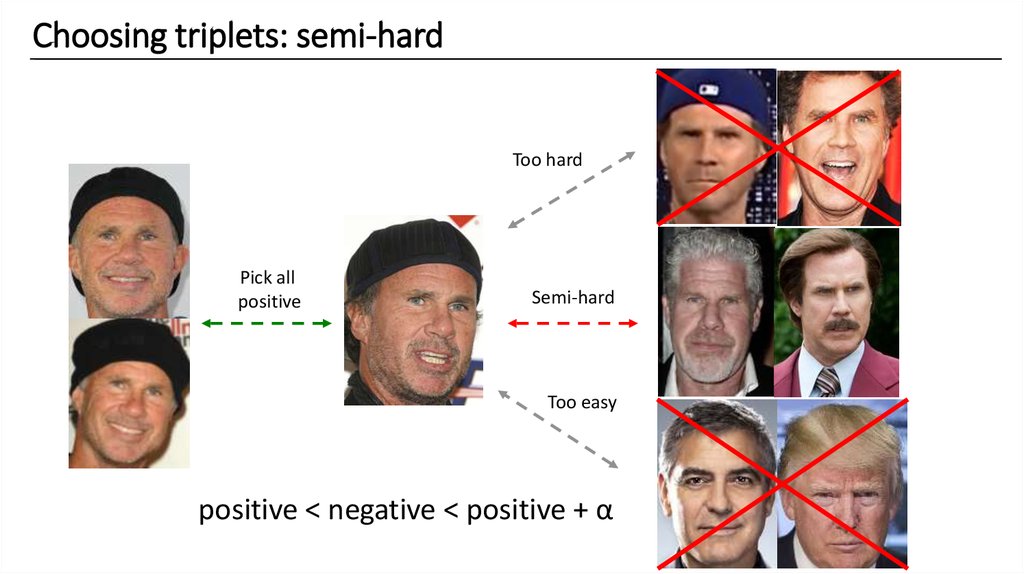

Choosing triplets: semi-hardToo hard

Pick all

positive

Semi-hard

Too easy

positive < negative < positive + α

45.

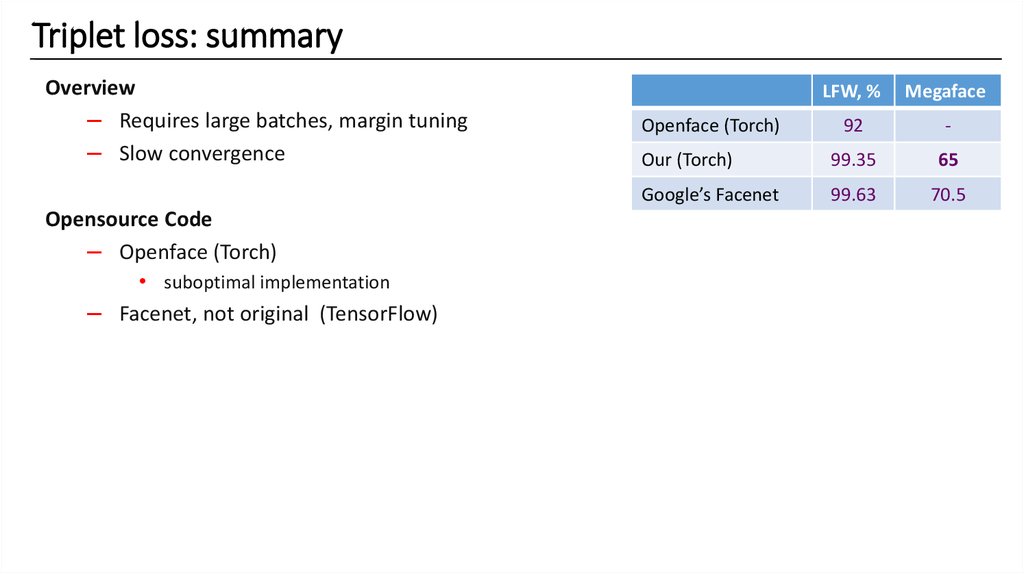

Triplet loss: summaryOverview

– Requires large batches, margin tuning

– Slow convergence

Opensource Code

– Openface (Torch)

• suboptimal implementation

– Facenet, not original (TensorFlow)

LFW, %

Megaface

92

-

Our (Torch)

99.35

65

Google’s Facenet

99.63

70.5

Openface (Torch)

46.

Center lossIdea: pull points to class centroids

47.

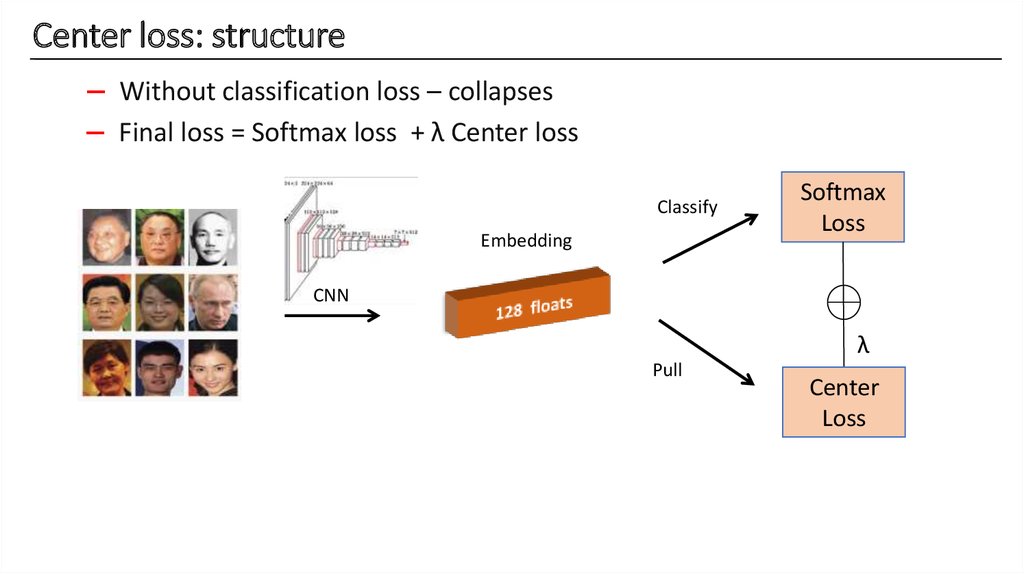

Center loss: structure– Without classification loss – collapses

– Final loss = Softmax loss + λ Center loss

Classify

Embedding

Softmax

Loss

CNN

λ

Pull

Center

Loss

48.

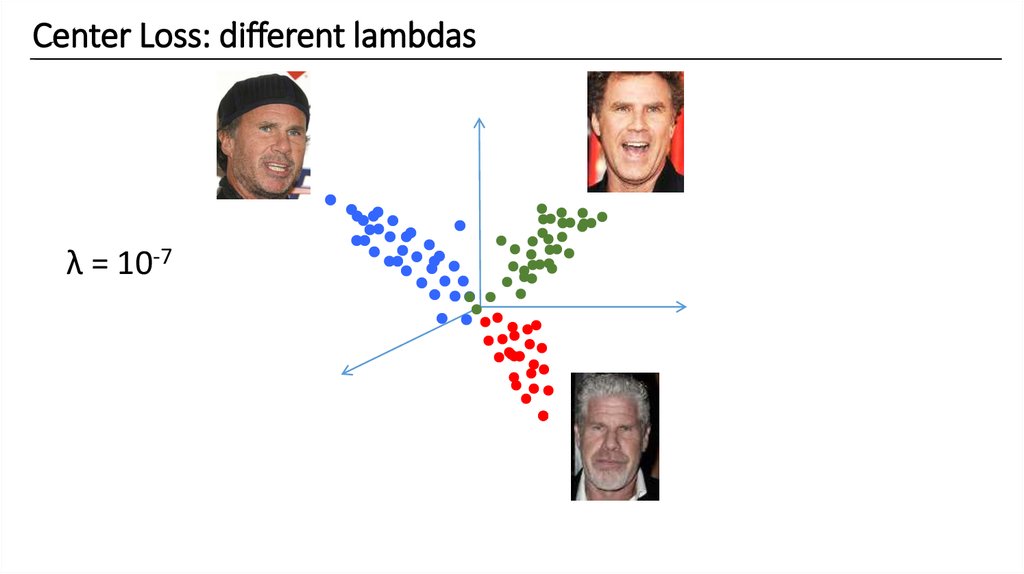

Center Loss: different lambdasλ = 10-7

49.

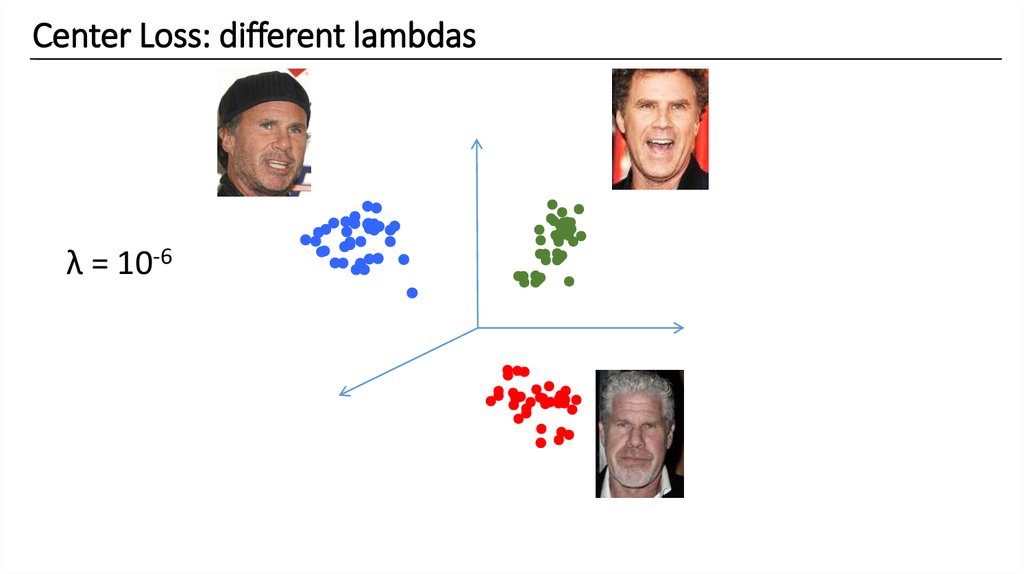

Center Loss: different lambdasλ = 10-6

50.

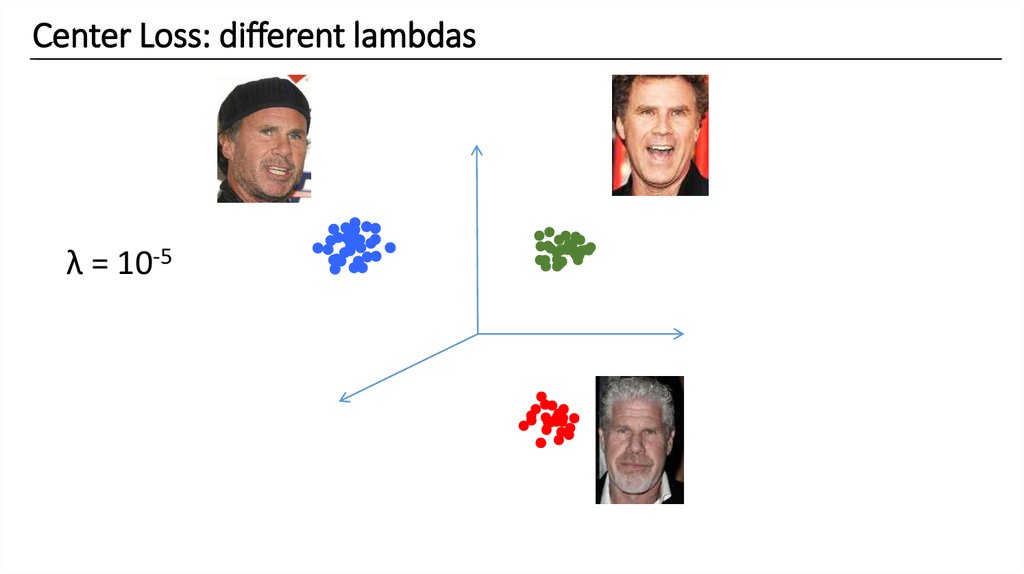

Center Loss: different lambdasλ = 10-5

51.

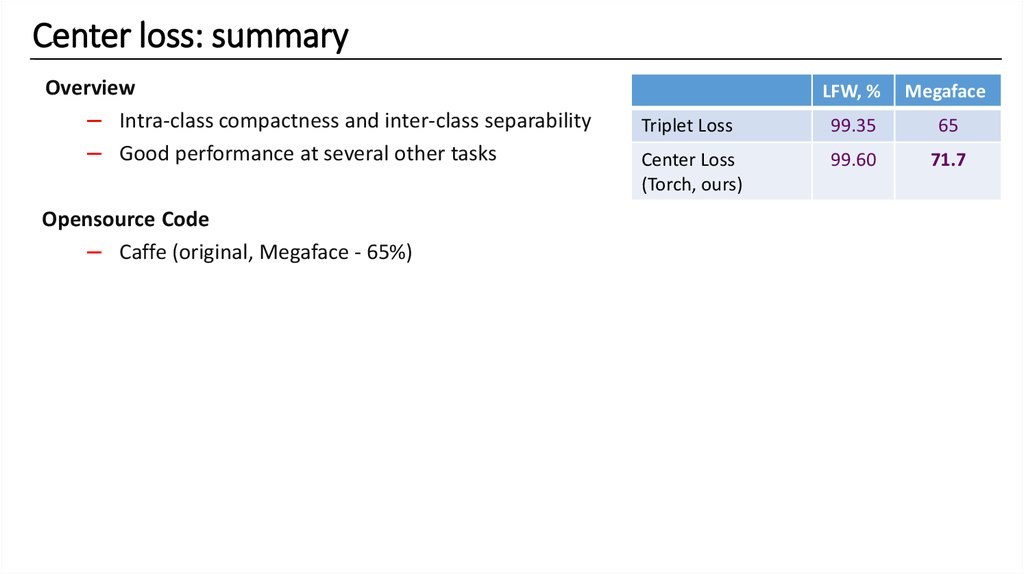

Center loss: summaryOverview

– Intra-class compactness and inter-class separability

– Good performance at several other tasks

Opensource Code

– Caffe (original, Megaface - 65%)

LFW, %

Megaface

Triplet Loss

99.35

65

Center Loss

(Torch, ours)

99.60

71.7

52.

Tricks: augmentationEmbedding

Final

Embedding

Average

Flipped

Embedding

Test time augmentation

– Flip image

– Compute 2 embeddings

– Average embeddings

53.

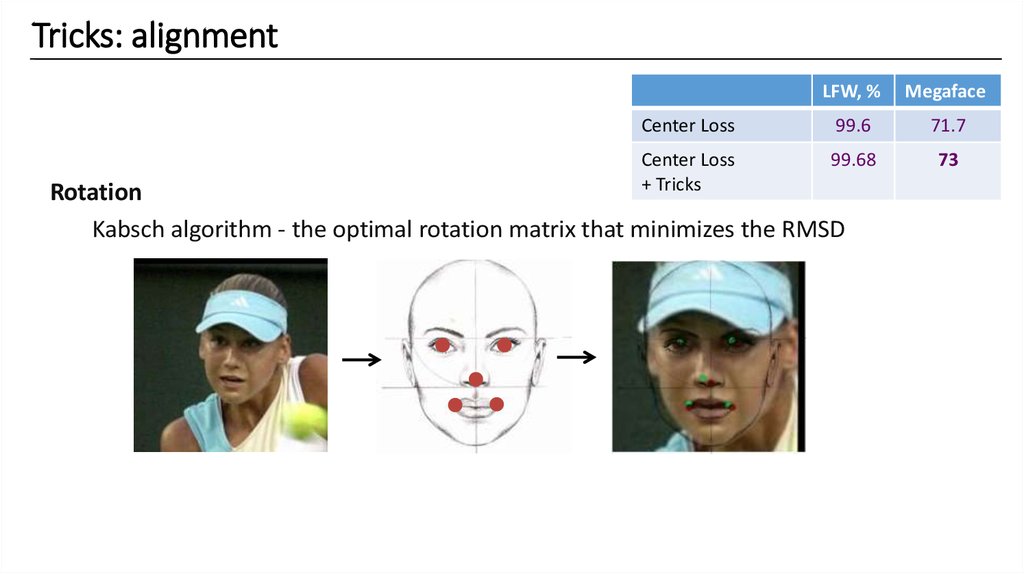

Tricks: alignmentLFW, %

Megaface

Center Loss

99.6

71.7

Center Loss

+ Tricks

99.68

73

Rotation

Kabsch algorithm - the optimal rotation matrix that minimizes the RMSD

54.

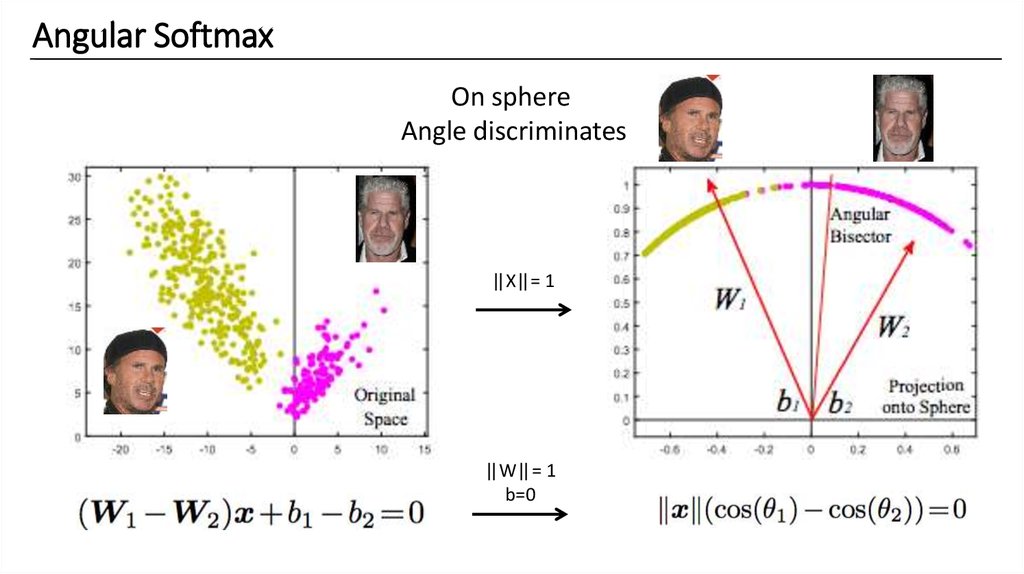

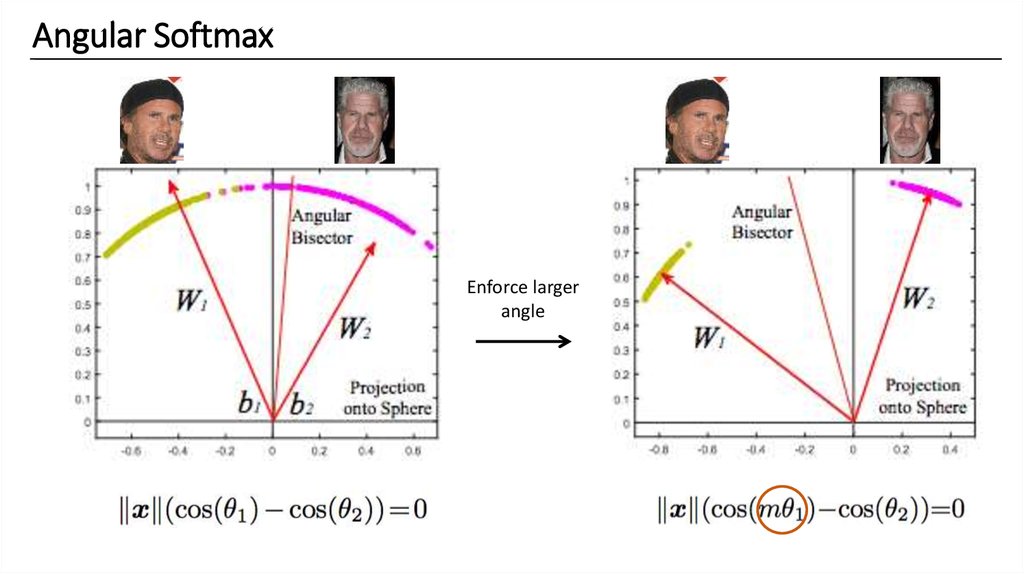

Angular SoftmaxOn sphere

Angle discriminates

||X||= 1

||W||= 1

b=0

55.

Angular SoftmaxEnforce larger

angle

56.

Angular Softmax: different «m»m=1

m=3

57.

Angular softmax: summaryOverview

– Works only on small datasets

– Slight modification of the loss yields 74.2%

LFW, %

Megaface

Center Loss

99.6

73

A-Softmax (Torch)

99.68

74.2

– Various modification of the loss function

CosineFace

ArcFace

58.

Metric learning: summarySoftmax < Triplet < Center < A-Softmax

A-Softmax

– With bells and whistles better than center loss

Overall

– Rule of thumb: use Center loss

– Metric learning may improve classification performance

Center loss

59. Fighting errors

60.

Errors after MSCeleb: childrenProblem

Children all look alike

Consequence

Average embedding ~ single point in the space

61.

Errors after MSCeleb: asianProblem

Face Recognition’s intolerant to

Asians

Reason

Dataset doesn’t contain enough

photos of these categories

62.

How to fix these errors ?It’s all about data, we need diverse

dataset!

Natural choice – avatars of social networks

63.

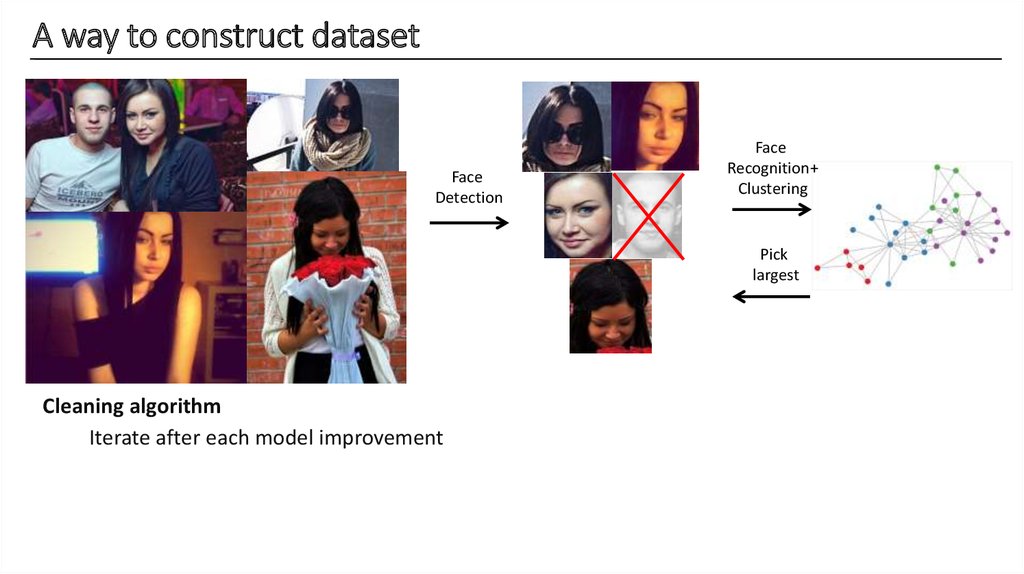

A way to construct datasetFace

Detection

Face

Recognition+

Clustering

Pick

largest

Cleaning algorithm

4.

Pick

the

largest

cluster

as a person

Iterate

after

each

model

improvement

2.

recognition

-> embeddings

3. Face

Hierarchical

clustering

algorithm

1.

detection

64.

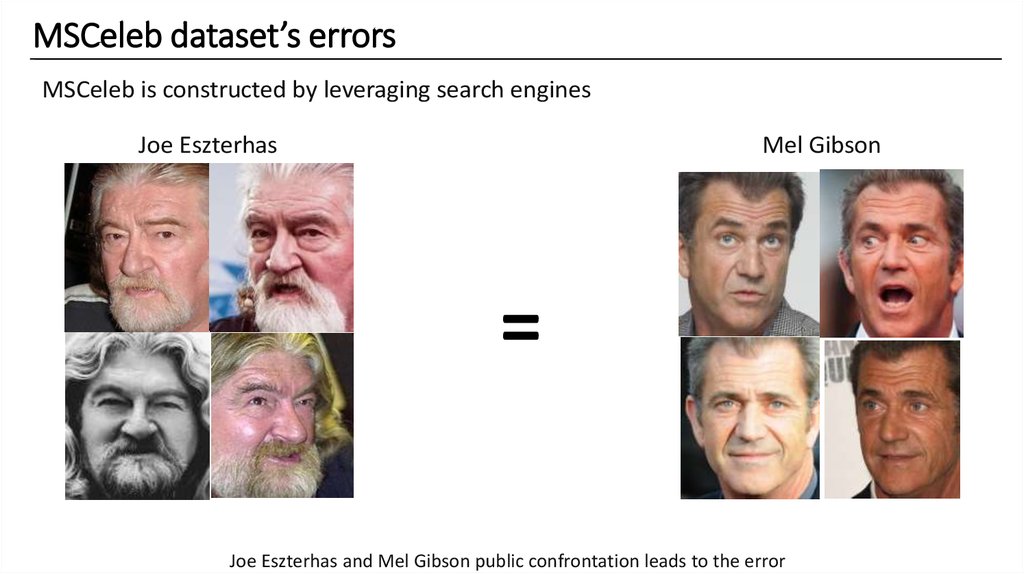

MSCeleb dataset’s errorsMSCeleb is constructed by leveraging search engines

Joe Eszterhas

Mel Gibson

=

Joe Eszterhas and Mel Gibson public confrontation leads to the error

65.

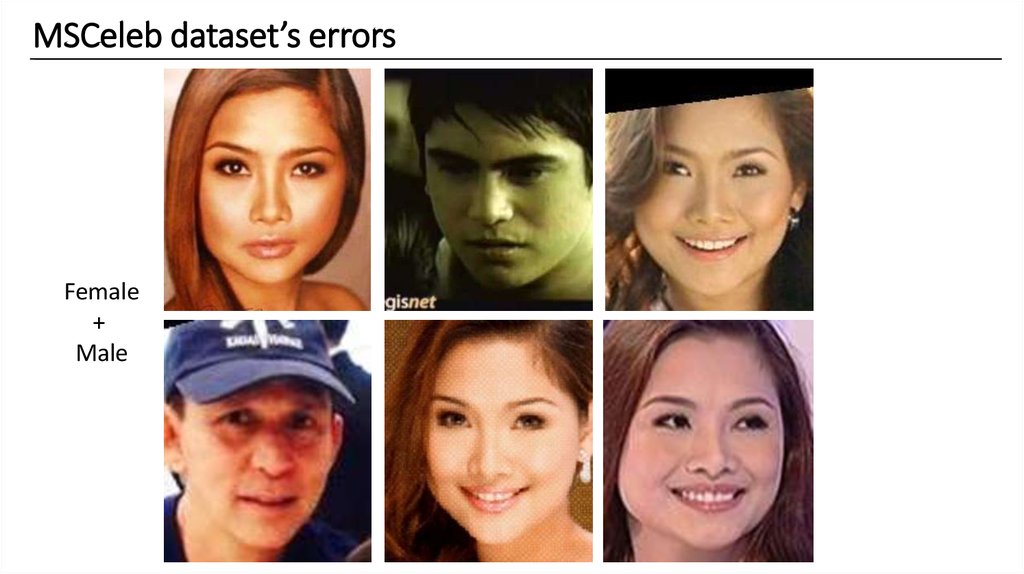

MSCeleb dataset’s errorsFemale

+

Male

66.

MSCeleb dataset’s errorsAsia

Mix

67.

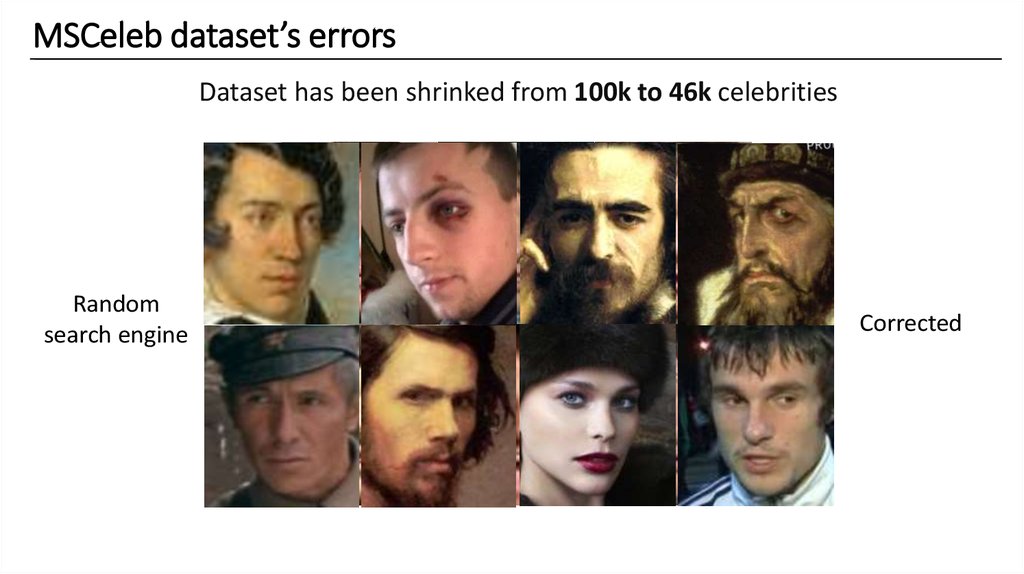

MSCeleb dataset’s errorsDataset has been shrinked from 100k to 46k celebrities

Random

search engine

Corrected

68.

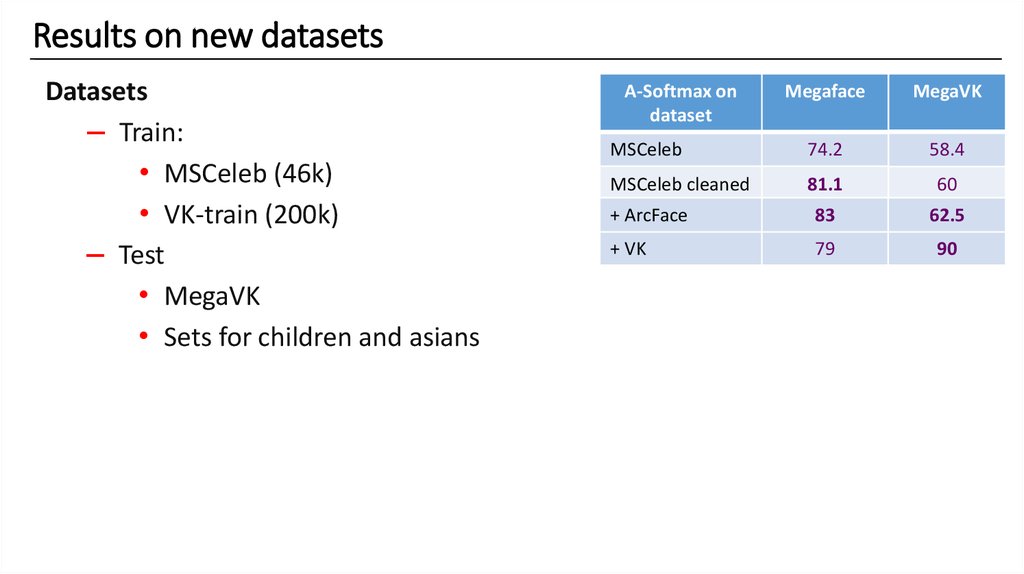

Results on new datasetsDatasets

– Train:

• MSCeleb (46k)

• VK-train (200k)

– Test

• MegaVK

• Sets for children and asians

A-Softmax on

dataset

Megaface

MegaVK

MSCeleb

74.2

58.4

MSCeleb cleaned

81.1

60

+ ArcFace

83

62.5

+ VK

79

90

69.

How to handle big datasetIt seems we can add more data infinitely, but no.

Problems

– Memory consumption (Softmax)

– Computational costs

– A lot of noise in gradients

70.

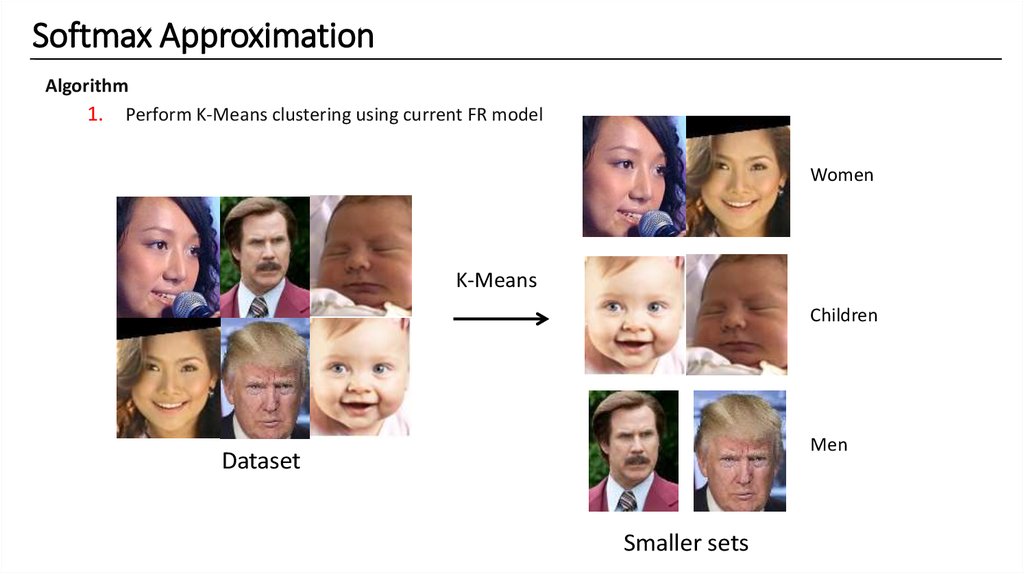

Softmax ApproximationAlgorithm

1. Perform K-Means clustering using current FR model

Women

K-Means

Children

Men

Dataset

Smaller sets

71.

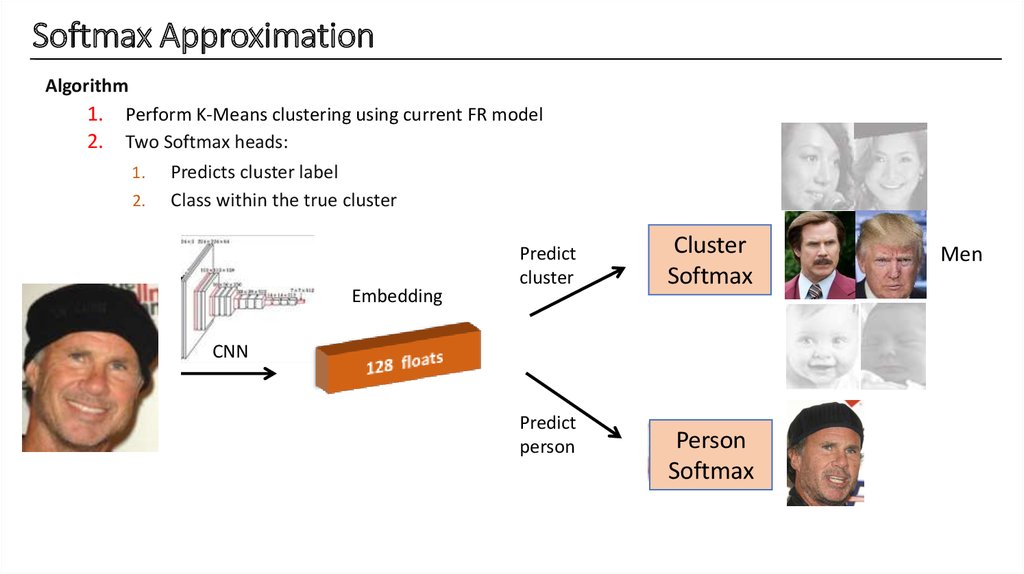

Softmax ApproximationAlgorithm

1. Perform K-Means clustering using current FR model

2. Two Softmax heads:

1.

2.

Predicts cluster label

Class within the true cluster

Embedding

Predict

cluster

Cluster

Softmax

CNN

Predict

person

Men

Person

Softmax

Men

72.

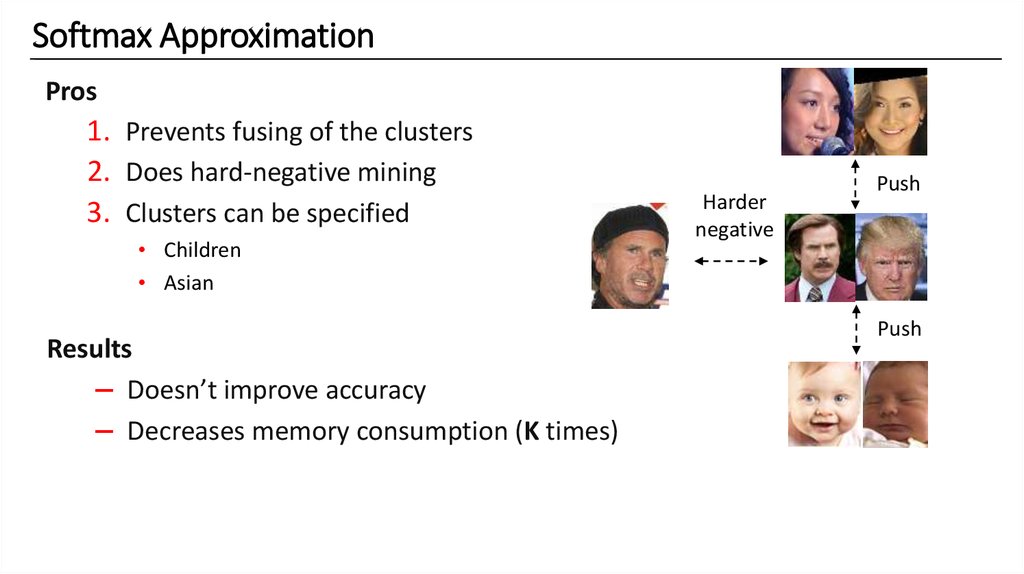

Softmax ApproximationPros

1. Prevents fusing of the clusters

2. Does hard-negative mining

3. Clusters can be specified

• Children

• Asian

Results

– Doesn’t improve accuracy

– Decreases memory consumption (K times)

Harder

negative

Push

Push

73. Fighting errors on production

74.

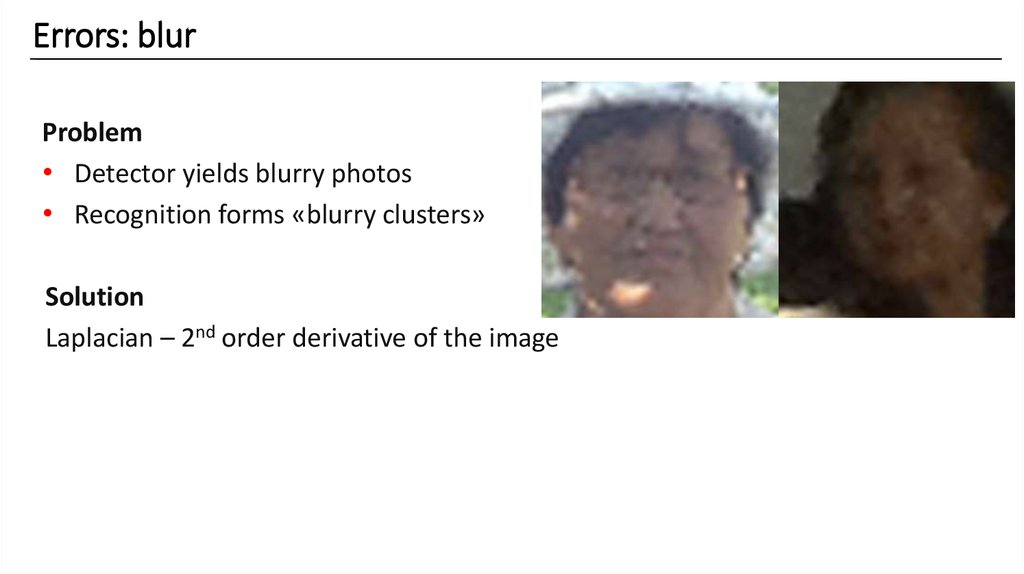

Errors: blurProblem

• Detector yields blurry photos

• Recognition forms «blurry clusters»

Solution

Laplacian – 2nd order derivative of the image

75.

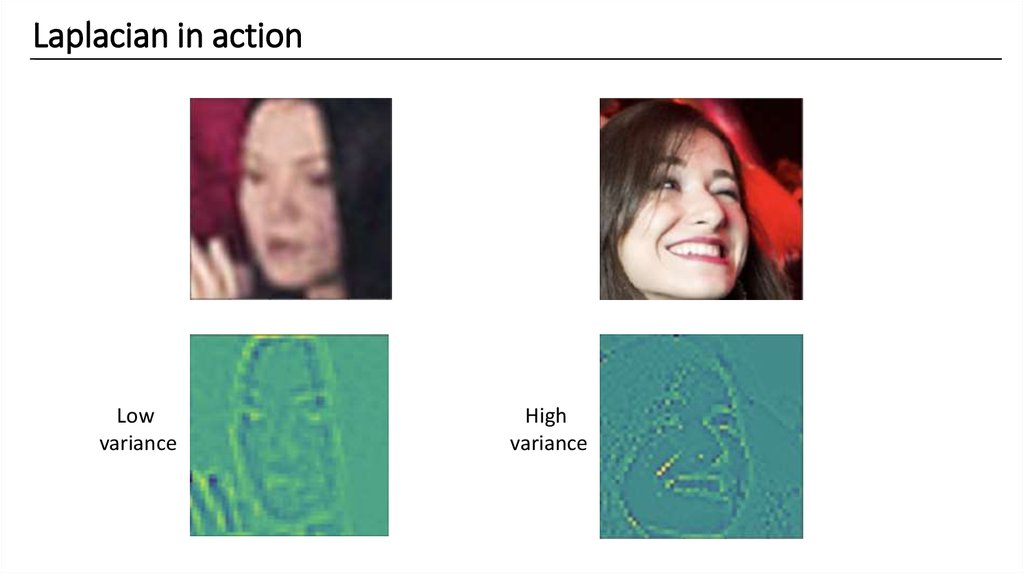

Laplacian in actionLow

variance

High

variance

76.

Errors: body partsDetection

mistakes form

clusters

77.

Errors: diagrams & mushrooms78.

Fixing trash clustersThere is similarity between “no faces”!

Embedding

Embedding

CNN

Specific

activations

79.

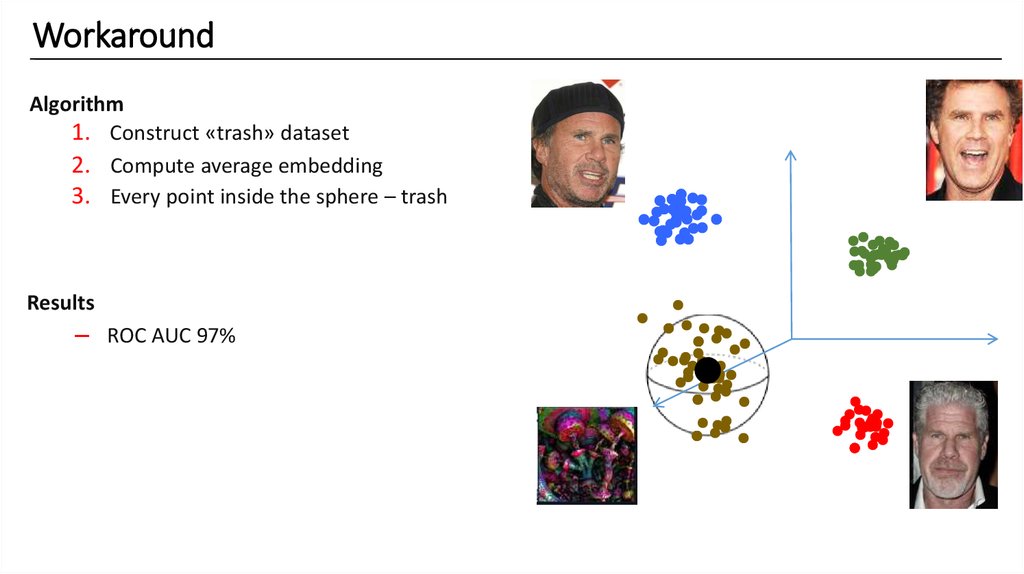

WorkaroundAlgorithm

1. Construct «trash» dataset

2. Compute average embedding

3. Every point inside the sphere – trash

Results

– ROC AUC 97%

80. Spectacular results

81.

Fun: new governorsRecently appointed governors are almost twins, but FR distinguishes them

Dmitriy

Gleb

82.

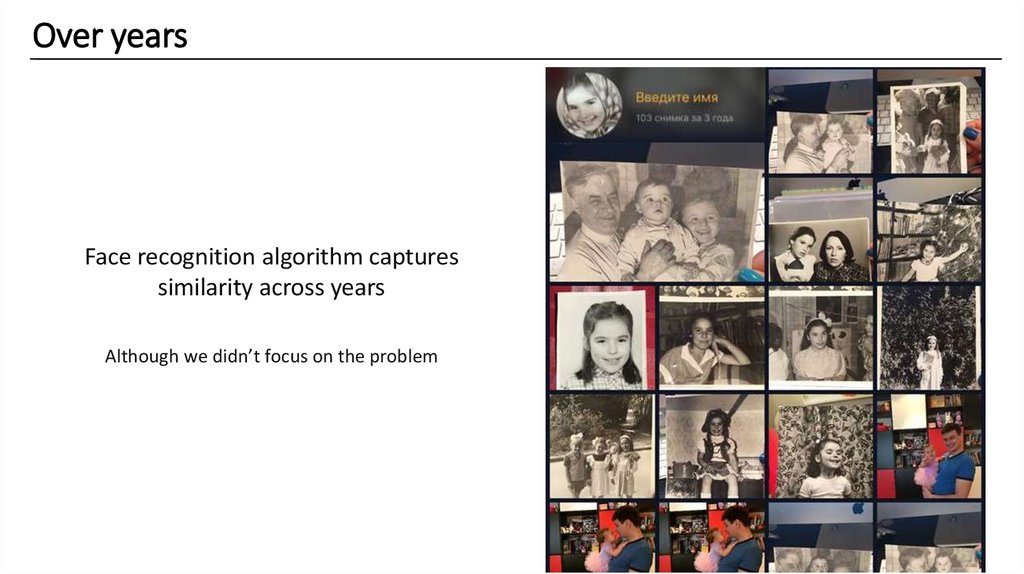

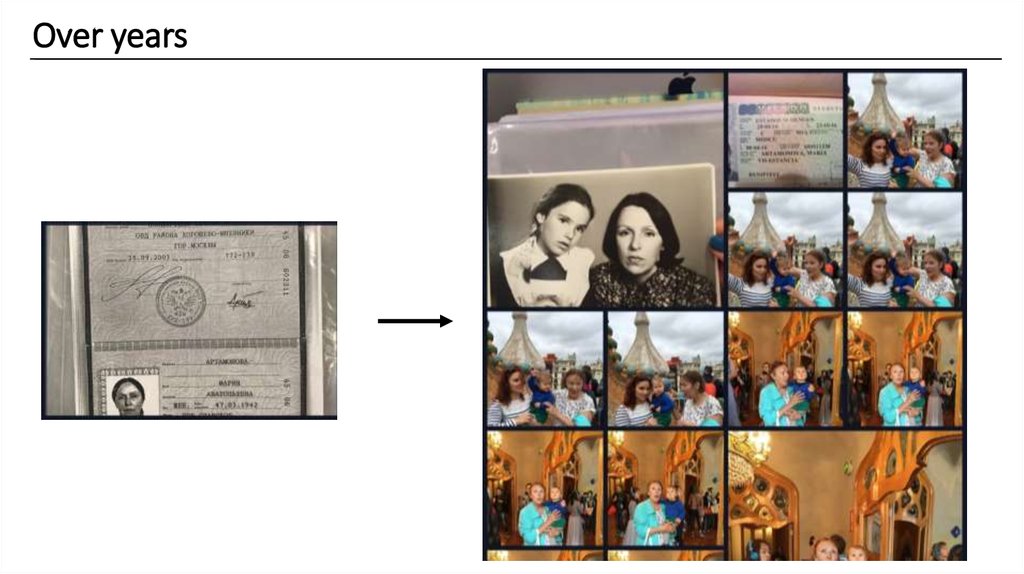

Over yearsFace recognition algorithm captures

similarity across years

Although we didn’t focus on the problem

83.

Over years84.

Summary1.

2.

3.

4.

Use TensorRT to speed up inference

Metric learning: use Center loss by default

Clean your data thoroughly

Understanding CNN helps to fight errors

85.

86. Auxiliary

87.

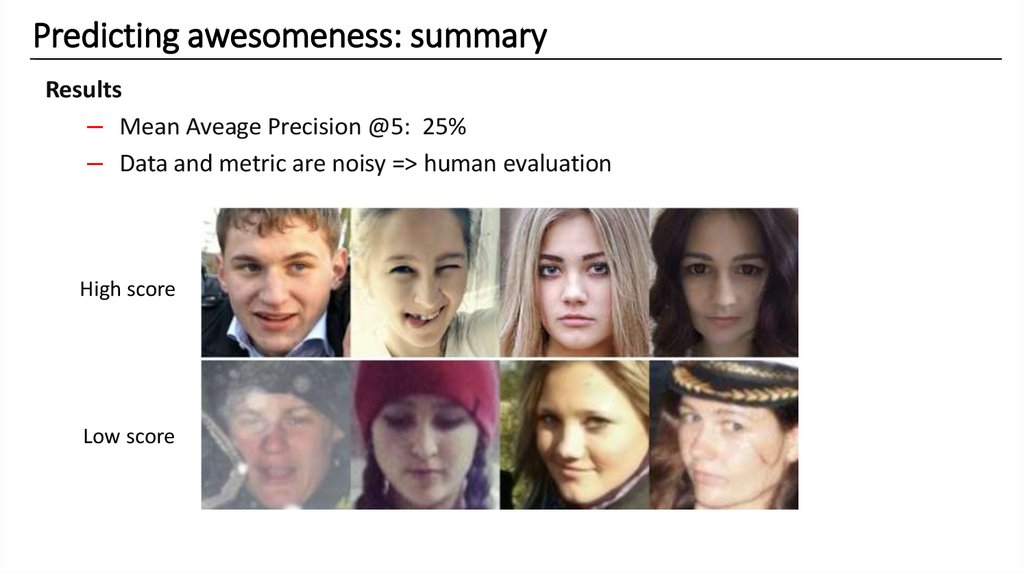

Best avatarProblem

How to pick an avatar for a person ?

Solution

Train model to predict awesomeness of photo

88.

Predicting awesomeness: how to approachSocial networks – not only photos, but likes too

89.

Predicting awesomeness: datasetAwesomeness (A) = likes/audience

A=18%

A=27%

A=75%

90.

Predicting awesomeness: summaryResults

– Mean Aveage Precision @5: 25%

– Data and metric are noisy => human evaluation

High score

Low score

91.

Predicting awesomeness: incorporating into FRface

One more branch in Face Recognition CNN

Small overhead

embedding

awesomeness

Интернет

Интернет